Deep Dive Into The Security for AI Ecosystem

Indepth review of enterprise adoption of LLMs, Security for AI evolutions and the security vendor landscape.

Today, we’re diving into how enterprises are securing their generative AI applications and LLMs after extensive months of discussions with security leaders and founders/CEOs. We’re exploring the key startups tackling this challenge. Our analysis focuses on enterprise AI adoption, a crucial factor that will shape the future of security vendors. I enjoyed collaborating with Zain Rizavi, a very thoughtful and smart VC at Ridge Ventures. If you’re a founder building in this space or a security leader evaluating solutions, please connect. The actionable summary below distills all the key insights you need in just a few minutes.

Actionable Summary

The Evolving Landscape of AI Security To Cloud Security: AI/LLM security is rapidly emerging as a crucial area within the broader cybersecurity landscape. The market, still in its infancy, mirrors the early days of cloud security, where multiple generations of vendors appeared, leading to eventual winners after much consolidation. However, unlike the cloud, AI’s rapid pace of development and lower barriers to entry suggest a potentially more accelerated evolution. This new market holds significant long-term potential, particularly as enterprises continue to adopt AI at scale.

Enterprise Adoption Varying: Enterprise adoption of AI/LLMs is growing, but many organizations are still in the experimental phase, carefully assessing the risks before large-scale deployment. Multiple datasets show that over 90% of IT leaders are prioritizing securing AI and over 77% have experienced a breach in the past year. However, “implementing these AI technologies” hasn’t been widespread due to uncertainties discussed and enterprises being wary of issues like data leakage, governance model hallucinations, and the complexities of managing autonomous AI agents. While some companies have opted to build their own internal solution, we’ve subsequently seen more and more companies focus on evaluating security for LLM vendors with the intent of buying next year. We discuss many of these vendors later in this report.

Not Every AI Is the Same: Differentiating between predictive and generative AI is essential, as they serve distinct purposes and require different security approaches. The latter presents more diverse security challenges due to its capability to create new content and simulate complex decision-making processes.

Vendor Landscape and Market Dynamics: The AI security vendor landscape is diverse, with companies focusing on various aspects of AI/LLM security, from governance and observability to specific security functions like data protection, model lifecycle, and runtime security. One interesting finding from our discussions is that many startups are taking a platform approach to provide a broad range of solutions that secure these LLMs rather than focusing on a niche. One that differs from how traditional security startups work.

Budget allocations for security for AI: Looking ahead, the AI security market is poised for growth, with 2025 expected to be a pivotal year as many enterprise AI experiments transition into large-scale deployments. The demand for AI security solutions is driven by regulatory pressures (Security vendors are capitalizing on the Biden’s AI executive order and European Union Act), the need to safeguard sensitive data and maintain the integrity of AI models. We also observed that CISOs/CIOs are not the only sole evaluators; we see an increase in wallet share from AI research and sometimes CDOs. For many companies, ACVs are hovering around $50K, all the way up to north of $200K for solutions.

Risks today vs. tomorrow: The biggest issues today revolve around enterprises' control over how data goes in and out of their enterprise and how their employees access data—whether PII or data necessary for their jobs. Shadow AI is real. CISOs and teams need visibility into what is being used across employees. Over the long term, we see security risks revolving around models in runtime.

Key assumptions: The market will be big enough for multiple winners over the long-term. However, startups need to evolve their solutions as quickly as the speed of innovation within AI is happening. Over the next few months, we might see market consolidation as vendors, but we conclude that overall, we believe the space is quickly evolving, and it's too early to determine clear market leaders. Once we fully understand the preferred enterprise LLM stack, the nature of cyber attacks then we’ll fully understand the role of security.

What To Expect: This report really focuses on understanding enterprise adoption of AI before delving into how companies are securing these models and the vendor landscape in this market.

Core insight into enterprise paradigm shift

AI Security is a new market with some historical patterns of existing cybersecurity markets. When looking back at previous major paradigm shift for enterprises like the cloud relative to cloud security vendors, we see that the evolution of cloud security saw multiple generations of companies (Dome9, Redlock before the likes of Sysdig and Wiz emerged)—many early entrants were acquired like Evident.io. The second generation (Orca, Aqua) fared moderately well and some died (Lacework). Still, it wasn’t until the third generation that we saw clear winners like Wiz, Palo Alto and Crowdstrike. Similarly, in the AI space, we expect to see a sequence of security vendors emerge. However, unlike the cloud, AI's rapid pace and lower entry barriers mean we might see a more accelerated evolution of security solutions for AI/LLMs.

To begin to define security for AI, it is essential to understand the “how.” The way AI models are developed, sourced, and deployed has undergone a significant transformation, introducing new layers of risk. Traditionally, organizations had a straightforward choice: build models in-house, use open-source models, or rely on third-party, hosted solutions. However, the rise of foundation models—massive, pre-trained models that can be fine-tuned for specific tasks—has shifted the landscape, leading to a widespread reliance on third-party models. This reliance introduces substantial security concerns. While open-source models offer flexibility and cost savings, they may also have hidden vulnerabilities. Even with safer formats, the potential for embedding malicious code within the model architecture itself remains a significant threat.

Quick shoutouts - Thank the partners who support the software analyst newsletter. Sponsors allow me to perform independent analysis and research on my cybersecurity industries and make this research readily available for everyone.

Lasso Security is a comprehensive LLM cybersecurity startup addressing evolving threats and challenges.

LayerX is an enterprise browser security company delivered as a lightweight extension aggregating all activity data for monitoring.

New Paradigm Shift for The Enterprise

It’s fair to say that ever since the release of ChatGPT in 2022, the world of AI has felt like a rollercoaster that isn’t stopping anytime soon. The initial surge of enthusiasm, fueled by the promise of AI-driven productivity, has simmered down, but the momentum is far from over. While most white-collar jobs remain intact, the hype around AI has evolved, with LLMs and AI capabilities beginning to weave themselves into everyday applications. Generative AI is no longer just a buzzword—it’s becoming a fundamental part of how we create, reason, and interact with technology.

Today, AI enables us to generate images, videos, texts, and audio, supplementing human creativity in ways previously unimaginable. More importantly, AI’s ability to engage in multi-step, agentic reasoning allows it to simulate human-like decision-making processes. This evolution marks a significant shift, one that mirrors a similar transformation in the tech landscape over the past two decades—the rise of the cloud.

A Parallel to the Cloud: Learning from Past Shifts

As we’ve discussed earlier in the piece, the last major shift in enterprise technology was the advent of the cloud, which revolutionized applications, business models, and the way people interact with technology. Just as the cloud replaced traditional software with a new model of service delivery, AI is now poised to replace services with software—a change that could have exponential implications. As with any platform shift, the lessons learned from previous generations are critical in navigating the current landscape. The cloud’s evolution taught us that while early movers may set the stage, but it often takes multiple generations for true winners to emerge.

This same principle applies to AI. The deployment of AI/LLMs within enterprises is a paradigm shift akin to the cloud’s impact. We are likely in the early stages, where the eventual leaders in AI security are still taking shape. As enterprises wrestle with decisions about deploying open vs. closed models, these are somewhat adjacent to how companies in the early 2010s thought they could host their own internal clouds, but eventually decided to use the hyperscalers. As new types of cyber threats emerge, we anticipate enterprises will adapt and so will the landscape of AI security will undergo several iterations before the most successful players are established.

The Double-Edged Sword of Innovation

With every new technological shift comes the double-edged sword of innovation. While AI holds immense potential, it also presents new opportunities for malicious actors. The cost of launching sophisticated, nation-state-like cyber attacks is plummeting, and AI’s capabilities are lowering the barriers to entry for attackers more than the cloud ever did. This reality underscores the urgent need for robust security measures and presents a fertile ground for both startups and established companies in the cybersecurity space.

The Next Generation of Security for AI

The analogy with cloud security serves as a roadmap for understanding the potential trajectory of AI security. Just as economies of scale in cloud infrastructure led to the dominance of tech giants like Amazon, Microsoft, and Google, we might see similar dynamics in AI, where the winners will be those who can scale and innovate rapidly. In the case of mobile technology, the early innovator (iOS) built a closed ecosystem, while Google quickly followed with an open ecosystem (Android) that consolidated the rest of the market. AI security could see a similar dichotomy between closed and open models, with the market consolidating around the most effective approach.

As we stand at the precipice of this new era, the landscape is murky, and the path forward is still being charted. However, one thing is clear: the evolution of AI security will be a critical determinant of how enterprises adopt and deploy AI technologies. Just as cloud security eventually matured and stabilized, we expect AI security to follow a similar trajectory—one that we will explore in depth throughout this report.

Broad Categorization of AI Security

AI will impact security in many ways. The two primary categorizations are AI in security and Security for AI. The categorization of companies across AI varies depending on the source. In general, a core similarity exists with all the companies in these areas:

Companies that scan model repositories and dependencies for sensitive data

Companies that act as proxies that sit in the network path and inspect for data along an enterprise’s network traffic

Companies that sit at the application layer as extensions or embedded as code help enforce data security and access right policies.

AI for Security

These are solutions used to automate security solutions across security operations and developers. Although it is not the focus of our article, we wanted to highlight it. It includes how we can leverage AI across a number of areas:

SOC automation: Using AI to improve task efficiency for SOC analysts.

AppSec: We can use AI to do better code reviews, manage open-source or third-party dependencies, and apply for security in applications.

Threat Intelligence: Improvement in AI can better help security teams with threat intelligence and automate manual areas of penetration testing and anomaly detection.

Security for AI

This is the focus of our article. It focuses on security AI/LLMs in production environments. Key shoutout to the team at Menlo Ventures for helping to inform our knowledge here. Broadly, many of the companies in the space fall into these categories:

Governance: They help organizations build governance frameworks, risk management protocols, and policies for implementing AI.

Observability: They monitor, capture, and log data from these AI models when they have been deployed in production. They help capture the inputs and outputs to detect misuse and give teams full auditability.

Security: They implement security of the AI models - specifically identifying, preventing, and responding to cyber attacks for AI models.

AI Access Controls:

Managing how employees securely access GenAI applications and secure enterprise AI applications

Pre-model building / training of data

Data Leak Protection and data loss prevention

Identifying PII Identification and redaction

Red teaming

Model building/lifecycle management

Model vulnerability scanning and monitoring

AI Firewall and network traffic inspection

Threat detection & response

Enterprise Adoption

Before diving into the role of security for AI, it's critical we understand how enterprises are deploying them today. The promise of Gen AI is its role to drive productivity and cut costs as companies incorporate technologies like LLMs and RAG.

Despite its promises and leaders' willingness to use AI to drive better results, many enterprises are still figuring out how to use it. Some organizations are further than the rest, but many are still in the experimental mode—evaluating its pros and cons before deploying it at a large scale.

[Idea] Predictive vs. Generative AI. It’s important to be clear that predictive and generative AI are different; both serve different purposes. Predictive AI focuses on analyzing existing data (trained labeled datasets) to make informed future predictions or decisions, such as recommending products. In contrast, generative AI goes beyond analysis to create entirely new content, like text, images, or music, that mirrors the patterns found in its training data. It is easy for people to mix it up.Enterprise Adoption of AI

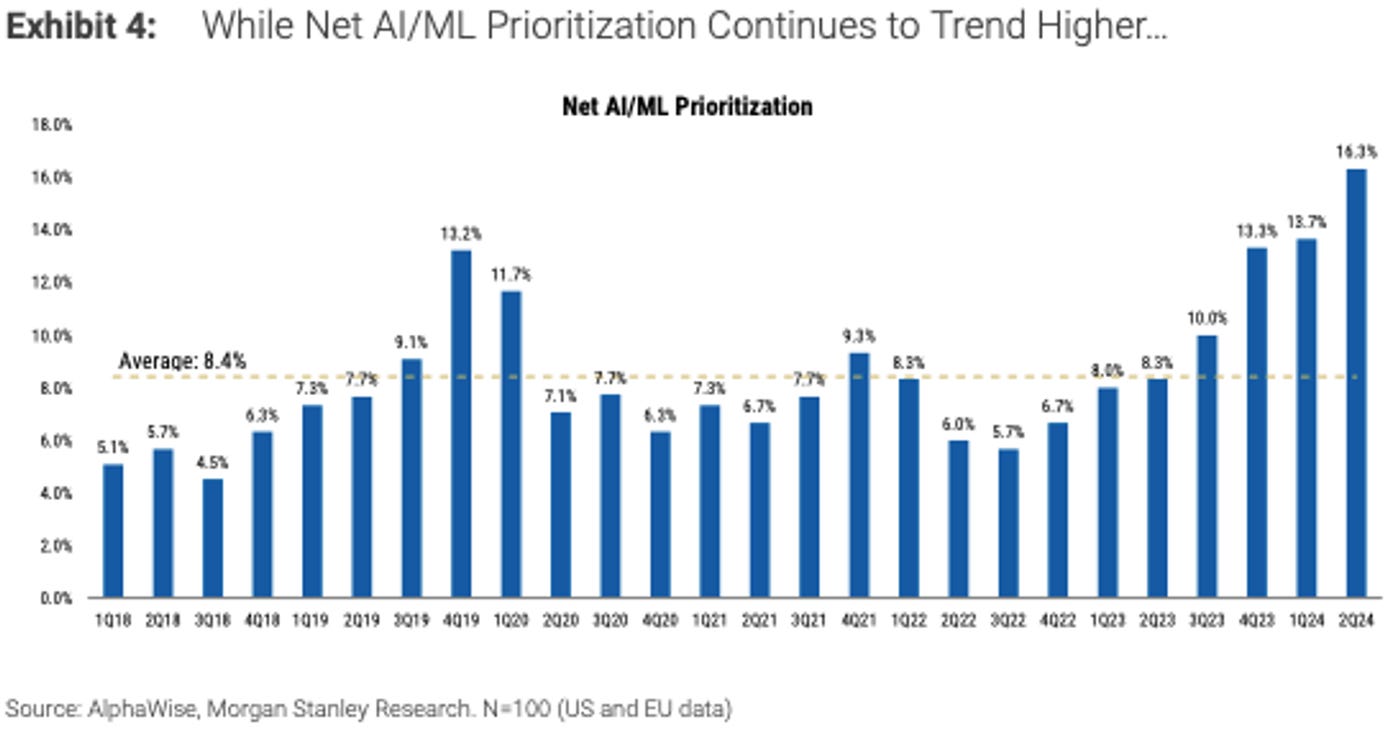

Morgan Stanley recently published some data in the report, “US Tech: 2Q24 CIO Survey - Stable Budgets, Nervous CIOs,” showing CIOs are prioritizing initiatives around Net AI/ML projects. As of 2Q24, Artificial Intelligence (AI)/Machine Learning (ML) was #1 on the CIO priority list, increasing in net prioritization (~16% of CIOs citing it as a top priority) from 1Q24. AI/ML realized the largest QoQ increase in prioritization in their 2Q24 survey. It is important to note that they are called generative AI. Although not surprising, the MS Q2 data underscores increasing CIO fervor surrounding Generative AI technologies. Additionally, 75% of CIOs now indicate direct impacts from Generative AI/LLMs to 2024 IT investment priorities, an uptick from 73% of CIOs in 1Q24 and 68% of CIOs in 4Q23).

Interestingly, despite CIOs prioritizing AI/ML, there is a moderation for the actual implementation of these AI/ML projects within their projects. They made it clear that they were waiting for Gen-AI spending to drive software budgets higher materially. A relevant quote is important to add: “At the same time, we await an innovation-driven spending cycle (driven by Generative AI) to drive Software budgets higher, but the timing of that cycle if anything may be getting pushed out given a lack of conviction in the reference architectures for how to build Generative AI solutions and the relative nascency of Generative AI solutions being built into packaged application offerings.

In general, most of the data from Morgan Stanley proves our hypothesis that while most enterprises want to drive AI initiatives, it will still be a long haul until complete execution and deployment. Based on our discussions, many companies believe that it will take them until 2025 before they have large implementations and establish spend for their products.

At this point, the benefits of Gen AI are clear. However, the risks are much bigger and unclear, making large-scale deployment much slower. Boston Consulting Group's (BCG) report of 2,000 global executives last year found that more than 50% actively discouraged GenAI adoption because of a wide variety of risk factors. Some of these risks center around security, governance, regulations, and outputs of these models.

Any enterprise moving fast to deploy AI today is likely faced with how they can deploy governance protocols around managing how sensitive data flows in and out - either for the customers they serve or internally. Companies have to manage how they enforce both internal policies and external regulatory frameworks around PII. Also, the cost of training models is still expensive, and some of the model outputs still produce hallucinations.

Enterprise Approaches to GenAI Tools

As most of this report has discussed, many enterprises have either opted to block access to GenAI tools such as ChatGPTentirely by blacklisting their URL within the organization's firewall. While this approach reduces risk, it may lead to productivity losses and does not address issues related to remote workers or unmanaged devices.

Risks Associated With GenAI Tools

Unintentional Data Exposure: Employees might inadvertently expose sensitive data by pasting it into ChatGPT, leading to unintended disclosures.

Malicious Insider Activity: A malicious insider could manipulate GenAI tools by feeding it sensitive data through carefully crafted questions, enabling data exfiltration when the same questions are asked from an unmanaged device.

Risky AI-powered Browser Extensions: These extensions can exfiltrate any data from any web application your users are using.

Enterprises are using browser extension security companies like LayerX to protect their devices, identities, data, and SaaS applications from web-based threats that traditional endpoint and network solutions may not address. These threats include data leakage, credential theft through phishing, account takeovers, and the discovery and disablement of malicious browser extensions, among others.

In the realm of generative AI, companies like LayerX play a crucial role. By deploying a browser security extension, they enable organizations to apply monitoring and governance actions within GenAI tools like ChatGPT. This includes blocking access, issuing alerts, and preventing specific actions such as pasting or filling in sensitive data fields. These controls help employees enhance productivity while safeguarding sensitive information.

The extension provides insights into both user-initiated and web page-initiated browsing events. This capability allows the extension to identify and mitigate potential risks before they materialize. Since GenAI tools are accessed via a web session, the extension can oversee and govern how users interact with it, including determining which actions are permitted based on the context. LayerX also integrates with other MDM, firewall, and EDR vendors to bolster browser security.

Enterprise Adoption of AI/LLM: Open vs. Closed Source Models

As we dive into understanding how enterprises will adopt AI/LLMs in the future, it's equally important to assess whether companies will adopt open or closed models, how that impacts the future, and the role of security. There is still much debate on whether open vs. closed-source models will be the leading models on the market. The major CSPs and AI companies are investing a lot of capital to win.

Closed-sourced LLMs are leading the way.

Today, the leading models are closed-source companies like ChatGPT-OpenAI, Claude by Anthropic, and gradually BERT/Gemini by Google. As of today, closed-sourced models like OpenAI are the leading model used by enterprises globally.

The recent Emergence Capital (“Beyond Benchmarks report”) estimates OpenAI with ~70-80% market share of LLM deployments today, with Google Gemini a distant second at ~10%. Enterprises often choose closed-source models like Open-AI because of their proven stability and performance at scale. These models come with comprehensive support, regular updates, and integration services, making them ideal for mission-critical applications. There is also Claude by Anthropic, which is focused on ethical AI is designed to be safer and more aligned with enterprises interested in ethical AI.

Closed-source models like OpenAI offer plug-and-play solutions with easy integration into existing enterprise systems. This can be appealing to enterprises looking for quick deployment without extensive in-house AI expertise.

Morgan Stanley’s research shows Microsoft is a significant beneficiary. They observed in their 2Q survey that 94% of CIOs indicate plans to utilize at least one of Microsoft's Generative AI products (Microsoft 365 Copilot & Azure OpenAI Services screening as the most popular) over the next 12 months, a significant uptick from 63% of CIOs in 4Q23 and 47% of CIOs in 2Q23. Looking ahead to the next three years, a similar 94% of CIOs indicated plans to use Microsoft's Generative AI products, which suggests this level of adoption should be durable.

Also, it is vital to note that Microsoft’s Security for LLMs for AI/LLM is gaining traction. According to Microsoft’s last earning call, over 1,000 paid customers have used Copilot for Security.

Open-source models

The popular ones today are LLaMA 2 by Meta and Mistral. There has been a debate about open-source models because they allow enterprises to customize and fine-tune the models to suit their specific needs. This flexibility is crucial for organizations with unique requirements or those that wish to differentiate their AI applications from competitors. The most significant benefit is that with open-source models, enterprises can deploy the models entirely within their infrastructure, ensuring complete control over data privacy and security. This is particularly important for industries with stringent data protection regulations.

Cost considerations – while the initial setup and expertise required to deploy open-source models may be higher, the long-term cost is generally lower as there are no ongoing licensing fees compared to closed-source model companies. Enterprises that have in-house AI expertise often prefer this route for its cost benefits.

Implications for cybersecurity: Closed vs open models

It is vital to consider the role of cybersecurity vendors in a world where either open or closed models take the most market share.

In an open-source world, cybersecurity vendors must account for the vast diversity in model deployments. Each enterprise may modify the model differently, requiring the vendor to offer adaptable and customizable security solutions. This adds complexity but also creates opportunities for niche solutions tailored to specific industries or enterprise needs. Open-source models provide full transparency into the model's architecture and code, allowing cybersecurity vendors to analyze and secure the model thoroughly. Since enterprises deploying open-source models often retain complete control over their data, vendors must focus on securing on-premises infrastructure and ensuring data privacy.

In a closed-source model world, closed-source models tend to have more standardized deployments across enterprises. This uniformity can simplify the development of security solutions. With closed-source models, vendors may have limited access to the model's internals, making it challenging to develop deeply integrated security solutions. They may have to rely on APIs or other interfaces provided by the model's owner, potentially limiting the depth of security measures that can be applied. There is also a question on whether cybersecurity vendors need to partner with Open-AI to indeed provide in-depth security or whether Open-AI would buy more in-depth security solutions.

Enterprise Risks & Attacks

The emergence of generative AI and large language models (LLMs) has played a pivotal role in bringing AI security and reliability to the forefront. As enterprises deploy AI/LLMs, they introduce a new set of risks and vulnerabilities that cyber attackers can exploit. Although these issues have existed for some time, they were often marginalized, with critics dismissing them as theoretical problems rather than practical concerns. The ambiguity surrounding the responsibility for AI security—whether it was a safety issue for security teams or an operational matter for machine learning (ML) and engineering teams—has now been resolved.

Blurring lines between the control and data plane

One of the more subtle yet profound challenges posed by AI, particularly generative models, is the convergence of the control plane and data plane within applications. In traditional software architectures, there is a clear separation between the code that controls the application and the data it processes. This separation is crucial for minimizing the risk of unauthorized access or manipulation of data.

Generative AI models blur this distinction by integrating control and data within the model itself. This integration often exposes inference processes directly to users, creating a direct link between user inputs and model outputs. Such exposure introduces new security vulnerabilities akin to the risks associated with SQL injections in conventional applications. An everyday use case is prompt injection attacks, where malicious users craft inputs to exploit model behaviors. These attacks are an emerging threat in the context of LLMs.

Moreover, the use of third-party models raises concerns about data security. Proprietary data, including sensitive information such as personally identifiable information (PII), is often fed into these models during inference, potentially exposing it to third-party providers with unknown or insufficient security practices. The risk of data leakage, whether through model providers misusing the data or through unintended outputs, underscores the critical need for rigorous data security measures in AI systems.

Classification of risks

The industry is working on standardizing the top risks and attacks, but so far, we haven’t experienced a significant breach that has redefined the industry. The most popular standards used across the industry are the OWASP Top 10 Risks for AI/LLMs or MITRE ATLAS. We’ll explore the other risks in more depth later.

There are prominent risks include membership inference attacks, model extraction attacks, evasion attacks, backdoor attacks, jailbreaking attacks, and reinforcement risks. At the most basic level, the biggest problem for many enterprises is managing how their enterprise data flows in and out as employees use chatbots and access GenAI applications, especially given the 44% growth in employee usage of GenAI apps.

However, moving forward, organizations will face large-scale risks —primarily around managing autonomous agents and LLMs in production. One of the best ways to understand the risk factors with AI is by looking at the entire supply and where to develop a model. Google created the AI security framework. Similar to how we think of risks across the software supply chain, enterprises face different types of attacks across the ML supply chain.

Similarly, but at a broad level. We can evaluate the risks of ML supply chain attacks. Models can be manipulated to give biased, inaccurate, or harmful information used to create harmful content, such as malware, phishing, and propaganda used to develop deepfake images, audio, and video leveraged by any malicious activity to provide access to dangerous or illegal information.

Adversarial & Model Inversion Attacks

These are sophisticated forms of cyberattack in which the attacker subtly manipulates input data to deceive an AI model into making incorrect predictions or decisions. These attacks take advantage of the model’s sensitivity to specific features within the input data. By introducing minor, often imperceptible changes—such as altering a few pixels in an image—the attacker can cause the AI model to misclassify the data.

For example, in an image recognition system, a carefully crafted adversarial attack might result in a model incorrectly identifying a stop sign as a speed limit sign, potentially leading to dangerous real-world consequences. These types of attacks are particularly concerning in critical applications like autonomous vehicles, healthcare diagnostics, and security systems, where the reliability and accuracy of AI models are paramount. The ability of adversarial attacks to subtly undermine AI models without obvious indicators of compromise highlights the need for advanced defensive strategies, such as adversarial training and robust model evaluation techniques.

Model Inversion is an attack that enables adversaries to reconstruct sensitive input data, such as images or personal information, by analyzing an AI model's outputs. This poses serious privacy risks, particularly in applications like healthcare and finance. Protecting against this requires careful management of model exposure and output handling.

Data Poisoning

It is another significant threat to AI systems, involving the deliberate injection of malicious or misleading data into the training dataset of an AI model. The goal of this attack is to corrupt the model’s learning process, leading to biased or incorrect outcomes. For instance, if an AI model is designed to detect fraudulent transactions, an attacker could introduce poisoned data that falsely labels certain fraudulent activities as legitimate. As a result, the model may fail to detect actual fraud when deployed in a real-world environment. The danger of data poisoning lies in its ability to compromise an AI model from its foundational level. Once a model has been trained on poisoned data, the inaccuracies and biases are deeply embedded, making it challenging to identify and rectify the issue. This type of attack is particularly insidious because it can remain hidden until the model is deployed, by which time the damage may already be significant.

Model outputs

Generative models, by their very nature, can produce an almost limitless range of outputs. While this flexibility is a key strength, it also introduces a vast array of potential challenges. The evaluation for such a model is relatively straightforward, focusing on categorical correctness within a narrow scope.

In contrast, the evaluation of generative AI models is far more complex and multifaceted. It’s not sufficient to merely determine whether the model’s output is technically correct. Stakeholders must also assess the output's safety, relevance to the context, alignment with brand values, potential for toxicity, and risk of generating misleading or "hallucinated" information. This complexity makes the task of ensuring the reliability of AI systems both challenging and nuanced, requiring a broader and more sophisticated evaluation approach.

The performance evaluation of discriminative models is typically straightforward. It relies on well-established statistical metrics to quantify how accurately the model’s predictions align with known outcomes. These models, often trained on labeled datasets, allow for a clear, objective assessment of performance based on historical data.

However, generative models, such as those used in chatbots and other natural language processing (NLP) applications, present a different set of challenges. The quality and appropriateness of their outputs are not only multi-dimensional but also highly subjective. What is considered "good," "safe," or "useful" can vary significantly depending on the context, audience, and individual preferences.

Enterprise Adoption of AI Security Tools

Throughout our research, we spoke with several CISOs, CIOs, and buyers who were involved in assessing AI security solutions. We sensed that the current adoption – while still nascent – is starting to mature. The majority of the CISOs are purchasing new Generative AI solutions (increasingly) based on the following:

First, this is still a buy-compared-to-build solution, as most of the in-house AI talent is allocated to developing models or LLMs. At the same time, simultaneously, security teams need to be equipped to create a solution. This includes not only security but also teams where there are cross-functional overlaps, such as data, BI, or even new Generative AI teams.

Security aspects

Second, organizations and enterprises are trying to ensure that the employees and data are currently secure. Here, we see the traditional methodology borrowing the ZTNA framework, including DLP, CASB, and some SWG-like solutions acting as the proxy between what employees are using directly (interfacing with ChatGPT) in addition to using a SaaS-based tool that currently leverages AI (e.g., Canva). Simply, AI in/egress. When we asked these leaders how they’re deciphering the best solution among the many start-ups competing in the space, it usually is a mixture of the following:

It needs essential visibility into who is using what at all times

To ensure that no sensitive data is shared with an external AI/LLM platform

There is the basic RBAC for who has access to what (branded as Shadow AI).

Thirdly, enterprises are starting to buy AI security solutions ahead of actual need. While this sounds counter-intuitive, we heard from several public company CISOs that the board/shareholders are demanding that we have AI Guardrails and security measures in place. The analogy we like to use: It’s almost like hiring a set of bodyguards to protect a blank canvas, but the general belief is that once the canvas is painted, it’s nearly going to be a Picasso or a Mona Lisa. Relatedly, we’ve heard that more significant, more sophisticated enterprises will require companies to have an “AI-SBOM,” which is a detailed inventory or list of all components, dependencies, and elements used in creating and deploying AI or LLMs. In the context of AI, the SBOM includes the following:

Data Sources: The datasets used to train the AI model, including metadata about where the data was sourced, how it was processed, and any biases or limitations.

Algorithms and Models: The specific machine learning algorithms, models, or frameworks (e.g., TensorFlow, PyTorch) used in the development process, including versions and configurations.

Software Libraries and Dependencies: All software libraries, packages, and dependencies that the AI system relies on, including their versions.

Training Processes: Details about the training processes, including the environment, resources, and any specific hyperparameters used.

Hardware Dependencies: If the AI system requires specific hardware (e.g., GPUs), this would also be included in the SBOM.

Security and Compliance Information: Information related to security vulnerabilities, compliance with regulations, and any certifications or audits conducted on the AI system.

A subset of sophisticated customers was the first movers with respect to adopting AI. We spoke with one customer who created one of the first customer-facing chatbots using AWS and LangChain. They currently use a Generative AI security solution that sits in front of the chatbot (via API) to ensure that no sensitive information is being shared externally. In the same vein, buyers are getting smarter about evaluating the efficacy of ensuring that no sensitive information is leaked. Here, companies (which we’ll cover below) are also applying their LLMs in addition to constant fine-tuning to ensure that the efficacy is high (>90% passes POCs). At the same time, latency is low (no good metric today). Related to fine-tuning, we’re gathering from CISOs/CIOs and buyers – knowing outright that this fits in the buy category, they’re starting to ask providers how much adversarial testing has gone through their models and what results, if any, they can share. Some sectors are leveraging these providers: Government, Financial Services, Automotive, Oil/Energy, Travel, and Tech/e-commerce. Across all of the sectors, there tend to be user cases centered around model security, perimeter defense, and user security. We’ve seen the travel industry become one of the faster movers to adopt chat agents. Hence, adopting an AI security provider is imperative.

While it’s easy to be disparaging about how accurate the Chatbot use case is for the long-term of the industry, it’s a clear indication that there is an appetite for using a holistic solution that protects employees, sensitive data, and customer/internal-facing AI applications. The next clear sign of evolution for AI security is protecting agentic applications. Helping enterprises to safely develop and deploy their first non-determining computing applications that go beyond their first chatbots feels like a huge opportunity and, by far, is the most premature today. We are starting to see some companies take this leap, such as Apex Security, with their new AI agent's risk and mitigation services.

We spoke with Ashish Rajan of the AI cybersecurity security podcast who shared some similar thoughts on the current challenges:

Currently, the current AI Security has the following challenges for CISOs & Practitioners.

For CISOs, the challenges:

AI Risk Education: CISOs need to become more educated on the risks associated with using AI, particularly around third-party risks, as most language models are provided by external vendors.

Visibility of AI and Data: AI is challenging traditional data classification practices, leading to concerns about data sprawl and access. New conversations are emerging around "shadow AI" and the use of open-source AI within organizations.

For cybersecurity practitioners, the challenges are twofold:

Understanding the Threat Landscape: Practitioners face difficulties understanding the evolving AI threat landscape and determining the necessary security controls. These include:

Known Security Requirements: These include establishing data policies, ensuring encryption, managing access control, and securing APIs, particularly as AI interactions primarily occur through APIs.

Unknown Security Risks: Concerns exist about third-party services, such as data warehouses used in AI training, and the potential for misaligned focus within AI security efforts.

Security Vendor Landscape Discussion

We found Gartner’s latest report on AI Impacts on cybersecurity and the CISO to be the most comprehensive look at the relevant attack surfaces that exist in enterprises today. Using the framework from Gartner, we slotted the relevant companies securing the surface area in accordance with their segments.

Below, we discuss all the companies across the different categories:

Governance

Governance is a crucial first step in implementing AI security for enterprises. It establishes robust frameworks that define roles, responsibilities, and policies, ensuring compliance with regulations and ethical guidelines while promoting transparency and explainability of AI models. IT risks in AI governance encompass both traditional vulnerabilities and new considerations introduced by generative AI, such as IP infringement and toxic outputs. The proliferation of AI regulations globally, with over 58 countries presenting their own approaches, further complicates this landscape.

Effective AI governance requires a two-pronged strategy: 1) Gain a comprehensive understanding of GenAI usage across the organization, and 2) Develop and implement well-defined policies to map and mitigate associated risks. This involves tracking AI licenses, identifying internal AI projects, and monitoring third-party AI integrations to create a secure AI ecosystem.

Implementing access rights: Knostic AI

There are vendors focused on helping companies implement governance around access rights and controls across their SaaS apps and non-human identities. Solutions from Knostic ai, Antimatter.io, and Protopia AI are crucial for ensuring proper access configurations for large language model data and maintaining robust data security. For example, Knostic is a provider of need-to-know-based access controls for LLMs. With knowledge-centric capabilities, Knostic enables enterprises to securely adopt LLM-powered enterprise search and chatbots by addressing the problem of oversharing data. When customers roll out LLM-powered enterprise search tools such as Copilot for M365, business leaders are surprised when the search tools provide anyone answers to sensitive questions such as "What are people's salaries?" and "What are the most recent M&A due diligence results?" Knostic ensures employees can access the knowledge they need, but only within their need-to-know boundary.

There are several vendors within compliance and governance, including Arthur.ai, Askvera, Fairnow, Cranium, Credo AI, Holistic AI, and Knostic.

Model Lifecycle (Build To Runtime)

Many vendors have emerged as wanting to provide end-to-end visibility across every aspect of the AI/LLM lifecycle (ie. covering testing, evaluation, performance monitoring), especially across multi-model environments. Examples include Prompt Security + Portal26 + Lasso Security + Troj.AI + Hidden Layer + Robust Intelligence. Below we take one vendor as a sample case study.

Example of An End-to-end AI Security Solutions

Lasso Security

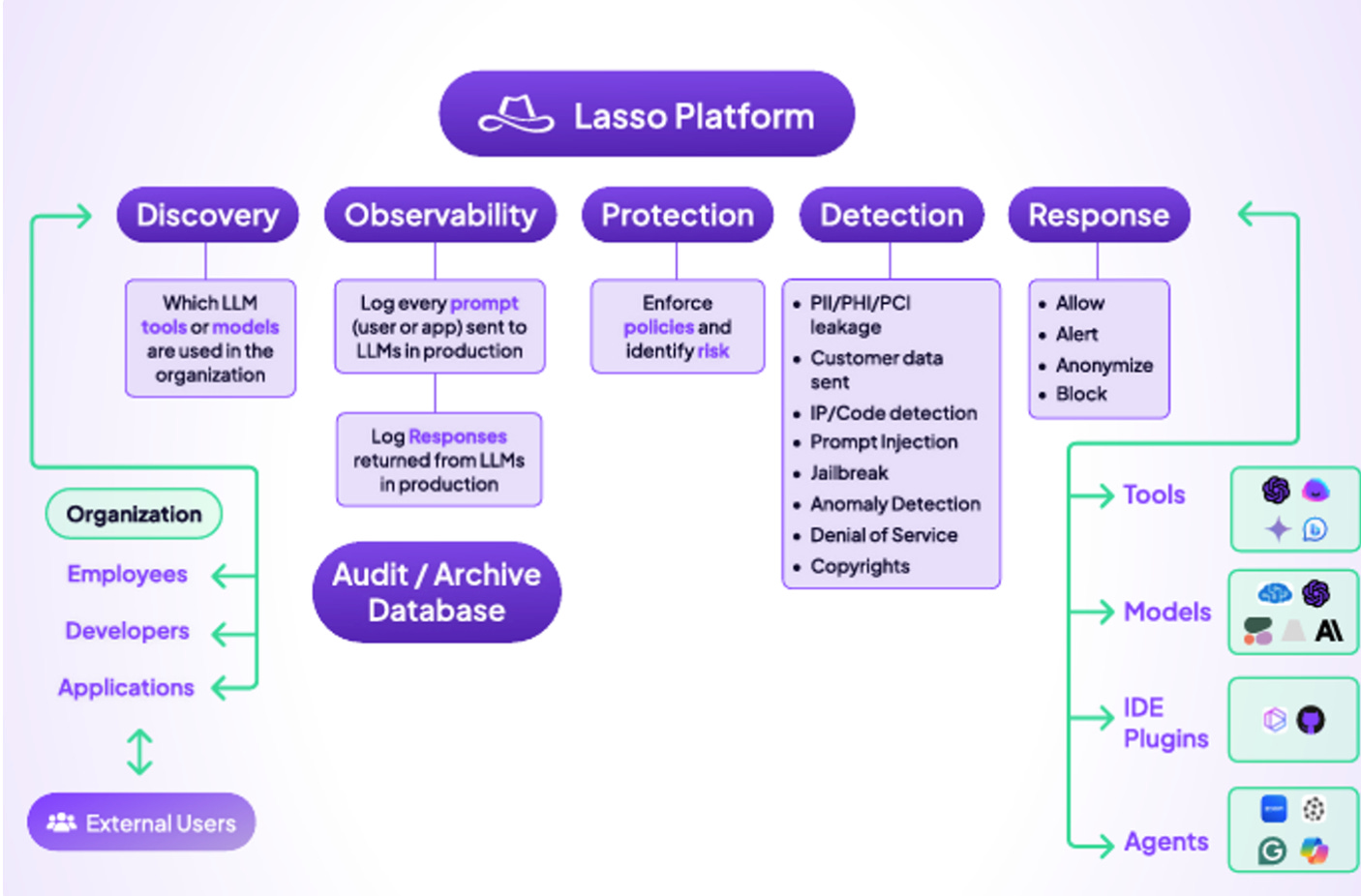

Lasso Security is a LLM/AI/GenAI security company that emerged from stealth in November 2023. Lasso Security’s approach centers on its ability to operate between LLMs and a variety of data consumers, including internal teams, employees, software models, and external applications. By monitoring every interaction point where data is transferred to or from an LLM, Lasso can detect anomalies and policy violations, regardless of the deployment methods used by the organization. This monitoring capability is part of a broader platform approach that spans from discovery and observation to detection and response, providing comprehensive coverage across the GenAI/LLM stack.

Platform Approach

Lasso is taking a platform approach to solving the problem. They want to build a platform that covers many areas of the GenAI/LLM stack. Their platform is structured around several key components:

Discovery: The Shadow AI discovery feature identifies and manages AI assets within an organization.

Monitoring & Observability: Continuous monitoring of LLMs and data flow to ensure observability.

Protection: Identifying the risk the organization has and applying relevant policies to mitigate and control these risks.

Detection: Real-time detection and alerting systems designed to protect against threats, such as PII/IP risks for employees and injection attacks in applications, powered by an ensemble of classifiers and AI or LLM models that can address different business use cases

Response: End-to-end real-time dynamic response that aligns with organizational security policies.

Lasso Security’s approach emphasizes the importance of a unified platform that integrates seamlessly with existing tools and processes rather than relying on a fragmented array of security solutions. This platform approach is mainly focused on runtime protection, addressing the real-time challenges that arise when AI/LLM models are deployed in production environments. Lasso Security identifies this area as one of the significant risks where traditional security mechanisms may be inadequate.

One of the core components of Lasso Security’s offering is its Context-Based Access Control (CBAC), which allows customers to set up knowledge-based access control management and sensitive data leak prevention with only a few clicks. This replaces the old methodology of relying on pattern or attribute-based access control, which got broken in the world of GenAI. This allows organizations to harness the full power of Retrieval-Augmented Generation (RAG) while maintaining stringent access controls.

Supporting multiple deployment methods

They have designed their platform to be flexible and adaptable, supporting various classifiers, including basic ones (e.g., regex-based), natural-language-based, context-based (powered by LLMs and SLMs), and custom models that are fine-tuned for specific business needs. This adaptability extends to the platform’s ability to function across multiple deployment methods, such as secured gateways, browser extensions, and IDE plugins. By leveraging API access to classifiers, Lasso enhances its detection capabilities, making the platform a valuable asset for organizations aiming to secure their AI deployments.

In the broader context of industry practices, Lasso Security is actively involved in regulatory discussions, contributing to the development of guidelines and standards that align with the evolving landscape of AI security. Their engagement with regulators reflects a commitment to ensuring that industry practices are in step with legislative requirements, particularly as the use of AI and LLMs becomes more pervasive across various sectors.

In summary, Lasso Security is developing a comprehensive platform designed to address the security challenges associated with AI and LLM deployments. Their focus on runtime protection through diverse technology interception points - from firewall, to browser extension and IDE plugin - positions them as a key player within the AI security market. As the market and real-world AI deployments rapidly change (hence the brand name - a Lasso to control the AI wild wild west), Lasso has built a strong infrastructure foundation to ensure they can support their customers' AI environments as they adapt.

HiddenLayer

HiddenLayer has emerged as a popular vendor for secure AI models throughout the MLOps pipeline. It provides detection and response capabilities for both Generative AI and predictive AI models, focusing on detecting prompt injections, adversarial attacks, and digital supply chain vulnerabilities. HiddenLayer stands out with its agent-based security for AI platforms, including its AI model scanner and AI detection and response solutions. The platform effectively identifies security vulnerabilities across predictive and generative AI workloads. The company has prioritized becoming a form of “EDR” (Endpoint Detection and Response) solution that monitors, detects, and responds to attacks on AI models in runtime environments. Their solution can be deployed on-prem, SaaS or in a hybrid mode. This focus is reflected in the growing market traction of their managed AI detection and response product. Their approach is further strengthened by a comprehensive threat research and vulnerability database, which informs product development and decision-making. From our network of conversations, it's clear that HiddenLayer is gaining significant momentum in the sector, particularly due to its differentiation in efficacy and enterprise scalability.

Application Layer

Prompt Security are focused on building for the three use cases: securing employees (via browser), securing developers (via Agent), and LLM applications via SDK/proxy. We see the company as one of the early leaders in the life-cycle and AI application security modules.

Protect AI is one of the first movers in the space is building an end-to-end platform, starting with the application layer and continuing with full end-to-end model development. They focus on providing Zero trust in the models themselves and host red teaming capabilities.

Pillar security provides a unified platform to secure the entire AI lifecycle - from development through production to usage. Their platform integrates seamlessly with existing controls and workflows and provides proprietary risk detection models, comprehensive visibility, adaptive runtime protection, robust governance features, and cutting-edge adversarial resistance. Pillar's detection and evaluation engines are continuously optimized by training on large datasets of real-world AI app interactions, providing highest accuracy and precision of AI-related risks.

Troj.ai is focused on enterprises and delivered via software installed in a customer environment (on-prem or cloud). They are focused on enterprises building their own applications using any model (custom or off the shelf). Their solution particularly focuses on understanding the inputs and outputs coming from the model and securing them at runtime. They assess risk with models at build time (with pentesting) and protect them in runtime by analyzing the prompts to and from the model. A core premise behind their solution is that most enterprises want their models closer to the user and they don’t want their data leaving the enterprise.

Mindguard’s core strength lies within its AI red teaming platform that identifies risks, provides standardized assessments, and offers effective mitigations for both Generative and Predictive AI systems. They help run secure AI applications with compliance and responsibility at the forefront. Their solution can enable security teams to dynamically red team any AI model hosted anywhere – on public model hubs like HuggingFace, your internal platforms, or your favorite cloud provider.

Swift Security is focused on protecting three types of use cases: LLM applications, developers using AI, and LLM applications themselves. You can deploy Swift as an inline proxy; they’re also focused on inspecting data in motion for greater security.

Apex Security has architected the product to cover use cases ranging from data leakage (employees) to AI exploits and prompt injections, which typically happen at the application layer. The product today is currently based on an agentless architecture, and it can plug into many cloud environments quickly, which helps it bolster its detection engine.

Calypso is focused on securing the LLM, and AI models themselves rather than the employees. The company acts as a proxy via API between multiple LLMs and companies’ applications. It can deploy on-prem and in the cloud without interfering with latency and provides complete visibility.

Radiant AI sits in between the model layer and the application layer. While Radiant isn’t directly competing for security budget as they help teams identify outliers within the models for performance in addition to security, they see some pull into security due to their Model Gateway, which sits in between sandbox environments and AI models.

Liminal like many of its peers, Liminal focuses on securing employees' interactions with AI/LLMs via the browser in addition to desktop applications, explicitly helping companies deploy AI applications securely.

Unbound Security is focused on accelerating enterprise adoption of AI usage through comprehensive visibility and control of AI apps. It has a browser plugin to monitor employee usage of AI apps and an AI gateway for application-based interactions. Unbound solves for shadow AI by not just cataloging AI apps in use, but by proactively steering employees to IT sanctioned AI apps in each category. Unbound also performs Just-in-Time employee-led remediation when employees attempt sharing sensitive information.

Witness-AI focuses on the secure enablement of AI across the model lifecycle for large enterprises. They offer data security, observability, data loss prevention (DLP), redaction of PII, role-based access control (RBAC) over models, network traffic filtering, and policy enforcement. Witness provides three core capabilities

Witness Observe: Monitors and audits AI activity with full visibility into applications and usage, identifying potential risks.

Witness Control: Enforces policies on data, topics, and usage to ensure compliance and security.

Witness Protect: Secures data, networks, and people from misuse and attacks.

Their solution monitors how employees use AI on the network, specifically tracking the inputs and outputs of data, with a focus on filtering and managing data flow securely. Witness enforces its solution at the network layer without the need for agents, leveraging third-party proxy chaining through vendors like Palo Alto Networks, Zscaler, and Netskope. This allows them to operate independently across various network security setups. What sets Witness apart is their visibility into employee interactions with AI chatbots. They provide a catalog detailing how models are used, the data involved, and the intent behind employee interactions, offering valuable insights for enterprises.

Other players include AI Shield, Meta, Cadea, AppSOC, Credal, Encrypt AI, etc.

Data Privacy, Data Leaks Prevention, and Model Poisoning

Vendors like Antimatter, Gretel, Skyflow, BigID, Bedrock Security, and Nightfall AI are leading the charge in securing LLMs.

Enkrypt AI ensures that personally identifiable information (PII) is redacted before any prompt reaches an LLM, while DAXA enables business-aware policy guardrails on LLM responses.

Antimatter manages LLM data by implementing robust access controls, encryption, classification, and inventory management.

Protecto secures retrieval-augmented generation (RAG) pipelines, enforces role-based access control (RBAC), and assists in redacting PII.

For synthetic data protection, companies like Gretel provide API support to train GenAI models with synthetic data and validate use cases with high quality. Tonic, on the other hand, de-identifies datasets by transforming production data for training purposes while maintaining an updated pipeline of synthetic data.

The team at Jump capital goes into more detail in a piece we highly recommend reading to see more.

Observability

Businesses require comprehensive monitoring and logging of AI system activities to detect anomalies and potential security incidents in real-time. Continuous monitoring of AI models is essential for identifying drifts, biases, and other issues that could compromise security and reliability. A few of the startups here include

Lasso and Helicone offer monitoring solutions. Meanwhile, vendors like SuperAlign provide governance for all models and features in use, tracks compliance, and conducts risk assessments.

Lynxius focuses on evaluating and monitoring model performance, particularly for critical parameters like model drift and hallucinations.

Harmonic Security helps organizations track GenAI adoption and identify Shadow AI (unauthorized GenAI app usage). It prevents sensitive data leakage using a pre-trained "Data-Protection LLM." Their innovative approach engages directly with employees for remediation rather than simply alerting the security team.

Using Enterprise Browser Extensions To Manage Employee Use of AI

LayerX is an enterprise browser extension that seamlessly integrates with any browser, transforming it into a secure and manageable workspace without compromising user experience. LayerX provides continuous monitoring, risk analysis, and real-time enforcement on any event and user activity during browsing sessions. LayerX provides a wide range of security components for the enterprise, including GenAI Data protection.

Managing AI Usage

LayerX’s enterprise browser extension offers various protection levels tailored to AI usage. It can manage access to all GenAI tools (as well as all websites and browser extensions in general), control actions like copying and pasting, and enforce data input policies through a data policy engine that determines what data is permissible to enter. The extension can block actions, issue alerts, and warn users when certain thresholds are exceeded.

LayerX’s solutions help companies understand and manage how employees interact with AI. This includes tracking AI-related websites, preventing data leakage, managing ShadowAI usage, and offering insights in the event of an incident involving AI use on a browser. Additionally, it provides tools to manage identity rotation and enforce AI-related policies and protocols.

Core Features around AI Use

LayerX enhances web browsing security with robust encryption that secures all interactions, including those involving generative AI (GenAI). Its policy engine, using both machine learning and regex-based classification, prevents unauthorized data submissions and detects sensitive information.

The platform’s site-blocking capability identifies and disables risky browser extensions, including those mimicking ChatGPT, while still allowing safe usage. LayerX also auto-detects and classifies new GenAI websites, applying appropriate security measures.

Control over browser extensions extends to blocking malicious or GenAI-specific add-ons, protecting data within SaaS applications. Additionally, LayerX provides real-time user training, offering just-in-time guidance on secure GenAI usage.

LayerX supports all browsers in every mode, with real-time detection and blocking of unauthorized browser activities, ensuring comprehensive coverage and security.

Future Predictions / Key Takeaways

We remain excited about the possibility of what AI security will be in the future. Compared to other “bubbles,” AI security, while still nascent in terms of actual need, prioritization for the CISO, and long-term viability, is very much a clear “buy” vs build. So, we believe that there will be concentrated winners and a handful of losers. The latter will lead to heavy M&A, and we anticipate we’ll see some of this towards the second half of FY2025. Why the second half of FY2025? By then, we’ll have clarity into real-production (customer-facing) LLM applications and whether or not they’ll be widely used. Some predict or that AI will still be speculative when measuring the impact from productivity to costs.

On the consolidation front, you’ll expect to see familiar names such as PANW, Zscaler, Cisco, and other large incumbents. We believe that with the pace of innovation happening in LLMs and AIs—and with it AI security—larger incumbents will be propelled to buy several start-ups in the space that have the agility to keep up with the market need and, in addition to the talent. Here, we anticipate that teams will get acquired based on their reasonable AI/ML and security talent.

Next year will be telling; we’ll get a sense of how much AI actually goes into production. Between the board-level pressure of using AI to deploying it securely, it’s a tailwind that many companies are riding; call it “first base,” and to get to “second base,” AI security companies will need to develop the most frictionless products that don’t get in the way of production, ensure no latency is introduced, and importantly, give complete visibility into what is happening both across employees and applications.

We’re early, but things are becoming more real. As for security, what an exciting time – to be living through yet another sizeable tectonic shift, which it will unlock productivity and business while also introducing vulnerabilities, some of which are starting to become known, while others will be new entirely. We’re excited to see this space unfold and hopefully, revisit this in the years to come to see how much we got wrong and if we’re lucky, how much we got right.

Thank you for reading! Thank you to partners like Lasso and LayerX for supporting the Software Analyst research platform.

SUBSCRIBE FOR FUTURE REPORTS

I have more in-depth cybersecurity research reports on market categories and the leading vendors across Identity, network, Security Operations Center (SOC), cloud, and application security. If you’re a security leader evaluating solutions, please feel free to reach out.