Securing AI/LLMs in 2025: A Practical Guide To Securing & Deploying AI

A Detailed Product and Vendor Guide on How to Secure AI in 2025 for Practitioners and Security Leaders

The speed of recent developments in AI has been mind-blowing since the start of 2025. The primary barriers to widespread enterprise AI adoption involve balancing multiple considerations, including cost, risks of hallucinations, and security concerns. Enterprises are at a crossroads right now : deploy AI or fall behind. Cybersecurity has the opportunity to drive meaningful business results by helping companies deploy secure AI. This detailed report enables you to get there!

Actionable Summary

Outline / Key objectives of this report: In 2024, SACR wrote a deep dive on how enterprises could secure their AI. This report is version 2, incorporating key market insights from the past 8 months. This report features many vendors that can help secure AI in the enterprise context, meaning they can help provide AI governance, secure data, and protect models. This report helps readers uncover the following:

Understand the state of AI adoption and risks as of 2025.

Understand market categorization and vendor focus areas as of 2025.

Provide clear recommendations for securely deploying AI based on our CISO conversations.

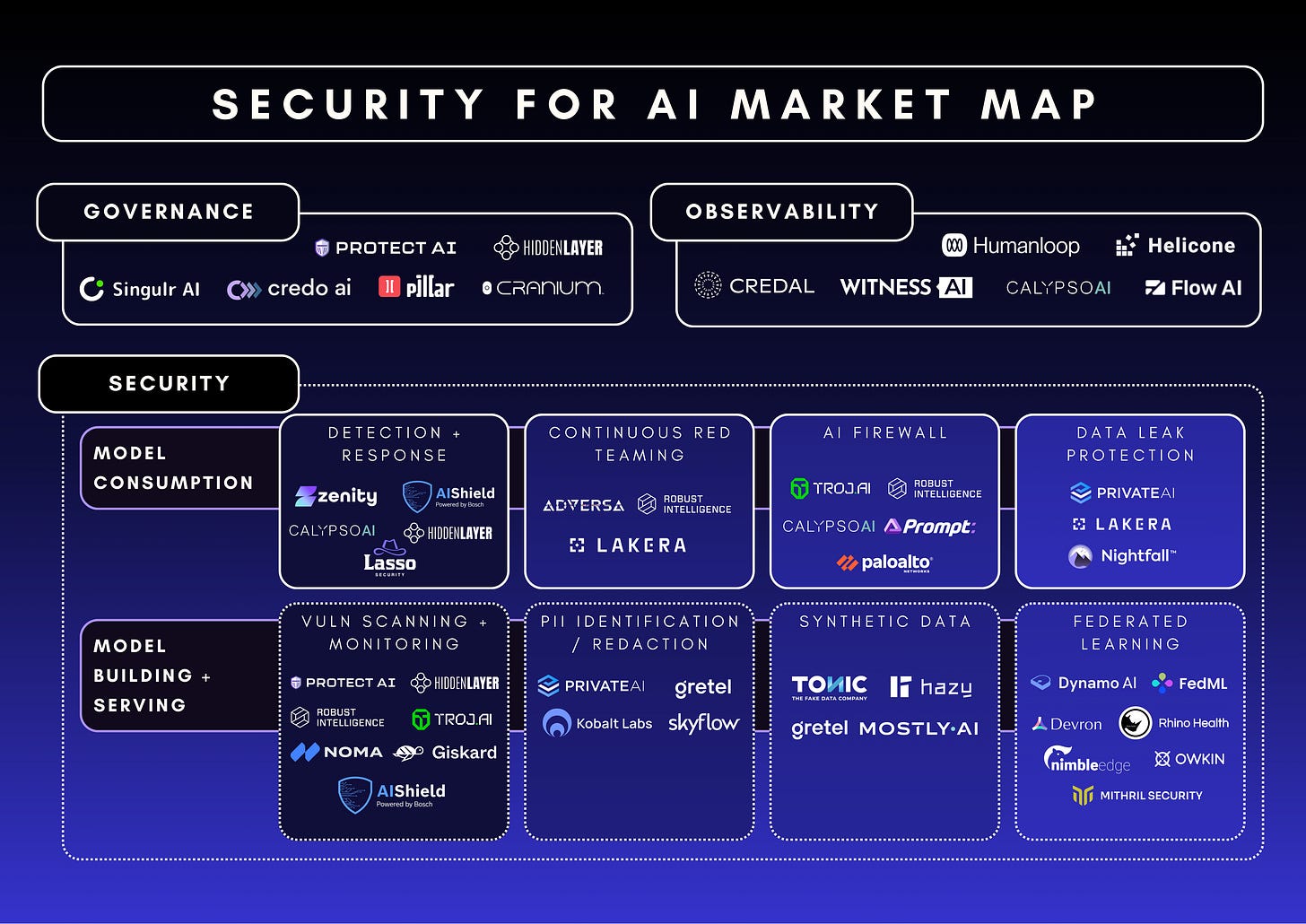

Security for AI Market solutions: Our research shows a significant overlap among vendor capabilities. Some vendors have significantly more capabilities, while others are relatively new entrants. Across the market, three core capabilities consistently emerged:

AI governance controls

Runtime security for AI

GenAI red teaming and penetration testing

AI Developments In 2025: Currently, the most prominent security risks for enterprises are related to DeepSeek. SACR’s research indicates that enterprise AI adoption continues to accelerate, significantly driven by advancements such as DeepSeek R1. Many organizations still favor managed AI services due to their simplicity of deployment. Although open-source solutions have made notable progress, most enterprises still prefer closed-source models. Nonetheless, there's a noticeable rise in organizations self-hosting models, driven by the need for complete data privacy and full control over the model lifecycle. This report helps practitioners understand how to secure AI and the market solutions currently on the market.

Recommendations for enterprises:

Data security controls: Organizations must prioritize foundational data security controls before deploying AI technologies.

AI preventative security & governance controls: Organizations should implement discovery and cataloging solutions for AI, specifically tracking location, usage purpose, and responsible stakeholders within the enterprise.

AI runtime security: This area presents the largest opportunity for improving enterprise AI security. We demonstrate the gaps and limitations of existing cybersecurity controls when addressing AI-specific risks and concerns.

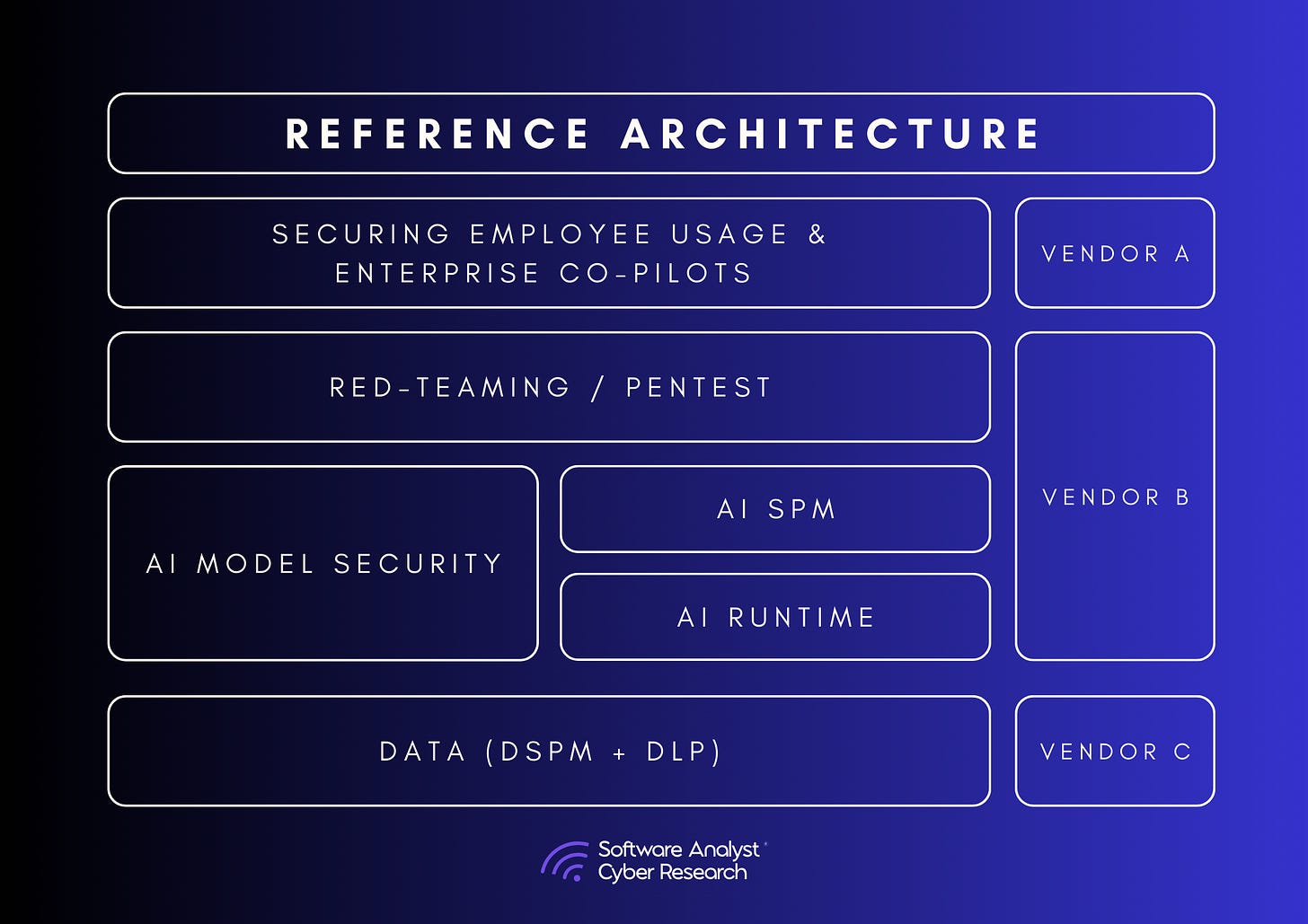

Market categorization: Based on our extensive work, SACR concluded that most vendors fall into two primary categories for securing AI:

Securing employee AI usage and enterprise agents

Securing the AI product and application model lifecycle

Illustrative Security for AI Market Categorization

Research Collaborators

Allie Howe is the Founder of Growth Cyber. Growth Cyber helps companies build secure and compliant AI. Allie also serves as a contributor to the OWASP Agentic Security Initiative, whose goal is to provide actionable guidance to the public on securing agentic AI.

Perspectives

Zain Rizavi, an investor at Ridge Ventures, for sharing his perspectives

CISOs and security leaders from a handful of companies

17+ vendors who actively contributed insights throughout the development of this research

Research partners

This report was developed in collaboration with nine representative vendors: Palo Alto Networks, Protect AI, HiddenLayer, Noma, Pillar, TrojAI, Prompt, Zenity, and Witness AI.

Structure of the report

State of Enterprise Adoption of AI In 2025

Enterprise Risks In Developing AI In 2025

Existing Cybersecurity Controls & Limitations

Market Solutions: How Organizations Should Secure AI

The Landscape for Security for AI

Detailed Breakdown For Vendor Market Offerings

Appendix of Market Solutions

We recommend opening the report on a browser for a better reading experience.

Introductory Blurb

AI has brought significant change and opportunity to enterprises. In 2023, AI emerged and some organizations became early adopters. In 2024, a significant increase in enterprise AI adoption occurred, but nothing previously observed will compare to the wave coming in 2025. Many companies have been hesitant to adopt AI due to security and privacy risks. However, in 2025, organizations that have not adopted AI, will no longer be able to remain competitive if they want to keep up with competitors. Organizations must now actively pursue AI adoption.

Enterprise Benefits of AI - Cost Savings & Spend: For many enterprises, the return on investment from adopting AI is primarily cost savings rather than new revenue opportunities. Enterprise spend on AI is expected to increase by around 5% in 2025. To put this in perspective, IBM states that companies plan to allocate an average of 3.32% of their revenue to AI—equivalent to $33.2 million annually for a $1 billion company.

Challenges of AI Adoption: Adopting AI, whether LLMs, GenAI, or AI Agents, is not without significant challenges. AI decision makers including CISOs, CIOs, VPs of Data, and VPs of Engineering cite concerns about data security, privacy, bias, and compliance. Several lawsuits have resulted in financial losses and reputation damage for companies that got AI implementations wrong. For example, an Air Canada chatbot gave a customer misleading information about bereavement fares and was later ordered to provide a refund to the customer. In another case, a South Korean startup leaked sensitive customer data in chats and was fined by the South Korean government approximately $93k. While these amounts can seem like “slap on the wrist” type of fines, it can be much worse. In February 2023, Google lost $100 billion in market value after its Bard AI chatbot shared inaccurate information.

One of the leading CISOs we spoke to:

“This is the first time security has become a business driver—a case where security actively supports business speed. Artificial Intelligence (AI) and Large Language Models (LLMs) are transforming industries, improving efficiency, and unlocking new business opportunities. However, rapid adoption presents significant security, compliance, and governance challenges. Organizations must balance AI innovation with risk management, ensuring regulatory alignment, customer trust, and board-level oversight. Without a structured security strategy, companies risk exposing sensitive data, enabling adversarial manipulation, and eroding stakeholder confidence. AI security is no longer solely an operational concern; it is a strategic imperative directly impacting enterprise risk, regulatory exposure, and long-term business viability.”

State of Enterprise Adoption of AI In 2025

Adoption of AI Rising into 2025 From 2024

As mentioned earlier in the report, the market for enterprises wishing to invest in AI is growing rapidly. The AI Global GenAI Security Readiness Report states that 42% of organizations are actively implementing LLMs across various functions, while another 45% are exploring AI implementation. Enterprises are adopting AI across multiple industries, with notable increases in adoption within legal, entertainment, healthcare, and financial services. According to Menlo Ventures’ 2024 State of Generative AI in the Enterprise report, AI spending for these industries ranged from $100 to $500 million. The report highlights other significant enterprise GenAI use cases including code generation, search and retrieval, summarization, and chatbots.

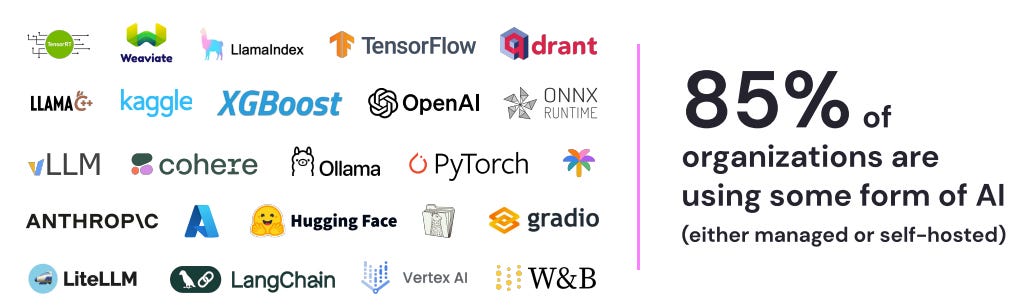

Across enterprises, AI adoption continues to grow, with over 85% of organizations now using either managed or self-hosted AI in their cloud environments, reflecting continued but stabilizing adoption rates. Managed AI services, in particular, are currently utilized by 74% of organizations, up from 70% in the previous year.

The Debate Over Open vs Closed Source Models

Enterprises started off using mostly closed-sourced models but are increasingly moving toward open-source options. A report by Andreessen Horowitz titled 16 Changes to the Way Enterprises Are Building and Buying Generative AI predicts that 41% of interviewed enterprises will increase their use of open-source models in their business in place of closed models. The report also predicts an additional 41% will switch from closed to open models if open-source models match closed-model performance.

Despite increased competition, OpenAI and Azure OpenAI SDKs continue to dominate, with more than half of organizations deploying them in their environments. PyTorch, ONNX Runtime, Hugging Face, and Tiktoken round out the top five AI tools used in the cloud.

The advantages of closed-source models explains why it remains the top choice. These benefits include vendor support, predictable release cycles, increased security due to dedicated teams’ restricted codebase access, and integration with vendor-provided infrastructure.

Open Source Continues To See Momentum

The advantages of open-source models include lower costs due to the absence of licensing fees, rapid innovation from a global community, customization, and transparency, potentially driving increased adoption among enterprises. Many of the most popular AI technologies are either open-source or tightly linked to open-source ecosystems. BERT remains the most widely used self-hosted model (rising to 74% adoption from 49%) among self-hosting organizations, while Mistral AI and Alibaba’s Qwen2 models have newly secured a place among the top models.

Open-source momentum fueled by DeepSeek’s cost savings

The interest in open source models has further intensified following disruptions caused by DeepSeek. DeepSeek achieved similar results as OpenAI’s O1. According to DeepSeek, DeepSeek-R1 scored 97.3% on the MATH-500 benchmark, slightly outperforming o1's at 96.4%. On Codeforces, a competitive coding benchmark, DeepSeek-R1 earns a rating of 2029 (96.3% percentile), while o1 scores slightly higher at 2061 (96.6% percentile).

While there are numerous security concerns surrounding DeepSeek-r1, its performance demonstrates that open-source models are highly competitive with closed-source models in terms of both performance and cost. Enterprises are unlikely to overlook the cost-saving opportunities presented by open-source models. Therefore, AI security vendors have a strategic opportunity to capitalize on this by addressing and mitigating security concerns associated with open-source model adoption.

Open vs closed source models predictions

Andreessen Horowitz predicts an even divide emerging in the use of open and closed models. This represents a significant shift from 2023 when market share was 80%–90% closed-source. Teams choosing closed-source typically adopt licensed models, paying fees and establishing agreements that restrict model providers from using ingested company data for training. Teams choosing open-source models must emphasize AI model lifecycle security, ensuring models produce expected outputs and are resistant to jailbreaks.

Cloud service providers (CSPs) can influence model choices

Andreessen Horowitz’s report indicates a high correlation between CSP selection and preferred AI models: Azure users generally preferred OpenAI, while Amazon users preferred Anthropic or Cohere. Their chart below shows that, of the 72% of enterprises using an API to access models, over half use models hosted by their CSP. This preference likely reflects enterprises' familiarity with their CSP as a data subprocessor and acceptance of associated risks.

Managed vs. self-hosted

While many companies rely on managed AI offerings—increasing from 70% to 74% in 2025—the report highlights a dramatic rise in self-hosted AI adoption, jumping from 42% to 75% year over year. This surge stems from both AI capabilities embedded in third-party software and organizations seeking greater control over their AI deployments. However, this shift toward self-hosted AI requires robust governance to protect cloud environments.

Security Implications

Organizations face numerous choices related to AI procurement and implementation. This extends to AI security procurement, where CISOs prioritize solutions which maintain data privacy by operating within existing architecture rather than relying on external third parties. Both CISOs and product executives seek configurable AI security products, allowing teams to customize detection settings for different projects. For instance, one project might require heightened sensitivity to PII leakage, while another requires enhanced monitoring of toxic responses.

The bottom-line is, AI and model security represent a rapidly evolving domain. A common theme among CISOs, CIOs, and product and engineering leaders is the demand for AI security products capable of rapid innovation to keep up with the evolving space. CISOs emphasize that actual AI threats may not become fully apparent for another 2-5 years; thus, it is critical for AI security products to continuously address emerging threats.

Enterprise Risks In Developing AI In 2025

Based on our research, there is no shortage of risks that organizations are facing coming into 2025 — specially given significant progress by Chinese companies like DeepSeek. These 3 frameworks have been the most popularly used to categorize and understand risks for companies.

Industry Frameworks for AI Threats

OWASP Top 10 for LLMs and Generative AI: CISOs rely on the OWASP Top 10 framework to identify and mitigate key AI threats. This framework highlights critical risks like prompt injection (#1) and excessive agency (#6), helping teams implement proper input validation and limit LLM permissions.

MITRE's ATLAS: MITRE's Adversarial Threat Landscape for AI Systems (ATLAS) framework provides a comprehensive matrix of potential AI threats and attack methods. The framework outlines scenarios like service denial and model poisoning. Other key resources include Google's Secure AI Framework, NIST's AI Risk Management Framework, and Databricks' AI Security Framework.

GenAI Attacks Matrix: A knowledge source matrix documenting TTPs (tactics, techniques, and procedures) used to target GenAI-based systems, copilots, and agents. Inspired by frameworks like MITRE ATT&CK, this matrix assists organizations in understanding security risks applicable to M365, containers, and SaaS.

More broadly, we would categorize the risks that enterprises face into two key areas:

Employee Activity and safeguarding AI usage

Securing the lifecycle of homegrown applications

Risks Around Securing AI Usage, Activity and Guardrails

In recent months, enterprises integrating AI tools like Microsoft Copilot, Google's Gemini, ServiceNow, and Salesforce have encountered significant security challenges. A primary concern is over-permissioning, where AI assistants access more data than necessary, leading to unintended exposure of sensitive information. For instance, Microsoft Copilot's deep integration with Microsoft 365 allows it to aggregate vast amounts of organizational data, potentially creating vulnerabilities if permissions aren't carefully managed.

The following represent prominent risks identified as of 2025, primarily focused around AI usage, data exposure, and policy violations:

Data Loss and Privacy Concerns: Organizations seek to better understand risks associated with unauthorized data exposure through AI systems. This is particularly critical for organizations in regulated sectors like finance and healthcare, where data breaches may result in significant regulatory penalties and reputational damage. In 2025, companies processing sensitive data through AI applications are actively evaluating and implementing robust safeguards to ensure compliance with HIPAA, GDPR, and other regulations.

Shadow AI Proliferation: A major challenge for enterprises in 2025 is the widespread adoption of unauthorized AI tools by employees. This issue is particularly relevant due to new Chinese AI tools employees are adopting informally. These unsanctioned AI applications, including widely-used chatbots and productivity tools, pose significant security risks as they operate outside organizational control. While LLM firewalls and other protective measures have emerged to address this issue, the rapidly expanding AI landscape makes comprehensive monitoring increasingly complex. Organizations must balance innovation with security through clearly defined AI usage policies and technical controls.

Securing AI Lifecycle of Building Homegrown Applications

Why Securing the AI Lifecycle Differs from Traditional Software Development (SDLC)

Securing the AI development lifecycle presents unique challenges that distinguish it from traditional software development. While conventional security measures like Static Application Security Testing (SAST), Dynamic Application Security Testing (DAST), and API security remain relevant, the AI pipeline introduces additional complexities and potential blind spots:

Expanded Attack Surface: Data scientists often utilize environments like Jupyter notebooks or MLOps platforms operating outside traditional Continuous Integration/Continuous Deployment (CI/CD) pipelines, creating potential security blind spots often overshadowing traditional code. AI systems face specific threats, including data poisoning and adversarial attacks, where malicious actors introduce corrupt or misleading data into training datasets, compromising model integrity.

Much More Complex AI Lifecycle Components: The AI development process encompasses distinct phases—data preparation, model training, deployment, and runtime monitoring. Each phase introduces unique vulnerabilities and potential misconfigurations, necessitating specialized security checkpoints.

Supply Chain Security and AI Bill of Materials (AI-BOM): AI applications often rely on vast amounts of data and incorporate open-source models or datasets. Without meticulous vetting, these components can introduce vulnerabilities, leading to compromised AI systems. Given the intricate dependencies—including scripts, notebooks, data pipelines, and pre-trained models—there's a growing need for an AI Bill of Materials (AI-BOM). This full inventory aids in identifying and managing potential security risks within the AI supply chain.

Without extensively diving deep, others include monitoring RAG access, governance and compliance issues and runtime security requirements.

Illustrative breakdown of the AI development lifecycle:

The AI development lifecycle introduces distinct security challenges requiring careful consideration.

It is important to outline clearly the core steps for building and developing AI models:

Data Collection & Preparation: Gather, clean, and preprocess data, ensuring quality and compliance. Split data into training, validation, and test sets while applying feature engineering.

Model Development & Training: Choose the right model type and framework, train it using optimization techniques, and fine-tune for accuracy. Address bias, fairness, and security risks like adversarial attacks.

Model Evaluation & Testing: Assess performance with key metrics, conduct adversarial testing, stress test for edge cases, and ensure explainability. Detect data poisoning, backdoors, and model drift.

Deployment & Continuous Monitoring: Deploy the model via API, cloud, or edge devices, implement real-time security monitoring, automate retraining through MLOps, and audit AI decisions for compliance and security.

Risks associated with each stage of building AI Apps

Data Loss: Enterprises face significant security risks when developing AI applications. Data leakage is particularly concerning when using models that connect to the internet or lack contractual protections against training on customer data. These risks aren't theoretical—they're happening now. David Bombal recently proved this point in a viral post where he used Wireshark to catch Deepseek sending data to China. This example illustrates why organizations must thoroughly examine model providers' privacy policies and data handling practices.

Vulnerable Models: MLSecOps is emerging as organizations recognize that AI builders operate differently from traditional software teams. Machine learning engineers and data scientists work in specialized environments like Databricks and Jupyter Notebooks rather than standard development pipelines. These environments require monitoring for misconfigurations and leaked secrets. MLSecOps includes scanning models for vulnerabilities and backdoors—analogous to scanning software dependencies for supply chain security. This practice is vital because attackers have demonstrated they can download models from repositories, modify them to transmit data to malicious servers, and redistribute them for unsuspecting users to download.

Data Poisoning: Data poisoning is another risk that can lead to backdoor insertion. Orca Security released an intentionally vulnerable AI infrastructure called AI Goat for AI security education and awareness. One vulnerability demonstrated by AI Goat is data poisoning, which involves uploading new or modifying existing data that the model uses to train on, to change the model. Data poisoning is closely related to model poisoning, a threat allowing attackers to insert backdoors into models. Backdoors can cause deceptive behavior, which is difficult to detect. Anthropic researchers brought this to light last year, in a paper titled, “Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training”.

Missing Machine Learning Expertise on Security Teams: Deceptive behavior introduced through machine learning represents an important focal point for AI Security. Emmanuel Guilherme, AI/LLM Security Researcher at OWASP speaks to the complexities of machine learning and it’s impact on AI Security in this AI Global GenAI Security Readiness Report. Guilherme states, “The biggest obstacle to securing AI systems is the significant visibility gap, especially when using third-party vendors. Understanding the complexities of the ML flow and adversarial ML nuances adds to this challenge. Building a strong cross-functional ML security team is difficult, requiring professionals from diverse backgrounds to create comprehensive security scenarios”. The complexities of machine learning and its significant role in AI application development has led to a new term, MLSecOps, which is focused on baking in security during machine learning workflows such as data pipelines and notebooks.

Prompt Attacks & Jailbreaks: At AWS re:Inforce 2024, Stephen Schmidt, Chief Security Officer at Amazon, highlighted that AI applications require continuous testing. LLMs change as users interact with them, not only when new versions are released. Traditional security approaches focusing solely on shifting scanning left—evaluating code pre-release—will not suffice. AI applications require continuous security scanning. During runtime, AI applications may encounter prompt attacks such as direct prompt injections, indirect prompt injections, and jailbreaks. These attacks can lead to unauthorized access, data loss, or harmful outputs.

Existing Cybersecurity Controls & Limitations

Organizations currently employ three primary methods to secure AI systems. There is no one-size-fits-all approach. Typically, organizations use 2/3 of the methodologies below:

Model providers and self-hosted solutions

Incumbent security controls and vendors

Implement pure-play AI security vendors

Model Providers and Self-hosted Solutions

The first includes AI vendors like OpenAI, Mistral, and Meta. These companies embed security, data privacy, and responsible AI protections into their models during training and inference. However, their primary focus remains on building the most advanced and efficient AI models rather than prioritizing security. Consequently, many corporations seek an independent security layer capable of protecting all models across various environments.

Self-hosted models: As discussed earlier, some organizations choose self-hosted models primarily for enhanced security and data privacy, as sensitive information never leaves their controlled infrastructure. This approach has proven effective in industries with strict regulatory requirements like finance, healthcare, and government. By self-hosting, companies maintain full control over model updates, fine-tuning, and security protocols, reducing reliance on external providers that may introduce vulnerabilities.

Third-party models: These pose security risks because data must be transmitted to external servers, increasing exposure to breaches, policy changes, and vendor lock-in. Vendors fully using these cloud-based models tend to deploy additional independent security measures.

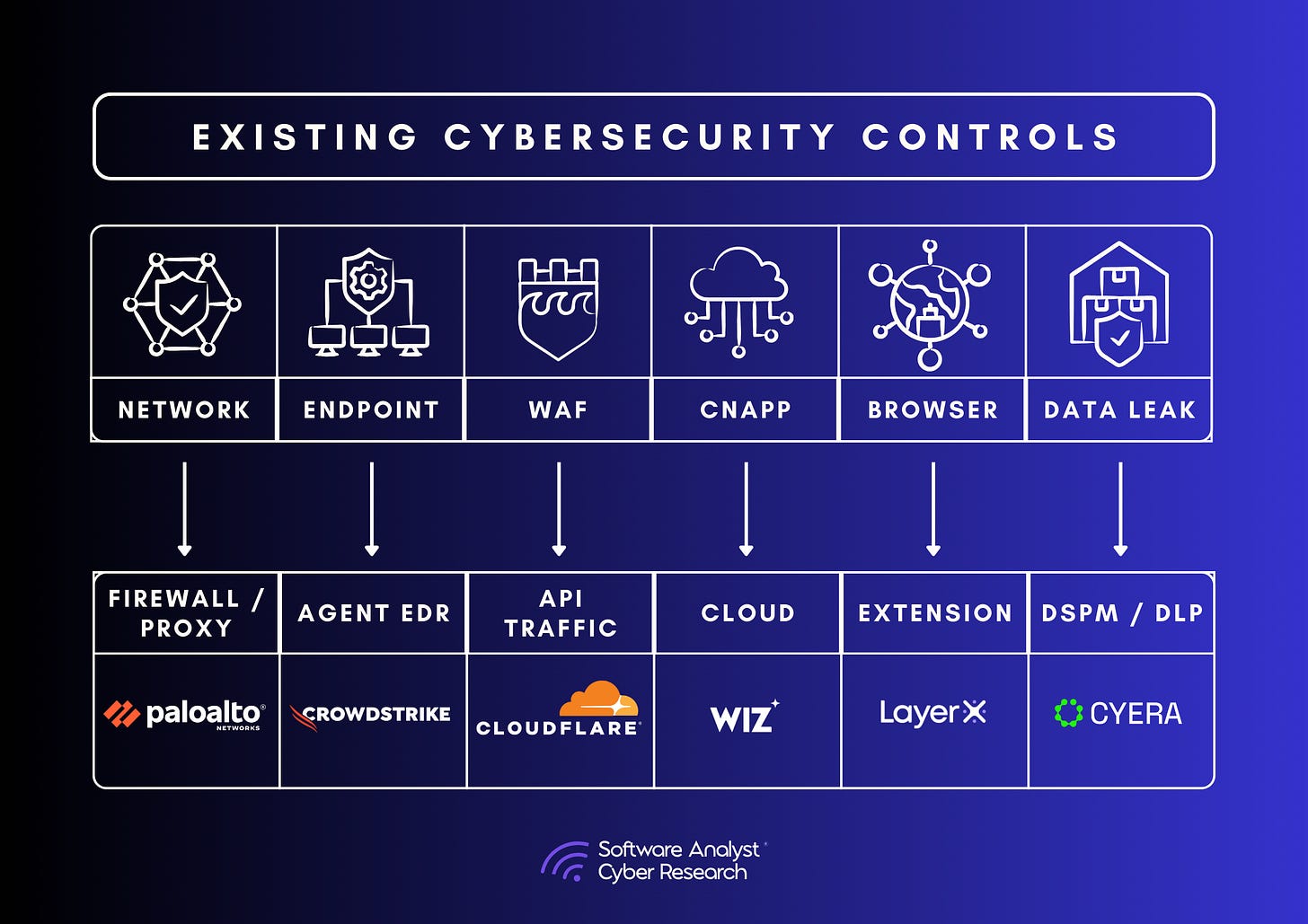

Incumbent Cybersecurity Controls

First of all, many companies already had some level of cybersecurity controls for protecting data, network and endpoints. Our research found that enterprises lacking dedicated AI security solutions typically rely on one or more of the existing controls below. However, as detailed below, each has strengths and limitations. It's essential to recognize both the strengths and limitations of these controls in the context of AI security.

Network Security

Firewalls / SASE: Traditional network security measures, including firewalls and intrusion detection systems, are essential to protect AI API endpoints and data traffic from unauthorized access and lateral movement. Some organizations implement AI-specific firewall rules to throttle high-risk API queries, such as rate-limiting LLM API requests to prevent model extraction. However, these measures often lack the capability to detect malicious AI prompts or protect against AI model manipulation, like backdoor insertions. Notably, vendors like Witness AI integrate with network security providers such as Palo Alto Networks to enforce AI-specific policies.

Web Application Firewalls (WAFs) can also rate-limit AI inference requests to prevent model scraping and mitigate automated prompt injection attacks. However, they lack AI-specific threat detection capabilities.

Cloud Access Security Brokers (CASBs) provide visibility and control over AI-related data flowing through cloud environments, making them highly effective for securing AI SaaS applications and preventing shadow AI deployments. However, CASBs do not protect AI models themselves, as they primarily focus on access governance and cloud data security.

Data Loss Prevention (DLP) & Data Security Posture Management (DSPM)

DLP and DSPM solutions are designed to protect sensitive information from unauthorized access or exfiltration. In AI systems, these tools help prevent accidental or intentional leaks of personally identifiable information (PII) or proprietary business data. However, they often fall short in addressing AI-specific threats, such as model exfiltration via API scraping or analyzing the intricacies of AI model logic. Additionally, without AI-aware policies, these tools may not effectively mitigate risks unique to large language models (LLMs), like prompt leakage.

Cloud Security (CNAPP & CSPM): Since AI models are frequently deployed on cloud platforms like AWS, GCP, or Azure, Cloud-Native Application Protection Platforms (CNAPP) and Cloud Security Posture Management (CSPM) tools identify misconfigurations. Many vendors have begun extending their capabilities to detect vulnerabilities specifically for cloud-centric AI models.

Endpoint Detection and Response (EDR): EDR solutions are effective in detecting malware and traditional endpoint threats. However, they do not typically monitor AI model threats, such as unauthorized inference attempts or API scraping activities aimed at extracting model functionalities.

Application Security Scanners (SAST/DAST): Static and Dynamic Application Security Testing tools are proficient at assessing traditional application vulnerabilities. Yet, they struggle to evaluate AI model behaviours or their resilience against adversarial attacks, though we’re seeing new vendors add controls.

Browser Security: While browser security measures can control the usage of AI chatbots, they do not contribute to securing proprietary AI models themselves. These controls are limited to user interactions and do not extend to the underlying AI infrastructure.

These established security vendors, originally focused on traditional areas, are beginning to detect some AI traffic and adjacencies. Some have started incorporating AI security features into their offerings. However, innovation in this area remains slow, and they have yet to deliver groundbreaking AI security capabilities. Additionally, because they cover a broad spectrum of security areas—including cloud, network, and endpoint security—AI security often becomes just one aspect of their portfolio rather than a dedicated focus.

Emerging Pure-Play Solutions

This third category comprises new entrants which are purpose-built to secure AI. Most of these companies focus on niche challenges, including securing AI use, protecting against prompt injection attacks, and identifying vulnerabilities in open-source LLMs. It is evident from the analysis, so far, that AI security is sorely needed, representing a large domain and array of problem sets in a rapidly evolving space.

Market Solutions: How Organizations Should Secure AI

This section is a guide to the existing market dynamics based on the market research conducted. Below is an overview of a typical enterprise architecture that requires securing, provided the organization is not fully reliant on closed-source models and vendor-hosted environments:

Data & Compute Layer (AI Foundations): Organizations must secure databases, data lakes, or data leveraged from services like AWS S3. Some may already have a vector database. Enterprises should implement robust data governance controls.

AI Model & Inference Layer (Securing AI Logic): Organizations typically have some combination of foundation models, fine-tuned models (open or closed-source), embeddings, inference endpoints, and agentic AI workflows, hosted either internally or on public clouds. Across these areas, enterprises must secure the entire lifecycle, from build to runtime.

AI Application & User Interface Layer (Securing AI Usage): Organizations often use some combination of AI copilots, autonomous agents, or chatbots. Enterprises must ensure secure prompts, access controls, and implement content filtering measures.

Across these layers and depending on the enterprise’s AI strategy, one or two specialized vendors should be deployed to comprehensively secure their AI stack along these areas.

SACR Recommendations for secure AI deployment

Data security controls

AI preventative security & risk controls

AI Runtime security

1) Data Security Controls for AI

A strong AI security strategy cannot exist without robust data security controls, as AI models are only as secure and trustworthy as the data they are trained on and interact with. AI systems introduce new risks related to data access, integrity, leakage, and governance, making data security a foundational element in AI deployment. In the AI Global GenAI Security Readiness Report, surveyed respondents (CISOs, business users, security analysts, and developers) identified data privacy and security as major barriers to AI adoption. According to Adam Duman, Information and Compliance Manager at Vanta, AI has a way of making existing security issues worse. If companies are building GenAI apps or AI Agents they’ll want to use their own data and will be thinking about trustworthiness, responsible outputs, and bias. For example, if a company does not have existing data governance in place such as policies on data tagging, these issues will become exaggerated with AI.

Organizations should focus on how vendors allow them to secure data at every stage before it gets leveraged by AI.

DSPM (Data Security Posture Management (DSPM): Organizations should leverage DSPM solutions that scan their environments to give them visibility into all data stores and, most importantly, help them identify data sets for AI that must comply with GDPR, CCPA, HIPAA, and emerging AI-specific regulations, to ensure ethical and lawful deployment. The reason is that AI systems require vast amounts of structured and unstructured data, but not all data should be accessible to AI models. Hence companies should use DSPM to discover sensitive data.

Role-based and attribute-based access controls (RBAC & ABAC): Organizations should use data security vendors that have RBAC and ABAC controls to ensure that AI models and employees can only access authorized datasets.

Data Loss Prevention (DLP): Companies should prevent sensitive data leakage into generative AI models, as employees may unintentionally input regulated or confidential information.

2) AI Preventative & Governance Controls

Organizations should adopt proactive measures to protect AI. These key components include:

Governance policies: Organizations must establish robust governance and security policies for the secure deployment of AI. Governance controls should include oversight of all AI usage within the organization, both known and unknown, as well as securing the build and deployment lifecycle of AI applications.

Discovery & catalogue solutions for AI within enterprises (Where, What & Who): Organizations should deploy solutions to identify and inventory all categories of AI within their enterprise. Enterprises cannot secure what they cannot see—this fundamental cybersecurity principle equally applies to AI. Organizations must understand where AI is utilized internally: are employees interacting with ChatGPT, Claude desktop, or downloading open-source models from HuggingFace? By mapping both approved and shadow AI usage, enterprises can effectively scan models, generate AI Bills of Materials (AI-BOMs) to verify model provenance, and monitor for data loss. Organizations using open-source models should scan all models, as different models offer varying degrees of protection and guardrails. Understanding model provenance through MLSecOps helps identify potential biases. Enterprises should also carefully review vendor privacy policies to determine where organizational data is transmitted. For instance, locally hosting DeepSeek may be preferable to mitigate data sovereignty risks associated with sending data internationally, such as to China.

Leverage an AI-Security Posture Management (SPM) solution: AI-SPM allows organizations to assess, monitor, and secure AI/LLM deployments through scanning, providing visibility, risk detection, policy enforcement, and compliance. AI-SPM protects AI models, APIs, and data flows from model-based attacks and data leakage. Leveraging elements of Cloud Security Posture Management (CSPM) and Data Security Posture Management (DSPM), AI-SPM ensures security teams have a real-time inventory of AI assets. AI-SPM should detect misconfigurations, data leakage, and risks to model integrity throughout the AI lifecycle. AI security companies in this category assist enterprises in understanding where AI is used and the connected tools.

Simulate your defence systems for AI models (Pre-deployment and Post-deployment): Gen-AI Penetration Testing and AI Red Teaming play a critical role in identifying vulnerabilities, testing resilience, and enhancing security across the AI model lifecycle. Their primary function is to simulate adversarial attacks and uncover security weaknesses in AI models before they are exploited in real-world scenarios. AI penetration testing and red teaming should be implemented as proactive controls, enforcing secure AI development practices, policy compliance, and risk assessments at compliance and governance levels. Organizations should also conduct red team simulations in live environments to detect model drift and operational risks.

To summarize this section, we’ll use the words from one of the leading CISOs we spoke to, during our research. He phrased preventative controls as

*To address these risks, organizations must integrate Zero Trust Architecture (ZTA) for AI, ensuring that only authenticated users, applications, and workflows can interact with AI systems (Cloud Security Alliance, 2025). AI data governance and compliance frameworks must also align with evolving EU AI Act and SEC Cyber Rules to provide transparency and board-level visibility into AI risk management (Ivanti, 2025). By embedding AI security-by-design, companies can meet regulatory expectations while preserving business agility and innovation.

For enterprises deploying AI at scale, proactive risk mitigation is essential. Implementing AI-Secure Bill of Materials (AI-SBOMs) strengthens supply chain security, while AI Red Teaming identifies vulnerabilities before exploitation (Perception Point, 2025). As AI regulations evolve, security leaders must invest in continuous monitoring, cyber risk quantification (CRQ), and alignment with industry Risk Management Frameworks (e.g., NIST, ISO/IEC, OECD) compliance to maintain resilience. The organizations that embed AI security into their core strategy—from development teams to the boardroom—will lead the next era of AI-driven business.

3) Runtime Security for AI

Enterprises must implement runtime security for AI models, providing real-time protection, monitoring, and response to threats during inference and active deployment. Runtime security should utilize detection and response agents, eBPF, or SDKs for real-time protection. Runtime represents a critical component of AI security. In their paper titled “Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training”, Anthropic researchers demonstrated how models with backdoors intentionally behave differently in training versus deployment. AI security vendors in this category identify models with hidden backdoors and monitor incoming prompt injections or jailbreak attempts. These solutions also detect repetitive API requests indicative of model theft or other suspicious activities. Vendors should additionally enforce real-time authentication for API-based AI services.

Observability is an extension of runtime security. The objective here is for enterprises to capture real-time logging and monitoring of Al activity like capturing inference requests, model responses and system logs for security analysis. It allows enterprises to track training progress and AI responses in real time. Observability serves two critical functions: it helps teams improve and debug AI chatbots, and it enables detecting harmful or unintended responses—allowing systems to throw exceptions and provide alternative responses when needed. AI Security vendors in this space help enterprises debug applications and evaluate responses effectively. The entire lifecycle from build through deployment requires continuous oversight. Due to AI's non-deterministic nature, even robust safety guardrails cannot guarantee consistent responses. These solutions should integrate with SIEM and SOAR systems for real-time incident response and analysis.

Market Landscape For Securing AI

The AI security landscape is vast, encompassing numerous challenges. The space can be divided into two primary categories:

1) Securing Employee AI Usage: Protecting How Employees Interact with AI

This first category focuses on safeguarding enterprises from risks associated with employee interaction with AI tools, such as generative AI applications (e.g., ChatGPT, Claude, Copilot). Vendors in this category primarily focus on preventing data leakage, unauthorized access, and compliance violations by monitoring AI usage, applying security policies, content moderation, and enforcing AI-specific data loss prevention (DLP). The goal is to prevent employees from exposing sensitive information to AI models or using unauthorized AI tools.

AI security vendors in this category offer both out-of-the-box and customizable policies governing data allowed to be transmitted through AI applications. This approach, sometimes described as an “AI firewall,” allows vendors to act as a proxy on data passed to AI via APIs, browsers, or desktop tools.

These vendors also enable governance controls to ensure organizational policies are enforced on data, AI apps train on. They implement access controls (RBAC) to minimize oversharing and undersharing of information across the organization, classify for intent or catalogue how employees are using models.

2) Securing AI Models: Protecting the AI Development Lifecycle

This second category focuses on securing the AI development lifecycle, ensuring AI models are securely built, deployed, and monitored, particularly for homegrown applications. It includes protecting datasets, training pipelines, and models from tampering, adversarial attacks, and bias risks. Vendors in this space emphasize AI supply chain security, adversarial ML defences, model watermarking, and runtime monitoring to detect vulnerabilities in AI applications. These solutions are critical for organizations deploying proprietary AI models, ensuring outputs remain trustworthy, secure, and compliant with regulatory frameworks. Within this category, vendors have found success in addressing two subcategories:

Model lifecycle: These vendors secure AI applications from build through runtime, focusing on protecting AI models, applications, and underlying infrastructure throughout their lifecycle.

AI application security vendors: These players enhance traditional security approaches by addressing AI-specific concerns like non-deterministic behavior and specialized development practices, e.g. MLSecOps (Machine Learning Security Operations).

Vendor Product Offerings Overview

Securing AI Product Lifecycle

Palo Alto Networks

AI Access (GenAI apps activity through the network)

AI SPM (Visibility over custom apps-CSPM/DSPM)

AI Runtime (Apps/Agents In prod)

Protect AI

Guardian (Scanning solution in ML artifact)

Recon (AI red teaming)

Layer (AI Detection and Response)

HiddenLayer

HL Model Scanner (through supply chain / posture)

HL AI Detection & Response (eBPF agent; Gen AI Apps)

HL Automated red teaming and adversarial testing

Noma

Noma Testing & Red Teaming

AI SPM

AI Runtime protection

Data & AI Supply Chain

Pillar Security

AI Discovery (AI Asset Identification)

AI SPM (AI Asset Scanning & Visualization)

AI Red Teaming (AI Asset Testing)

Adaptive Guardrails (Runtime Protection)

AI Telemetry (Monitor and Govern)

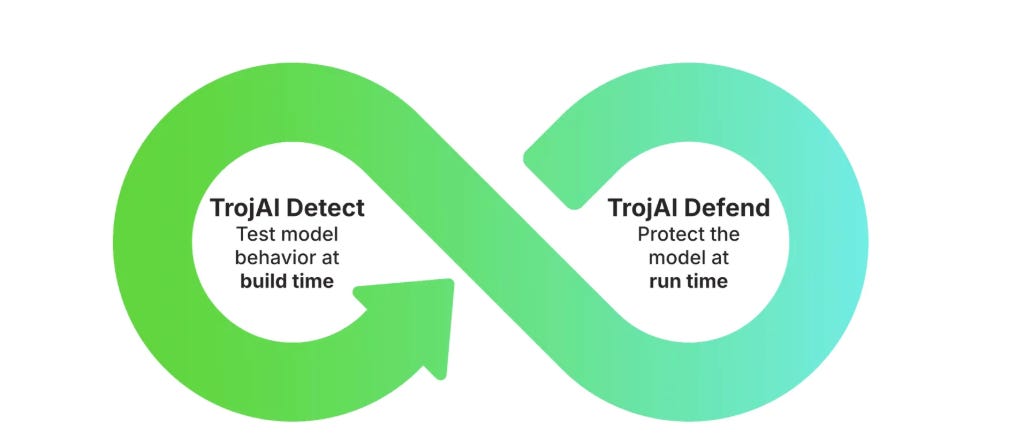

TrojAI

TrojAI Detect (pentest / red teaming)

TrojAI Defend (runtime)

Securing AI Usage & Enterprise Co-pilots

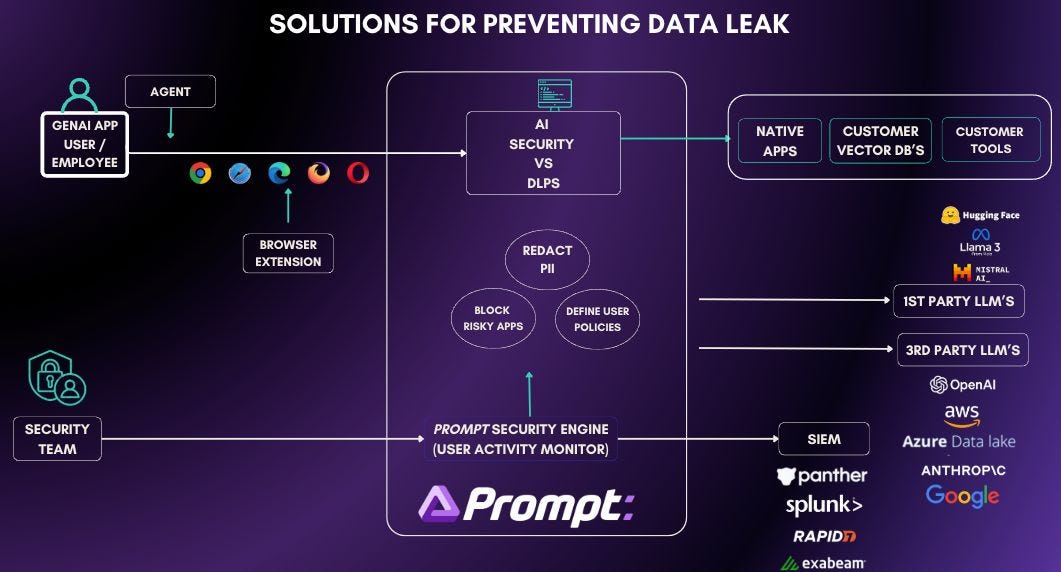

Prompt Security

Prompt for Employees

Prompt for AI Code Assistants

Prompt for Homegrown GenAI Applications

Zenity

Zenity AI-SPM

Zenity Detection and Response

Zenity Monitoring and Profiling

Witness AI

Observe module (network proxies - GenAI usage)

Control module (policy mgmt / intent-based)

Protect module (front-facing interactions/guardrails/data leakage)

Detailed Illustrative Breakdown of Key Market Vendors

A) Securing AI Product Lifecycle :

Palo Alto Networks AI Security

Palo Alto Networks offers customers its security for AI products integrated with the same platforms many enterprises already rely on for network security, cloud security, or SOC. They offer these capabilities through their Strata Network Security Platform - including their Prisma SASE solution - or Cortex Cloud to help customers secure their AI applications and models, as well as employee use of AI.

The overarching philosophy is to deliver holistic AI/LLM protection without making organizations deploy multiple new products or inline agents. This can help companies protect everything from employee use of generative AI services to their own homegrown AI applications—all with a comprehensive platform approach.

Core AI Products

AI Access Security

Palo Alto Networks AI Access Security focuses on discovering, controlling, monitoring and safeguarding employees’ usage of GenAI applications. Through their Next-Gen Firewall (NGFW) and/or Prisma Access solution, AI Access Security automatically identifies which GenAI apps are in use, by whom, and for what purpose–ensuring there are no blind spots. It provides bespoke risk scores to help make data-driven decisions about GenAI apps, and enables granular controls to prevent sensitive data from being leaked into GenAI apps and their training models. What’s more, it protects end users from malicious links and malware that may be hidden within GenAI responses. A core differentiator Palo Alto Networks has over emerging competitors is its comprehensive catalog of varying kinds of ~1,800 GenAI apps and LLM-powered data classification to keep sensitive data from inadvertently being shared with public AI models.

AI Security Posture Management (AI-SPM)

AI Security Posture Management (AI-SPM) gives visibility and control over the three critical components of custom AI applications organizations have developed:

The data used for training or inference,

The integrity of AI models,

Access to deployed models.

By building on Palo Alto Networks’ CSPM and DSPM , AI-SPM discovers, inventories, and continuously monitors AI workloads. This includes identifying the data sources and models AI apps leverage, the frameworks or managed AI services being used, and key misconfigurations or compliance risks. Because it relies on a unified view of cloud assets, AI-SPM can streamline how organizations track both traditional workloads and newly built AI/LLM applications in one place. The solution helps security teams detect unsafe or unauthorized model usage, reduce risk of data exposure from AI systems, and ensure compliance with current and upcoming regulations.

AI Runtime Security

Palo Alto Networks’s AI Runtime Security is built to secure enterprise AI applications and agents in production. The company provides inline defenses through its network or an API-based approach. AI Runtime Security detects and blocks malicious traffic—such as prompt-injection attempts, exploits aimed at AI models, or unauthorized data exfiltration. It leverages Palo Alto Networks threat detection (malware scanning, URL filtering) and data leak prevention features to safeguard AI-specific applications and agents. Rather than relying solely on post-event detection, the solution is designed for real-time, in-line prevention to thwart threats before they reach the underlying model. Using the AI Runtime Security API, Palo Alto Networks currently supports the use of its threat detection and prevention capabilities for AI agents built on low-code / no-code platforms such as Microsoft CoPilot Studio and Voiceflow. Additionally, Palo Alto Networks led the research, identification, and definition of the first-ever OWASP Top 10 for LLM Apps & Gen AI Agentic Security Initiative.

Overall assessment

Palo Alto Networks’ AI security portfolio aims to deliver a wide range of capabilities—spanning from employee usage governance to enterprise AI runtime threats. An important advantage is that their AI security features are delivered in-line with their established products: Palo Alto Networks’ firewall (hardware, virtual, or cloud form factor) and Prisma Access, augmented with visibility into risks across AI workloads through Cortex Cloud. This means organizations with a current Palo Alto Networks setup can often enable AI protections with minimal additional operational complexity. They have a unique advantage of implementing data governance controls leveraging their data security capabilities (100+ classifiers around PCI, PII, GDPR) together with their underlying threat intel and DLP engine that is applied to all AI traffic. This allows enterprises to maintain consistent coverage and policy enforcement across all types of AI applications and employee usage.

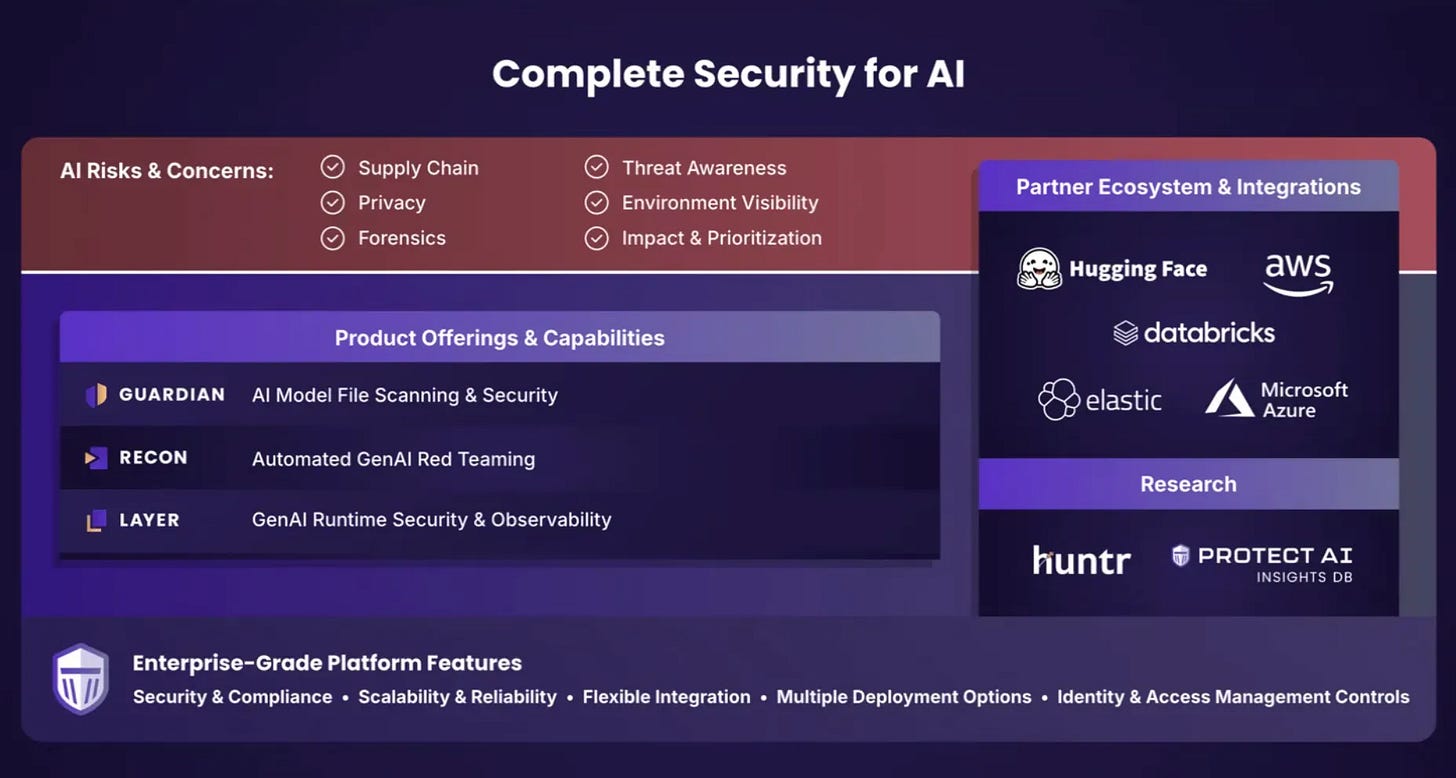

Protect AI

Protect AI is a Series B startup based out of Seattle, WA founded by Ian Swanson, Daryan Dehghanpisheh, and Badar Ahmed. Protect AI was founded in 2022 and to date has raised an impressive total of $108.5M with a variety of government partners across national security organizations and Fortune 500s. We consider them to be advanced in product capabilities and market traction.

The founders come from impressive and highly technical backgrounds at companies like AWS and Oracle. They have spent their careers building AI solutions as well as leading AI initiatives at AWS AI/ML services worldwide.

Protect AI positions its solution as a comprehensive, full lifecycle security platform for AI applications. The product is designed to secure AI “from the supply chain to runtime,” underscoring the company’s belief that enterprises should not adopt AI without stringent security controls in place.

Protect AI’s AI Security solution focuses on the entire AI lifecycle from build to runtime and consists of three main products: Guardian, Recon, and Layer.

Guardian is an enterprise-grade model scanning solution designed to scan the machine learning model artifact—the “engine” of AI applications—prior to its deployment. They have performed as much as over 3.2 million scans in a day. It helps spot serialization attacks, backdoors, and unsafe variants. This product comes with out-of-the-box policies companies can use to accept or reject models based on where it comes from, malicious code, safe storage formats, and licensing. Guardian supports PyTorch, TensorFlow, XGBoost, Keras, and other popular frameworks. Guardian integrates with Protect AI’s comprehensive platform that enables end to end visibility of AI and ML risks.

Recon is an AI red teaming product that tests AI applications against prompt injections, jailbreaks, data leakage and more. It helps organizations simulate different adversarial attacks. Recon leverages a robust library of over 400 attack scenarios, with ongoing additions to address evolving threat vectors that Protect AI has researched to date and is continuously updated with new attacks. Recon is base model agnostic and comes out-of-the-box with a library of 20K+ known vulnerabilities that can be used to attack GenAI systems for both safety and security. Recon can also be used to create custom attack scenarios specific to a business or industry.

Layer is an AI detection and response solution that monitors AI applications in production. It focuses on the security of live inference processes. It can enforce over 30 policies (with a demo showing 23) covering areas like Unicode manipulation, leakage of personally identifiable information, and other runtime risks. It can spot unicode, gibberish, and PII which can help catch jailbreaks and sensitive data loss. Layer uses small proprietary models to deliver on high benchmarks and high speed. Layer supports out of the box and custom policies that companies can use to block, monitor, or notify a user of certain activity. Notably, Layer goes beyond AI inputs and outputs by understanding what LLMs are connected to within an application. This approach captures threats across the entire system, going beyond what a simple AI firewall can provide. Layer achieves its detection functionally via an eBPF monitoring agent. This allows Layer to capture every session and interaction including API calls, system calls, and database queries, which allows for deeper insights and broader security coverage. However, it provides flexible integration options (SDK, agentless, eBPF-based) that facilitate low latency, cost-efficient, and highly accurate monitoring.

They have built an impressive partner program and have used these partnerships to deliver IP flywheels. For example, they have partnered with HuggingFace to scan the models available on their model hub. This creates value for HuggingFace so that their users can be educated on the vulnerabilities that are present in a model before downloading it. This also provides great value for Protect AI. This partnership allows Protect AI to scan over one million models which helps to create an IP flywheel on the latest findings and threats related to models.

Protect AI has built a large threat research community. The platform leverages a sizable threat research community (approximately 17,000 researchers) that contributes to its vulnerability intelligence. This crowdsourced model of innovation enhances the platform’s ability to stay ahead of emerging threats. Huntr allows researchers to submit model findings and get rewarded for previously unreported vulnerabilities. We believe there are flywheel effects that can serve as an avenue to fuel the technical acceleration of their product in the future.

Overall, as an analyst, our perspective is that we were impressed with Protect AI’s product suite, partnerships, roadmap and its capabilities in securing the entire AI lifecycle. As mentioned, Protect is further along in this market. The company has made some strategic and targeted acquisitions that have helped to round out its product suite across Guardian, Recon and Layer. The company’s deep domain expertise, combined with strategic integrations and a robust threat research community, positions it strongly against competitors. However, as with any complex security solution, its long-term success will hinge on its ability to scale efficiently, continuously update its threat intelligence, and navigate the evolving regulatory landscape.

HiddenLayer

HiddenLayer is a Series A startup based in Austin, Texas, founded by Chris "Tito" Sestito, Jim Ballard, and Tanner Burns in March 2022. The company has already achieved significant success based on the caliber of its customers and amounts raised. HiddenLayer has found tremendous success within the federal government, demonstrating the trustworthiness and credibility of its products. Additionally, it has one of the most robust educational research libraries in the market.

Platform Architecture and Core Components

Model Scanner: Their platform begins with a model scanning capability that assesses the security posture of AI models throughout the supply chain. It scans the model’s layers, components, and tensors for a range of vulnerabilities, including hidden backdoors, adversarial attack vectors, and supply chain risks. Recent research from HiddenLayer states that 97% of companies use pre-trained public repositories models. In traditional SDLCs, SCA solutions are used to scan software dependencies—models should be treated no differently, and companies are increasingly recognizing this.

A notable feature is its centralized repository, which tracks model origin, composition, and security status. This registry aids in policy enforcement and compliance reporting, similar to traditional application registries but tailored for AI assets. This is important because backdoors can be placed inside a model through a variety of methods, such as data poisoning. As the demand for model security solutions grows, HiddenLayer’s posture management functionality ties together the various security components to provide an overarching view of an organization’s AI security posture. It supports risk assessments and generates detailed reports that can be integrated with Governance, Risk, and Compliance (GRC) tools.

AI Detection and Response (AIDR): We’re particularly impressed with their real-time detection and response solution enabling organizations to monitor, block, and remediate potentially malicious interactions with AI systems. Specifically, HiddenLayer deploys an agent that leverages techniques such as eBPF to inspect runtime interactions—this agent monitors both the inputs and outputs of AI models to detect and block anomalous behaviors. For gen AI apps, the approach can function either as a proxy or through API integration, while for predictive models, the solution integrates more directly with the application code to assess security posture.

Automated Red Teaming and Adversarial Testing: The platform includes automated red teaming tools that simulate a wide range of adversarial attacks on both generative and predictive models. Red teaming is integrated into build and deployment pipelines, allowing continuous security assessments as models evolve. Their red teaming solution was developed against the OWASP Top 10 for LLMs, and almost all of the threats outlined in the Top 10 are supported by HiddenLayer. These threats are the culmination of months of research and various professional perspectives on the biggest threats to AI. A nice feature of the red teaming solution is that scans can be scheduled to be recurring and can also be done as needed against specific attacks. The attacks can test the entire AI system via APIs. Since HiddenLayer’s platform is well integrated, whatever weaknesses are found by the red teaming exercises can be used to influence their AI detection and response tool.

HiddenLayer is mainly focused on developing all components of their products internally as opposed to acquisitions. HiddenLayer has built a large research team that has published 40+ CVEs, 60+ disclosed vulnerabilities, and filed 10 patents. They were one of the first to come out with research on DeepSeek, where they discovered that DeepSeek-R1's responses to questions varied depending on the question's language. Additionally, they found that DeepSeek was susceptible to well-known and older jailbreaks like DAN (do anything now).

HiddenLayer has a comprehensive AI Security platform that secures AI applications from build to runtime. They have not made any acquisitions, which they believe makes their platform feel more cohesive than others on the market. Their platform integrates with typical MLOps workflows and meets machine learning engineers where they are, such as their integration with Databricks, for example.

Although initially adopted by federal and DOD organizations, the platform’s capabilities are increasingly attractive to large enterprises in the financial services, manufacturing, and healthcare sectors. Its holistic compliance, reporting, and integration features are particularly relevant for highly regulated environments.

From an analyst perspective, we believe HiddenLayer’s platform is well-positioned in the emerging AI security market particularly because the company has built a steady customer base with some of the most stringent government institutions, lending validity to its products. For organizations evaluating AI security solutions, HiddenLayer offers a compelling proposition across proactive defense, operational integration into build pipelines, and compliance readiness for organizations wanting to meet regulatory requirements.

Noma

Noma is a Series A startup based out of Tel Aviv, Israel founded by Alon Tron and Niv Braun. Despite only being founded in 2023, they have found tremendous success, including signing some big enterprise customers such as Fortune 100s and 500s . The founders have a formidable security background with experiences at Unit 8200, the elite cyber unit of the Israeli Defense Forces and at companies maximizing business opportunity using AI.

Noma’s leadership understands that traditional application security tools and practices, designed for the conventional software development lifecycle (SDLC), fall short when applied to AI. As highlighted in recent discussions, AI’s lifecycle extends far beyond what is typically captured by traditional SDLC models. Data pipelines, for example, are central to training and building models and often reside in environments like Jupyter notebooks, Databricks, or AWS SageMaker. Most of the work is done outside the CI/CD pipeline, which creates significant blind spots that must be addressed through broader security measures. This shift is critical, especially as the demand for AI and machine learning engineers grows, while traditional software engineer roles are becoming less central. This puts companies like Noma in a position to capitalize on a growing opportunity. The other reason the traditional SDLC does not match the AI SDLC is because AI applications change, even after deploys are made. AI applications continuously change since models can change as users interact with them. This calls for an increased focus on the right side of the SDLC, where traditionally the focus has been on shifting left. Ultimately, security for the AI SDLC needs to be left, right, and center.

Noma’s security solution covers build all the way to runtime for AI applications.

AI applications are dynamic—models evolve continuously as users interact with them, and misconfigurations or vulnerabilities can emerge at any point. In this context, security must be proactive and continuous. Noma addresses this need by securing every stage of the AI SDLC:

Noma’s solution secures every stage of the AI lifecycle:

Build and Integration:

Noma’s platform integrates with a wide array of tools—from version control systems to notebooks, data pipelines, and model registries—providing comprehensive visibility into AI assets. By scanning notebooks and data pipelines for misconfigurations, exposed secrets, and vulnerabilities, the platform addresses areas traditionally overlooked by conventional security measures.Testing and Red Teaming:

In addition to static scanning, Noma combines red teaming with dynamic testing based on established frameworks such as the OWASP Top 10 LLM and MITRE ATLAS. This dual approach identifies vulnerabilities and provides clear, actionable recommendations, ensuring that security risks are addressed proactively in a constantly evolving AI environment.Runtime Protection:

Once an AI application is deployed, continuous protection is essential. Noma’s runtime solution monitors adversarial threats—including prompt injection, model jailbreaks, and sensitive data leakage—in real time. Configurable safety guardrails prevent harmful outputs and off-topic responses, ensuring that the deployed applications remain secure and compliant.

Noma connects traditional security practices to AI-specific requirements by using familiar terminology while introducing new concepts, ensuring stakeholders understand the importance of securing the entire AI lifecycle.

Its platform integrates seamlessly with version control systems, notebooks, data pipelines, and model registries, scanning for misconfigurations, exposed secrets, vulnerabilities, and model license compliance issues. Noma also helps teams govern training data usage and manage RAG access to prevent data misuse. To continuously test for vulnerabilities, Noma provides a red teaming solution that blends static scans against a proprietary database with dynamic assessments guided by frameworks like the OWASP Top 10 LLM and MITRE ATLAS.

Once in production, Noma’s runtime protection monitors for AI adversarial threats such as prompt injection, model jailbreaks, and sensitive data leakage. It also supplies configurable safety guardrails designed to block harmful or off-topic outputs. By offering comprehensive coverage from build to runtime, Noma positions itself as a leader in the AI security market.

Pillar Security

Pillar Security is an early-stage pioneering startup founded by Dor Sarig and Ziv Karliner in 2023. The Tel Aviv based company is focused on developing a comprehensive AI Security platform to protect AI applications and agentic systems throughout the entire AI lifecycle. The founders bring extensive cybersecurity expertise to Pillar, with previous roles at Perimeter 81 and Aqua Security and work in the Israeli government & intelligence forces. Pillar Security has quickly gained traction, offering solutions such as AI asset discovery and catalog, runtime guardrails, automated red teaming, and proactive threat mitigation capabilities. The company's platform is designed to be model-agnostic and supports both self-hosted and cloud deployments. Their risk engines are constantly optimized by analyzing real-world AI app interactions and extensive AI threat research.

Pillar's customer base ranges from Fortune 500 companies to AI vertical startups across sectors like healthcare, finance, and technology.

Pillar is an end to end platform that secures AI applications from build to runtime. They break down the stages across build to runtime as plan, code, build, test, deploy, operate, and monitor.

During the plan phase Pillar provides an AI Workbench where teams can test models in a safe environment by analyzing security boundaries, experimenting with defensive configurations, and simulating real-world attack scenarios. The tool can help you generate additional test scenarios beyond the one you’ve provided and also test it out when guardrails and a system prompt is applied to the model.

During the code phase their AI Discovery tools help identify AI assets in code/no-code, AI/ML & data platforms. Pillar recognizes that traditional code scanners and DevSecOps tools exist outside of machine learning pipelines where models are forged. AI Discovery integrates with SCM, MLOps, and data platforms, to continuously identify and catalog all AI/ML models, meta-prompts, datasets, pipelines and notebooks. AI Discovery maintains a real-time inventory of all AI assets, which can be used by governance and risk teams.

In the build phase, the AI-SPM product takes center stage by conducting deep risk analysis of identified AI assets and intelligently understanding and graphing out the landscape of AI assets and their interconnections. Many have called 2025 the year of Agents. The hype train surrounding agents is due to the fact that the total addressable market for AI Agents compared to traditional SaaS is three orders of magnitude larger. In his blog post “Agentic AI's Intersection with Cybersecurity”, Chris Hughes, President of Aquia, articulates this opportunity by sharing, “advanced agents could disrupt the $400 billion software market and eat into the $10 trillion U.S. services economy”. Pillar engines utilize both code and runtime behavior to create detailed visual representations of Agentic AI systems and their potential attack surfaces in addition to identifying supply chain and pipeline risks such as model and data poisoning or sensitive data exposure. These insights help teams prioritize threats and understand the complex security posture of their AI projects, making the technology especially relevant in what many call the year of Agents.

To test AI systems, Pillar provides an AI red teaming solution. Using this Pillar can test AI systems against OWASP top 10 risks such as prompt injection, jailbreaks, sensitive data leak and custom business context specific scenarios. Since Pillar knows what AI Agents or AI systems are connected to via the AI-SPM tool, Pillar can test that permissions boundaries within the system are respected, which can be seen as a differentiator. The ability to test custom business scenarios is notable as well. Cybersecurity is usually a secondary market, meaning company funds are usually first dedicated to engineering and GTM efforts that help a new startup find product market fit. Spend on cybersecurity has historically come later, but given the high risks related to AI Security and the cross over between business risk and security risk companies are spending on security earlier. High level buyers like CEOs will be just as eager to see a tool help them test that AI systems output results relevant to their specific business (e.g. if you’re Ford don’t recommend Tesla) as well as help them test that AI systems are resistant to top threats like jailbreaks.

This concept is seen again during the deploy and operate phases Pillar outlines. Their runtime solution, Adaptive Guardrails, uses a data classification and risk detection engines that enforces policies aligned with your code of conduct to make sure inputs and outputs are what they should be. The guardrails also evolve overtime based on application-specific red teaming insights and real-world usage patterns. It does this by learning from both automated and manual red teaming exercises. That’s one of the advantages of Pillar and other AI Lifecycle platforms, that functionality or insights from one part of the platform can bring additional value to another.

Also during the operate phase, Pillar provides a sandbox for AI Agents to call tools that they use. Excessive Agency is number 6 on the OWASP Top Ten for LLMs and is related to Agents having too much functionality, excessive permissions, or excessive tool access. Agents that call tools and have excessive permissions could easily perform an unwarranted and unexpected action. Pillar’s sandbox can help make sure agents are taking the correct actions. If they don’t the sandbox provides a log of activities for forensic purposes.

Lastly, Pillar’s platform can help monitor and govern AI applications. Pillar captures AI inputs and outputs as well as agent workflows and tool calls. Their monitoring solution provides traceability while providing advanced search filters and analytics to identify unusual behaviors.

Pillar’s platform is a comprehensive solution for teams looking to secure the entire AI Lifecycle from build to runtime.

TrojAI

TrojAI is a seed-stage startup based in Saint John, New Brunswick, Canada, founded by James Stewart and Stephen Goddard in June 2019. The company emphasizes that security must begin at the build stage—through rigorous adversarial testing of AI models—and persist throughout the operational phase. This dual-layer strategy is designed to mitigate vulnerabilities from initial development all the way to real-time operations. As a result, TrojAI’s solution consists of two main offerings: TrojAI Detect and TrojAI Defend.

TrojAI Detect: This is Troj’s penetration testing product that assesses model risks. It is an AI red teaming solution that comes with more than 150 out of the box tests. Their solution also supports threats outlined in the OWASP Top 10 for LLMs as well as those identified by NIST and MITRE ATLAS. The TrojAI user interface can be seen as a competitive advantage as well as their ability to perform red teaming tests on multimodal outputs from LLMs. Popular open source red teaming solutions such as Nvidia’s Garak or Microsoft’s PyRIT require manual setup and tuning, lack many out-the-box datasets, and do not offer automation or an accompanying UI. TrojAI’s UI provides clear and concise reporting and analytics, making it easier to see which attacks a model is vulnerable to and how they compare against established benchmarks. Due to these comprehensive features, TrojAI Detect functions as an advanced penetration testing platform for AI, ML, and GenAI models. TrojAI Detect can be deployed with any model on any cloud and can be self-hosted in a VPC on any cloud service.

TrojAI Defend is a runtime security product that identifies and protects AI threats in real time. It monitors for attacks such as prompt injection, jailbreaks, and denial of service. It’s an enterprise-grade platform that allows for easy integration and flexible deployment with a reverse proxy architecture that can be self-hosted in a customer VPC on their cloud services of choice. TrojAI Defend acts as an LLM firewall, monitoring inputs and outputs for sensitive data loss, prompt injection, data poisoning, denial of service attacks, jailbreaking, and more. TrojAI Defend can filter an impressive 10 million or more tokens per second. TrojAI Defend is deployed fully in a customer’s environment (typically their VPC) so prompts and data do not have to be sent to TrojAI. This also helps keep latency low. TrojAI Defend provides policies that can be used out of the box or customized to control actions when TrojAI Defend finds an attack or a risk. These actions can allow, block, redact, or report on findings. This helps businesses fine tune detection and responses to their unique needs. TrojAI Defend also supports custom rule creation using regex, blocklists, and LLMs to further help businesses tune AI detection and response to their business needs.

Our assessment:

One of the distinguishing features of the TrojAI platform is its extensive customization options. Enterprises can tailor the security controls by integrating their own rules, data sets, and even custom classifiers. Additionally, the platform is designed to seamlessly integrate with existing security ecosystems. It supports standard protocols such as syslog and Kafka and can interface with third-party systems like Splunk, thus enhancing its utility in diverse, regulated environments.

TrojAI is clearly targeting large, complex enterprises, including Global 2000 and Fortune 500 organizations, that are actively building mission-critical AI applications. Further recognizing the stringent data privacy and compliance requirements of large enterprises, TrojA deploys its solutions as containerized software within a customer’s own cloud environment. This self-deployment model—whether on Azure, AWS, or Google Cloud—minimizes latency and alleviates concerns around data exposure, as the sensitive inputs and outputs remain within the customer’s infrastructure.

TrojAI has a robust partner program with opportunities for technology partners, consulting partners, and channel partners. TrojAI was recently selected to be a part of the Pegasus Program which is an invite-only initiative offered by Microsoft for Startups. These strategic partnerships, coupled with a compelling user interface and a product that supports both build-time and runtime security, position TrojA for continued success.

B) Securing AI Usage

Prompt Security

Prompt Security is a comprehensive solution for GenAI security helping to secure employees use of AI and also homegrown AI applications. They are a Series A startup founded in August of 2023 with over a dozen Fortune 500 enterprises as customers already. They offer a comprehensive solution that spans the entire spectrum of generative AI use—from employee tool usage and developer environments to homegrown applications—addressing the specific risks inherent in each context across the enterprise. Their platform scans every prompt and response and is designed to manage risks ranging from shadow AI and Data Leakage to prompt injections and insecure output handling.

Prompt Security’s product suite is organized into three distinct lines that address specific challenges across the organization.

1. Prompt for Employees

For employees, the platform is designed to monitor and manage the use of generative AI tools, ensuring that unsanctioned or high-risk applications are detected and controlled. It automatically scans and sanitizes employee interactions with these tools to prevent the accidental leakage of sensitive information such as revenue data or personally identifiable information. This continuous oversight not only helps in identifying shadow applications but also enforces tailored security policies across departments and roles, thereby reducing overall risk and ensuring data privacy in real time with redaction of sensitive data but maintaining the productivity gains brought by AI.

Prompt Security helps enterprises gain observability and visibility of AI use by employees in the organization. Prompt Security can detect shadow AI use and provides granular policies for AI governance that can be set per department or user. It also supports AI components within other tools such as Notion AI, Adobe or HubSpot. A unique differentiator is that they also help educate employees on the risk associated with their actions. This helps them learn which AI tools are okay to use and what data is acceptable to share with each. This coupled with proactive blocking of risky AI apps, redacting PII, and redacting sensitive data helps protect enterprises from data loss and compliance violations. Notably, Prompt Security, thanks to its dynamic detection mechanism for AI tools, detected DeepSeek as a high risk AI tool one year ago, making them one of the first to raise concerns about DeepSeek. The company’s database of over 10,000 AI tools grows on a daily basis thanks to this mechanism.

2. Prompt for AI Code Assistants

Prompt Security focuses on securing the development lifecycle by integrating with AI-based code assistants (such as GitHub Copilot or Cursor). The platform performs bidirectional scanning on both the inputs and outputs of these tools, effectively preventing the exposure of sensitive code, API keys, and intellectual property. This proactive approach helps organizations avoid compliance issues and audit failures by ensuring that all interactions within the development environment are continuously monitored and sanitized. By redacting and anonymizing sensitive data as it flows through the code assistance process, the platform provides a robust safeguard that supports both innovation and regulatory compliance.

A common challenge for enterprises has been security concerns blocking the onboarding of AI coding assistants. These copilots can 10x developer productivity but present certain data security challenges. These tools, while incredibly useful, often send prompts and data out to third party LLMs via APIs. Additionally, they inspect responses to make sure no vulnerable code is generated, as well as inspect the responses from GitHub Copilot to make sure the developers don't include vulnerable or hazardous code back that's been AI-generated.

This presents significant data privacy concerns with companies wanting to protect their code and intellectual property. Prompt is able to sit in the middle of developer IDEs and AI code assistants and act as a reverse proxy. This proxy can block and redact secrets as well as provide alerting and enforcement of policies.

3. Prompt for Homegrown GenAI Applications