The Convergence of SIEMs and Data Lakes: Market Evolution, Key Players and What’s Next

Key actionable steps on how security leaders can adapt to the evolution of SIEM and unlock a modern SOC strategy

Authors

Aqsa Taylor is the Chief Research Officer at Software Analyst Cyber Research, where she leads research initiatives and the security leaders community.

Francis Odum is the Founder/CEO of Software Analyst Cyber Research, where he leads the firm’s research and engagement with cybersecurity leaders.

Rafal Kitab is a SOC and Incident Response leader at ConnectWise with extensive experience as a Security Analyst, Engineer, Architect, Incident Responder and, most recently, Director. He brings considerable experience in Security Operations and shares his first-hand experiences.

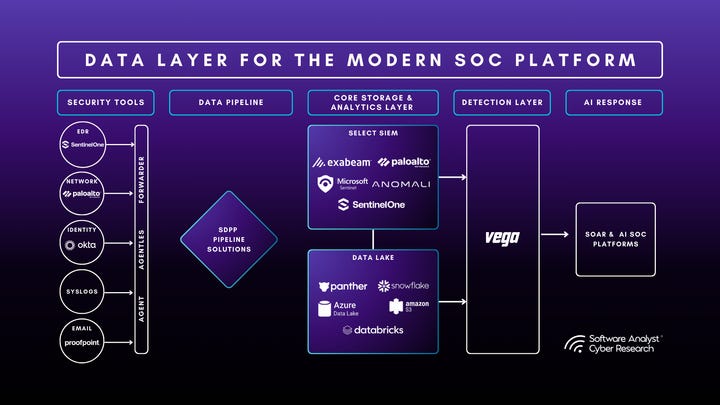

The SIEM market is undergoing one of its most significant shifts in decades, driven by the rise of security data lakes, pipelines, and advanced analytics platforms. What began as centralized log management has expanded into a battle over who controls the SOC’s data layer—traditional SIEM vendors, cloud-native data platforms, or emerging security data pipeline providers. This report explores the convergence of SIEMs and data lakes, the competitive dynamics shaping the market, and the key players redefining how enterprises detect, analyze, and respond to threats.

We further explore how the SIEM is being modernized in 2025 to address legacy concerns, including rising costs, noisy data, and expanding SOC requirements. It serves as a field guide for CISOs, SOC leaders, and practitioners evaluating modern SIEM models. The report defines the core architecture patterns shaping the market, including pipeline-first designs, decoupled compute and storage with data lakes and federated query layers, and converged platforms that unify SIEM with UEBA, SDPP, XDR, SOAR, and exposure management. We’ve collaborated with a select number of large SIEM providers to evaluate their solutions in-depth.

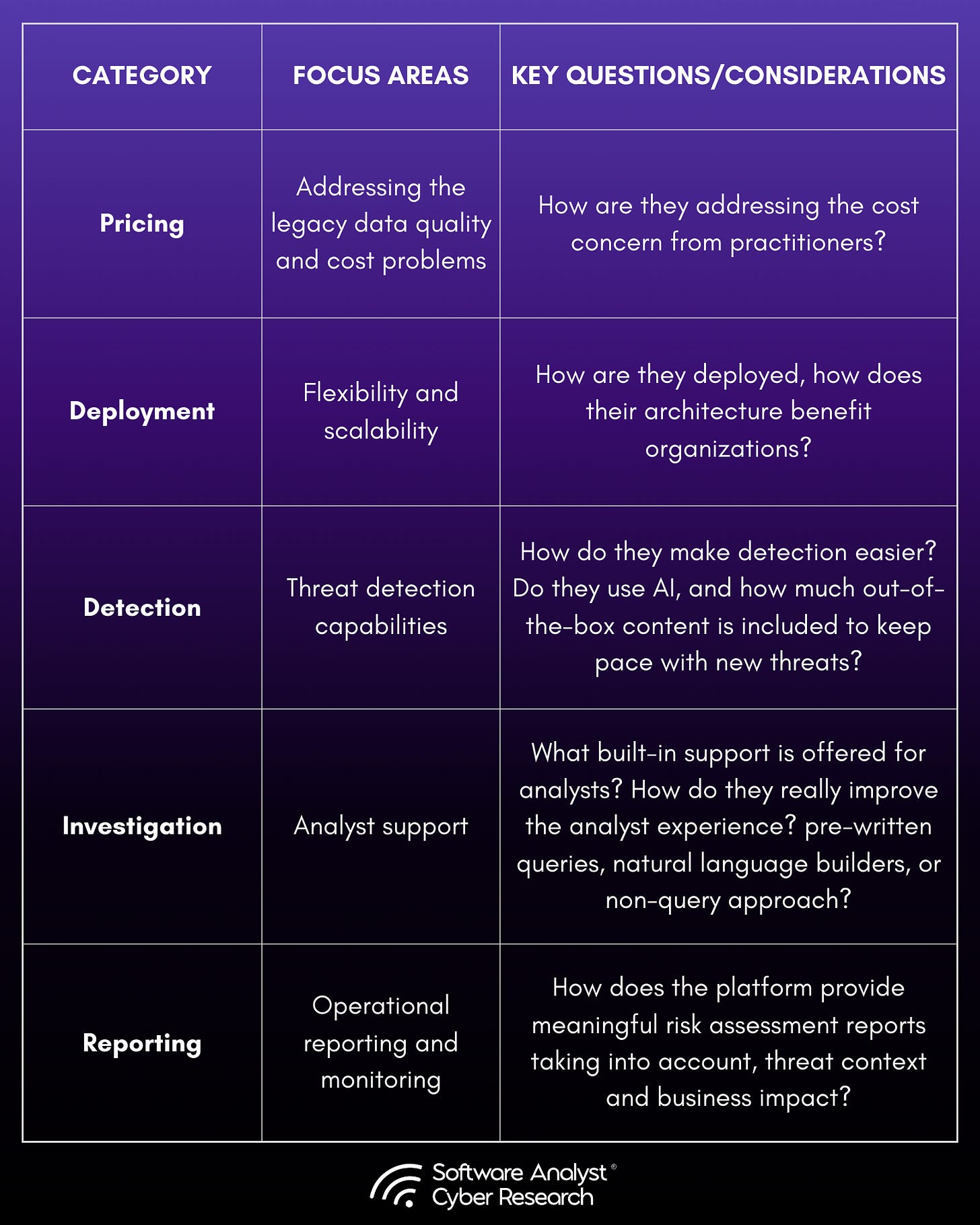

We conducted an in-depth analysis of many vendors. This report maps seven vendors to real-world use cases and SOC maturity, and uses our PDDIR framework (Pricing, Deployment, Detection, Investigation, Reporting) to compare how each tackles cost control, noise reduction, analyst efficiency, and openness. Backed by hands-on demos and questionnaires, it offers a practical decision playbook, clear selection criteria, and our opinion covering pipeline integration, pricing model shifts, AI for the analyst, and evolution of modern SIEM architecture.

Introduction

Our Market Guide 2025: The Rise of Security Data Pipelines & How SIEMs Must Evolve and SACR AI SOC Market Landscape For 2025 reports set the stage for the major shifts we see in the Security Operations world, with SIEM at the center of it. Practitioners have been vocal about the rising costs, the operational overhead of rule management, and the alert fatigue with noisy logs. SIEM budgets are increasingly challenged by contenders such as XDRs, security data lakes, security data pipelines, and other security analytics and operations platforms. Yet one thing is certain: SIEM platforms are here to stand their ground. However, what defines a modern SIEM platform is now far more demanding.

What once began as a straightforward logging and analytics tool has transformed into one of the most complex and expensive platforms in the SOC. Vendors are advancing SIEM with deeper ties to data pipelines, AI-driven capabilities, modular designs, and a sharper focus on the analyst experience. What stands out in 2025 is how each leading provider is pushing the SOC forward in their own way.

This report highlights the major trends shaping SIEM in 2025 and what they mean for security teams. We look at how SIEM delivers value today, where it must evolve, and the characteristics that set modern platforms apart. The analysis focuses on how vendors are addressing long-standing pain points, how AI is changing the analyst experience, and how new architectures are reshaping the role of SIEM in the SOC.

To ground these themes, we assessed a set of vendors through demos and questionnaires using our comprehensive PDDIR (Pricing, Deployment, Detection, Investigation, Reporting) framework to show how these shifts are playing out in practice and what factors security leaders should consider when making decisions.

Actionable Summary

SIEM is not disappearing, but transforming: Despite concerns with pricing and volume bloat, along with pressure from XDRs, data lakes, and security data pipeline platforms, SIEM remains central to the SOC; its definition has simply expanded.

Cost and complexity remain top concerns: Rising data volumes and ingestion-based pricing models push buyers toward platforms that offer predictable costs, flexible storage, and reduced management overhead.

Security data pipelines are reshaping SIEM: Filtering, in-stream detections, broader integrations, normalization and better data quality via SDPPs are now essential capabilities, helping to cut costs and reduce noise.

Architectural shifts are accelerating: Vendors are moving from monolithic stacks to decoupled or distributed designs that separate compute and storage, enable federated queries, and give customers more control.

AI is moving from hype to utility: Copilots, natural language detections, and automated investigations are being built into SIEMs, lowering the barrier for detection engineering and reducing analyst fatigue.

Pricing innovation is critical: Many modern SIEM platforms are experimenting with pricing models based on data sources, utilization, or filtered events, in contrast to legacy ingest-based approaches.

Convergence is reshaping the SOC: SOAR, XDR, exposure management and now SDPPs are being pulled into SIEM, making it less of a standalone product and more of a unified operating layer.

Market split is emerging: SIEMs are evolving in two directions: open, decoupled overlays that maximize flexibility, and tightly bundled ecosystems that maximize integration.

From Legacy to Modern SIEM: Setting the Stage

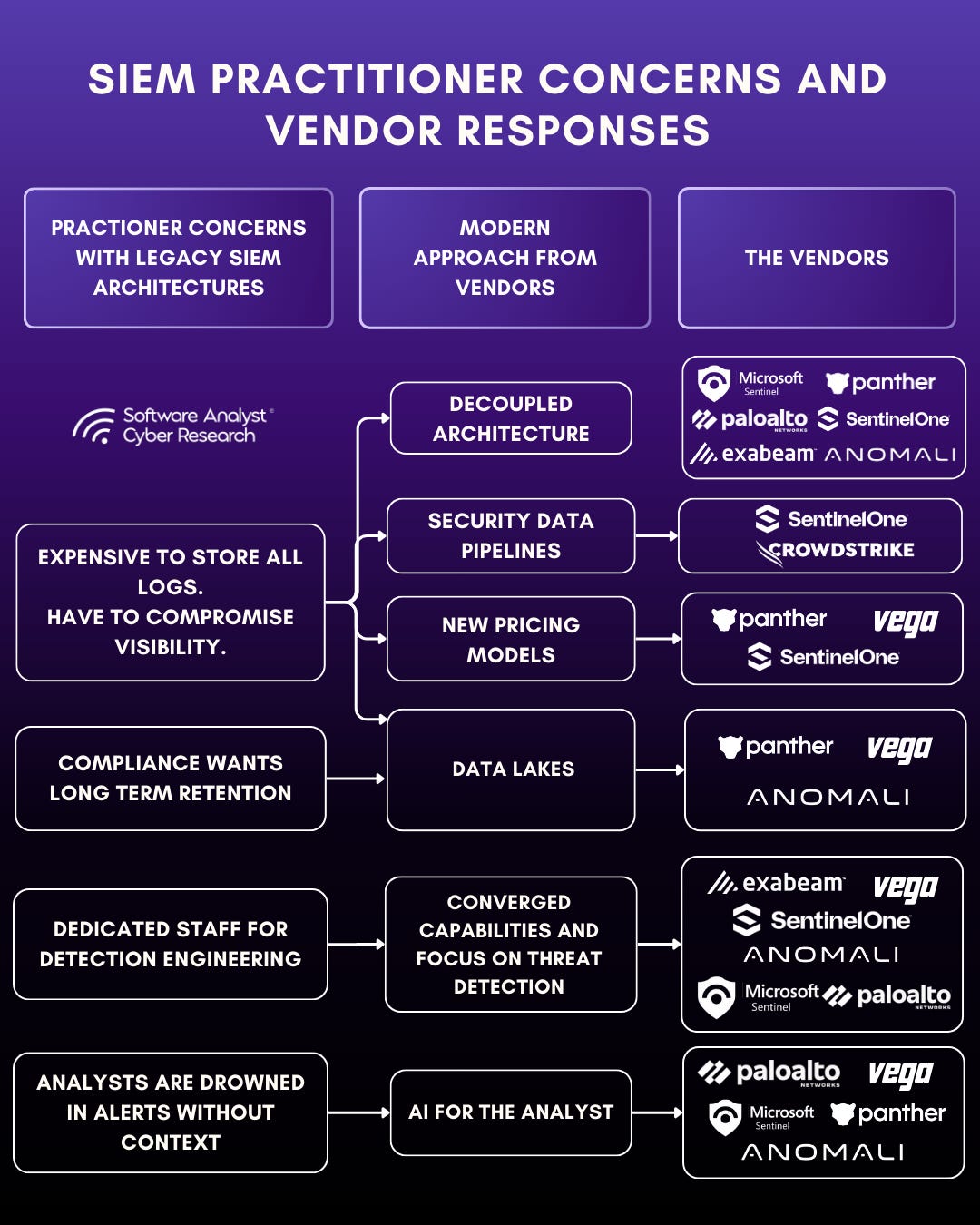

Practitioners have made it clear: costs keep rising, data is noisy, and analysts are drowning in management overhead. Yet SIEM isn’t going away. It’s adapting, taking on these challenges head-on with new architectures and smarter workflows. In a modern SOC architecture, however, SIEM is no longer a standalone player. It’s becoming part of a bigger ecosystem, complemented by pipelines, data lakes, automation workflows, and adjacent platforms such as SOAR and XDR. To briefly summarize what led to the modernization of SIEM platforms:

The Cost Problem

Data volumes keep rising as cloud adoption grows, and legacy SIEM pricing forces teams to choose between visibility and cost.

What organizations want:

Noise reduction capabilities that help separate signal from noise

Predictable pricing that avoids hidden cost bloat

Full visibility without blind spots

Long-term retention without penalties

The Overhead Trap

Legacy SIEMs are resource-heavy, demanding constant upkeep and specialized staff just to stay operational.

What organizations want:

Faster onboarding with minimal manual setup

Out-of-the-box content that reduces time to value

Less reliance on dedicated detection engineering staff

Automation that reduces day-to-day overhead

Analyst time focused on investigation and response

The Analyst Dilemma

Industry research suggests that anywhere from 45% to 80% of security alerts are false positives. At those rates, analysts see roughly one to four false alerts for every legitimate threat they uncover. The same research states that analysts spend nearly three hours manually triaging them.

What organizations want:

Reduced alert fatigue and better signal on threats

OOTB threat detection content that keeps up with the changing threat landscape

Easy way to build custom threat detections

Guided investigations with clear context

Real reduction in repetitive, manual work

Market Shifts and Competitive Trends

In 2025, vendors are re-defining what a SIEM platform should look like by directly tackling the problems organizations have long faced with legacy tools. Some ways they are doing so:

Stronger focus on Security Data Pipelines

We reported earlier, in our Market Guide 2025: The Rise of Security Data Pipelines & How SIEMs Must Evolve report, that Security Data Pipeline Platforms are quickly becoming a core component of the SOC architecture. This has been borne out by CrowdStrike’s recent acquisition of Onum and SentinelOne’s acquisition of Observo AI, which highlight how vendors are moving toward a pipeline-first approach to address “noisy data” and cost concerns.

Coupling Security Data Pipeline Platforms (SDPPs) into the SIEM fabric elevates the platform beyond the traditional SIEM mold by addressing key shortcomings of legacy architectures:

Filtering at ingestion – Traditional SIEM platforms lack data quality controls at ingestion, leading to increased storage and analytics costs. Integration with SDPPs bridges this gap by filtering unwanted data at the source before it is saved in SIEM storage.

Broader ingestion coverage – SDPPs integrate with a wider range of data sources, helping expand the integration coverage of SIEM platforms.

Detections in the pipeline – By enabling in-stream detections, SDPPs can significantly reduce Mean Time to Detect (MTTD) by avoiding storage indexing and processing delays.

Cheaper storage options – Some SDPPs include built-in data lakes and cold storage capabilities, providing more cost-efficient options for long-term data retention.

Avoiding vendor lock-in – SDPPs are built on open standards, allowing data to be routed to any destination. When paired with SIEM platforms, they can also simplify migrations.
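The pipeline capabilities listed above (filtering at ingestion, in-stream detection, and routing to cheaper storage) can be sketched in a few lines of Python. This is a minimal illustration rather than any vendor’s implementation: the `Pipeline` class, the event field names (`type`, `severity`, `count`), and the routing rule are all assumptions made up for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable

Event = dict  # a parsed log record; the field names used below are hypothetical


@dataclass
class Pipeline:
    """Toy security data pipeline: filter, detect in-stream, then route."""
    drop_if: Callable[[Event], bool]   # filtering rule applied at ingestion
    detect: Callable[[Event], bool]    # detection rule evaluated in-stream
    alerts: list = field(default_factory=list)
    siem: list = field(default_factory=list)        # expensive analytics tier
    cold_store: list = field(default_factory=list)  # cheaper long-term tier

    def process(self, events: Iterable[Event]) -> None:
        for event in events:
            # 1. Filtering at ingestion: noisy events never reach storage.
            if self.drop_if(event):
                continue
            # 2. In-stream detection: alert before the event is stored or indexed.
            if self.detect(event):
                self.alerts.append(event)
            # 3. Routing: higher-severity events go to the SIEM,
            #    everything else goes to cheaper storage.
            if event.get("severity", "info") in {"high", "medium"}:
                self.siem.append(event)
            else:
                self.cold_store.append(event)


pipeline = Pipeline(
    drop_if=lambda e: e.get("type") == "heartbeat",
    detect=lambda e: e.get("type") == "login_failed" and e.get("count", 0) >= 5,
)
pipeline.process([
    {"type": "heartbeat", "severity": "info"},
    {"type": "login_failed", "count": 7, "severity": "high"},
    {"type": "dns_query", "severity": "info"},
])
print(len(pipeline.alerts), len(pipeline.siem), len(pipeline.cold_store))
```

In this sketch the heartbeat event is dropped before it incurs any storage cost, the failed-login burst raises an alert without waiting for indexing, and the low-value DNS record lands in the cheaper tier.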

In October, we will publish a report that takes a deeper dive into the SDPP market trends and insights.

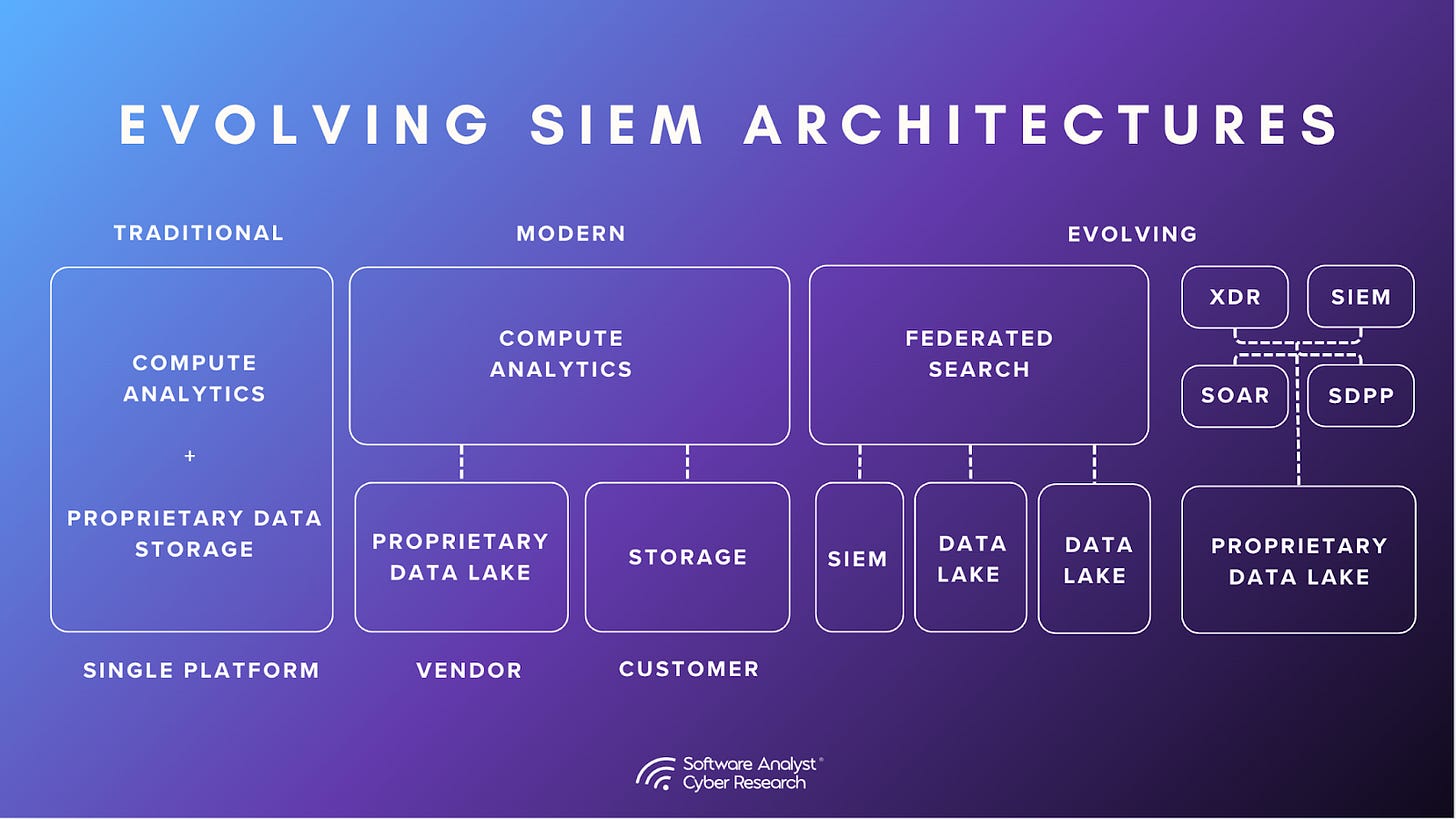

Emergence of Decoupled SIEM Architectures

Historically, SIEMs provided a monolithic architecture without tiering, handling ingestion, normalization, and analysis in a single stack. Now, vendors are rolling out decoupled architectures that separate storage from compute, giving customers more flexibility and ownership of costs. The verdict is still out on whether this move reduces pricing or makes it more convoluted, but one thing is certain: it gives customers a choice that was not available before.

Data lakes are gaining popularity: Microsoft Sentinel Data Lake, SentinelOne’s Singularity Data Lake, AWS Security Lake, and now Splunk’s Machine Data Lake, to name a few. The goal is to address practitioners’ pricing concerns by providing cost-effective ways to store data long term while still enabling analytics at reasonable speeds. At SACR, we view the market as being in a transitional stage: while data lakes are becoming more common, they are still most often coupled to a single analytics engine.

With data lakes come federated searches. We also see the emergence of the “query layer” model, which is reshaping how security teams think about data management in SIEM. Instead of forcing all telemetry into expensive analytics storage, some emerging platforms, such as Vega Security, are building technology-agnostic SIEMs that can operate across data sources, no matter where the data resides. In an ideal scenario, this creates an ecosystem where organizations can select the best-of-breed analytics platform alongside the best-of-breed data management solution. At minimum, it offers flexibility, allowing security data to be retained in any environment, including isolated or highly regulated ecosystems.
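To make the “query layer” idea concrete, the following is a minimal sketch of a federated search, assuming each backend exposes some form of search call. The `InMemorySource` class and `federated_search` function are hypothetical stand-ins invented for illustration; a real connector would push the query down to the data lake, SIEM index, or cloud bucket rather than scanning a Python list.

```python
from typing import Callable, Iterable, List

Event = dict  # hypothetical normalized record with at least a "ts" field


class InMemorySource:
    """Stand-in for a data lake, SIEM index, or cloud bucket."""

    def __init__(self, name: str, events: Iterable[Event]) -> None:
        self.name = name
        self._events = list(events)

    def search(self, predicate: Callable[[Event], bool]) -> List[Event]:
        # A real connector would translate and push the query to the backend.
        return [e for e in self._events if predicate(e)]


def federated_search(sources: Iterable[InMemorySource],
                     predicate: Callable[[Event], bool]) -> List[Event]:
    """Run one query against every source and merge results by timestamp.

    The data stays where it lives; only matching records are returned.
    """
    results: List[Event] = []
    for source in sources:
        for event in source.search(predicate):
            # Tag each hit with its origin so analysts can pivot back to it.
            results.append({**event, "_source": source.name})
    return sorted(results, key=lambda e: e["ts"])


lake = InMemorySource("data_lake", [
    {"ts": 1, "user": "alice", "action": "login"},
    {"ts": 4, "user": "bob", "action": "login"},
])
edr = InMemorySource("edr", [
    {"ts": 2, "user": "alice", "action": "process_start"},
])

hits = federated_search([lake, edr], lambda e: e.get("user") == "alice")
for hit in hits:
    print(hit)
```

The design point is that the query layer owns the question, not the data: adding a new storage location means adding another source object, with no re-ingestion into an analytics tier.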

AI for the Analyst

AI is shifting from hype to utility. AI is being built into SIEM platforms at scale. The trajectory looks similar to Security Orchestration, Automation, and Response (SOAR), which started as standalone products before being absorbed into SIEM. Several vendors have already demonstrated mature AI features embedded into their platforms.

Natural language processing is now a basic feature. More advanced AI features include:

Agentic triage and investigations: AI summarizes alerts, stitches timelines, assigns risk, and suggests next steps. Improves MTTD and MTTR.

Guided builders and copilots: Step-by-step assistants for rule creation, hunt design, and case work. Speeds onboarding and reduces reliance on specialists.

Playbook synthesis and no-code response: Turn findings into executable workflows, with auto-generated or recommended actions. Accelerates containment and recovery.

This raises questions about the future: will dedicated AI SOC vendors remain a separate category, or will they be folded into SIEM like SOAR was? With natural language detection builders, copilots, and guided workflows that help analysts create detections faster, investigate more efficiently, and spend less time on repetitive triage, AI is quickly becoming an expected integration in platforms. Check out our article on SACR AI SOC Market Landscape For 2025 to dive deeper into this conversation.

New Pricing Models and Converged Capabilities

Other SIEM platforms are addressing the visibility vs. cost problem by moving away from legacy ingest-based pricing. We see models that are integration-based, that charge only for filtered data, or that rely on custom in-house data lakes, all aimed at giving security leaders more predictable costs without forcing compromises on visibility or retention.

Capabilities like SOAR and XDR have been pulled into the SIEM ecosystem for a long time, and now we see even more cross-convergence with adjacent platforms, bringing in capabilities like UEBA and data pipelines. This convergence reduces tool sprawl and allows teams to detect, investigate, and respond within a unified workflow. Sentinel’s deeper integrations with Microsoft Defender, Cortex XSIAM’s integration with XDR, and similar moves from other vendors illustrate how the SIEM is evolving into a central operating layer for security operations, less a standalone product and more a platform where detection, investigation, and response converge.
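The difference between these pricing approaches is easiest to see with simple arithmetic. The sketch below compares a legacy ingest-based bill with a pay-for-filtered-data model and a decoupled analytics-plus-data-lake model. Every number in it (daily volume, per-GB rates, drop and analytics fractions) is a hypothetical placeholder chosen for illustration, not a figure from any vendor’s price list.

```python
def ingest_based_cost(gb_per_day: float, rate_per_gb: float, days: int = 30) -> float:
    """Legacy model: every ingested GB is billed at the analytics rate."""
    return gb_per_day * rate_per_gb * days


def filtered_cost(gb_per_day: float, drop_fraction: float,
                  rate_per_gb: float, days: int = 30) -> float:
    """Pay only for data that survives pipeline filtering."""
    if not 0.0 <= drop_fraction <= 1.0:
        raise ValueError("drop_fraction must be between 0 and 1")
    return ingest_based_cost(gb_per_day * (1.0 - drop_fraction), rate_per_gb, days)


def decoupled_cost(gb_per_day: float, analytics_fraction: float,
                   analytics_rate: float, lake_rate: float, days: int = 30) -> float:
    """Split data between an analytics tier and a cheaper data lake tier."""
    if not 0.0 <= analytics_fraction <= 1.0:
        raise ValueError("analytics_fraction must be between 0 and 1")
    hot_gb = gb_per_day * analytics_fraction
    lake_gb = gb_per_day - hot_gb
    return (hot_gb * analytics_rate + lake_gb * lake_rate) * days


# Hypothetical inputs: 500 GB/day, 1.00 currency unit per GB in the analytics
# tier, 0.10 per GB in the lake tier.
baseline = ingest_based_cost(500, 1.00)
with_filtering = filtered_cost(500, drop_fraction=0.40, rate_per_gb=1.00)
with_lake = decoupled_cost(500, analytics_fraction=0.25,
                           analytics_rate=1.00, lake_rate=0.10)
print(baseline, with_filtering, with_lake)
```

A model like this is only as good as its inputs, but it gives buyers a consistent way to compare quotes that are expressed in different units (ingested GB, filtered GB, or tiered storage).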

Assessment methodology

For security leaders, the question is no longer whether to move past legacy SIEMs, but which modern approach fits their future. This report analyzes how vendors are redefining SIEM and provides insights to help CISOs and security teams navigate the shift and make informed decisions for the decade ahead.

To anchor these themes in practical, real-world approaches, we took a closer look at a handful of representative vendors. The vendors that we looked at (in alphabetical order) are:

Anomali

Exabeam

Microsoft Sentinel

Palo Alto Networks Cortex XSIAM

Panther Labs

SentinelOne

Vega

To evaluate how these SIEM vendors are addressing the concerns from legacy SIEM approaches, we built a structured assessment process grounded in real operational needs and use cases. Each platform was measured against a broad set of criteria covering the most important functional, technical, and operational aspects of SIEM.

Disclaimer:

The outcome of this assessment is not to place SIEM platforms in a tiered ranking, but to highlight how each is applying innovative approaches to address practitioner challenges and advance modern security operations.

SIEM Vendor Assessment

Our questions were designed to uncover how vendors are delivering on modern SIEM capabilities: how they are breaking from legacy architectures and pricing models, how they differentiate themselves in approach, and how they respond to the concerns practitioners consistently raise about cost, complexity, and visibility. This framework highlights not only what sets each platform apart, but also how SIEM as a category is evolving. Vendors are presented in alphabetical order.

Anomali SIEM

Anomali SIEM, a core component of the Anomali AI-Powered Security and IT Platform, differentiates itself from traditional platforms by placing threat intelligence at the core of its design. Instead of focusing only on log collection and correlation, it enriches data with threat intelligence context, helping analysts prioritize detections tied to relevant threat actors, industries, or geographical regions. This functionality is delivered via the Open Data Lake, which is included with the platform. Anomali also offers a decoupled architecture in which compute and storage modules are separated.

Another differentiator is the speed of data retrieval, even for data stored long-term (seven+ years). Practitioners have often raised concerns about the time required to retrieve data from legacy platforms for historical investigations. With Anomali, there is no tiering in storage; all data is kept hot for seven+ years. During the demo, we saw it retrieve years of data within seconds.

On the detection side, Anomali introduces the Anomali Query Language (AQL), supported by Anomali AI that translates natural language into structured queries, aimed at lowering the learning curve for analysts. In addition to the NLP function, Anomali AI can also extract IOCs from webpages, assist in predictive analytics, and summarize complex logs and intel briefs for rapid analysis & consumption. Content can be tailored during onboarding to match industry-specific threat patterns, and MSSPs can manage multiple tenants through the Anomali Platform. Anomali also reports very fast search performance across billions of records, allowing rapid pivoting and investigation at scale. Taken together, these capabilities position Anomali as a SIEM option focused on threat intelligence, cost efficiency, and speed.

Cost Effectiveness

Anomali’s decoupled storage and compute architecture avoids the traditional ingestion-based pricing traps that often force clients to choose which data is “worth” keeping. Instead, all data can be ingested into the open data lake, including non-security sources, without immediate financial pressure. Pricing is positioned as 40–60% lower than comparable SIEMs, and because all data remains “hot” for seven+ years, customers avoid surprise retention costs. This approach allows organizations to focus on visibility.

Deployment

The platform supports flexible deployment across SaaS, hybrid, air-gapped, and on-premises environments, with government customers often favoring the latter. Out of the box, Anomali integrates with over 125 standard log formats and most mainstream firewalls and endpoint security solutions, while also offering custom parsers where needed. Data collection is enriched by Anomali’s threat intelligence “Match” function, which automatically correlates log data with known malicious indicators. Integrations extend downstream as well, enabling automated responses such as pushing high-confidence IoCs directly into EDR or firewall systems for blocking. These are prioritized through a composite confidence score, which blends Anomali’s own intelligence with feeds from partners like CrowdStrike, Recorded Future and others. The platform is based on an open, unified data lake. Data is stored in open formats, which limits vendor dependency and allows scaling flexibility. The data lake remains under the customer’s ownership and governance, including storage and access policies.
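As an illustration of the ideas in this paragraph (correlating logs with known indicators and prioritizing them with a blended confidence score), here is a minimal sketch. It does not reflect Anomali’s actual scoring method, which is not described in detail here: the weighted-average formula, the feed names, the weights, the `dest_ip` field, and the blocking threshold of 80 are all assumptions made for the example.

```python
from __future__ import annotations


def composite_confidence(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-feed confidence scores (0-100).

    This is one simple way to blend feeds; it is an assumption, not a
    documented vendor formula.
    """
    total_weight = sum(weights[feed] for feed in scores)
    if total_weight <= 0:
        raise ValueError("at least one feed with positive weight is required")
    return sum(scores[feed] * weights[feed] for feed in scores) / total_weight


def match_logs(log_events: list[dict], indicators: dict[str, float],
               block_threshold: float = 80.0) -> tuple[list[dict], list[str]]:
    """Correlate log events with known indicators.

    Returns the matched events and the indicators whose confidence is high
    enough to push downstream (for example, to an EDR or firewall) for blocking.
    """
    matches = [e for e in log_events if e.get("dest_ip") in indicators]
    to_block = sorted({
        e["dest_ip"] for e in matches
        if indicators[e["dest_ip"]] >= block_threshold
    })
    return matches, to_block


# Hypothetical feeds and weights, chosen only for illustration.
weights = {"internal": 2.0, "partner_a": 1.0, "partner_b": 1.0}
indicators = {
    "203.0.113.10": composite_confidence({"internal": 95, "partner_a": 85}, weights),
    "198.51.100.7": composite_confidence({"partner_b": 40}, weights),
}
logs = [
    {"src_ip": "10.0.0.5", "dest_ip": "203.0.113.10"},
    {"src_ip": "10.0.0.8", "dest_ip": "198.51.100.7"},
    {"src_ip": "10.0.0.9", "dest_ip": "192.0.2.1"},
]
matches, to_block = match_logs(logs, indicators)
print(len(matches), to_block)
```

Separating “matched” from “blockable” keeps low-confidence indicators visible to analysts without letting them trigger automated enforcement.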

Detection

During onboarding, customers receive tailored content (dashboards, alert rules, and pre-built queries) aligned with their specific industry and region. Threat actor profiles and campaign intelligence are integrated into detection engineering, helping teams to focus on the most likely attack patterns. Analysts interact with detections primarily through Anomali Query Language (AQL), which is designed for scale and speed, but can be accessed more intuitively via the Anomali AI that translates natural language questions into AQL queries. Queries can be saved, reused, and converted into alerts with flexible look-back periods and throttling options. Although detection-as-code is not yet available, JSON-based content deployment is supported today, and MSSPs gain additional leverage through the platform for managing detections across multiple tenants.
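The general pattern of turning a saved query into an alert with a look-back period and throttling can be sketched as follows. This is a generic illustration and not AQL or Anomali’s alerting engine: the `AlertRule` class, its fields, and the use of a Python predicate in place of a query string are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Event = dict  # hypothetical record with a numeric "ts" (seconds) field


@dataclass
class AlertRule:
    """A saved query promoted to an alert rule."""
    name: str
    query: Callable[[Event], bool]  # stands in for a saved query
    lookback_seconds: int           # how far back each evaluation searches
    throttle_seconds: int           # minimum gap between two firings
    last_fired: Optional[float] = None

    def evaluate(self, events: List[Event], now: float) -> List[Event]:
        """Return the hits that trigger an alert, or [] if nothing fires."""
        window_start = now - self.lookback_seconds
        hits = [e for e in events
                if window_start <= e["ts"] <= now and self.query(e)]
        if not hits:
            return []
        # Throttling: suppress repeat alerts inside the throttle window.
        if self.last_fired is not None and now - self.last_fired < self.throttle_seconds:
            return []
        self.last_fired = now
        return hits


rule = AlertRule(
    name="failed_admin_login",
    query=lambda e: e.get("user") == "admin" and e.get("outcome") == "failure",
    lookback_seconds=300,
    throttle_seconds=60,
)
events = [
    {"ts": 100, "user": "admin", "outcome": "failure"},  # outside the look-back
    {"ts": 950, "user": "admin", "outcome": "failure"},
]
first = rule.evaluate(events, now=1000)   # fires on the ts=950 event
second = rule.evaluate(events, now=1010)  # suppressed by throttling
third = rule.evaluate(events, now=1100)   # throttle window has passed
print(len(first), len(second), len(third))
```

The look-back window bounds how much history each evaluation scans, while the throttle keeps a persistent condition from flooding analysts with duplicate alerts.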

Investigation

Investigation workflows are enhanced by AI and contextual intelligence. The Anomali AI guides analysts by generating or refining queries, summarizing alerts, and accelerating triage. The system enriches alerts with MITRE mapping, event entity details, and known threat actor context, giving analysts a head start on investigations. Collaboration is supported through features like “trusted circles,” which allow organizations in parent-child or partner relationships to share indicators and reports. Together, these features reduce time spent on manual enrichment and enable analysts to work from a higher-value starting point.

Reporting

On the reporting side, Anomali’s architecture allows for both granular searches and broad historical queries, with nearly infinite horizontal scaling. This ensures SOC teams can track metrics over time without being restricted by storage limits or forced into archival tiers. Operational health is managed through efficient storage mechanisms that minimize data usage while keeping all data immediately accessible. The result is a platform that supports both the compliance-driven need for long-term retention and the operational reality of quick look-backs during investigations.

Strengths

Strong focus on threat intelligence as the foundation of the platform, enabling more relevant detections.

Probably the fastest search we’ve seen

Decoupled storage and compute architecture eliminates ingestion-based pricing constraints.

Flexible deployment options across SaaS, hybrid, air-gapped, and on-premises.

Tailored onboarding content aligned to industry and geography.

Open Unified Data Lake, supporting open formats

Areas to Watch

Detection-as-code is not yet fully supported (currently only JSON-based deployment).

Market presence is smaller compared to Microsoft and Splunk, meaning fewer community-created detections and integrations.

Although storing all data in hot storage can improve retrieval speeds, it raises cost concerns because hot storage is typically more expensive than cold storage. Anomali addresses this concern with its open data lake model and decoupled architecture.

Exabeam

Exabeam positions itself as a SIEM built on a foundation of user and entity behavior analytics (UEBA), generative and agentic AI, setting it apart from platforms that rely more heavily on static correlation rules and chatbots. Instead of surfacing isolated alerts, Exabeam applies behavioral models and risk scoring to detect anomalies and link related activities into timelines, providing analysts with broader incident context and reducing alert fatigue. This UEBA-driven approach remains a core differentiator, but the company’s strategy has expanded significantly following its merger with LogRhythm.

Through the merger, Exabeam now combines its cloud-native platform with LogRhythm’s mature on-premise SIEM, creating a portfolio that spans cloud, on-premise, and hybrid deployments. The on-premise product (LogRhythm) continues to be one of the few fully self-hosted SIEMs available outside of Splunk, while the Exabeam New-Scale Platform provides scalability and rapid feature releases. Together, these offerings form what the company describes as a “one-two punch” for customers. The hybrid option, branded LogRhythm Intelligence, blends Exabeam’s UEBA capabilities with on-premise control, a differentiator for regions or industries where data residency and cost of ownership make self-hosting attractive.

Cost Effectiveness

Exabeam emphasizes cost-effectiveness through automation of both internal and external SOC workflows and reduced analyst workload. By embedding Exabeam Nova agents throughout the TDIR workflow, Exabeam helps reduce manual effort. The merger also bolstered cost flexibility: self-hosted LogRhythm customers benefit from predictable licensing and lower total cost of ownership, while the Exabeam cloud platform delivers scale by separating compute and storage. Executives highlight their ability to win large deals against Splunk on TCO grounds, especially for customers sensitive to long-term infrastructure costs.

Detection

Exabeam builds on its UEBA heritage with hundreds of behavior models, correlation rules, and anomaly detection mechanisms. Risk scoring links activity into timelines mapped to MITRE ATT&CK, giving analysts contextualized detection outcomes. Customers can extend or customize models, while out-of-the-box content accelerates time-to-value. Post-merger technology integration has also introduced AI-driven features, such as Exabeam Nova, a team of fully-integrated AI agents built for autonomous security operations. Exabeam Nova automates threat detection working in tandem with a self-learning, self-tuning, machine-learned analytics engine, combined with AI agents for real-time risk scoring and triage. Recent partnership integrations with Cribl and DataBahn further reinforce Exabeam’s strategy of positioning as an open platform that can analyze data wherever it resides, not just in its own stack. These partnerships also support end-user flexibility and efficiency, allowing customers to decouple long-term storage from their SIEM to leverage low-cost 3rd-party data lakes.

Investigation

Exabeam centralizes analyst workflows in its Threat Center, combining triage, investigation, and case management. Risk-scored timelines help analysts pivot across users, devices, and accounts. Exabeam Nova agents help reduce analyst effort by summarizing alerts, collecting evidence, and triaging threats. Exabeam Nova also suggests next steps, guiding customers to interact directly with the system for guided security outcomes. The quarterly release cadence for the LogRhythm product and monthly updates for Exabeam’s New-Scale platform have resulted in rapid delivery of new features, with more than 250 added in the last year, ensuring consistent improvements in investigation and response.

Reporting

Exabeam includes customizable dashboards for SOC metrics and prebuilt content for 28 compliance standards, which can be exported for audits or adapted internally. Exabeam Nova natural language processing allows users to create reports and dashboard visuals using plain language, saving time and effort. Multi-tenant capabilities support MSSPs, while the merged portfolio ensures both on-prem and SaaS customers can maintain visibility. Quarterly and monthly release cycles provide consistent updates to reporting and operational monitoring, ensuring parity between deployment models.

Strengths

Deep UEBA capabilities combining risk scoring and behavioral modeling

Automated timeline creation with open-ended correlation window

Broad portfolio spanning cloud, on-prem, and hybrid SIEM after LogRhythm merger

AI-driven enhancements like Exabeam Nova agents to automate manual TDIR workflows.

Outcomes Navigator, Advisor Agent, helping customers map use case coverage to desired outcomes, benchmark with industry peers, and justify additional security investments.

Strong cost competitiveness against Splunk and others on TCO grounds

Frequent release cadence (quarterly for on-prem, monthly for cloud) ensuring innovation

Areas to Watch

Lack of transparent, public pricing

Past concerns over churn following the merger, though executives now report stabilized renewals and record quarters

Heavy reliance on AI and UEBA requires SOC process adjustments

Microsoft Sentinel

Microsoft Sentinel is a cloud-native SIEM built entirely on Azure, designed to take advantage of Microsoft’s global cloud infrastructure and tight integration with the Defender product family, Entra ID, and Microsoft 365. Instead of requiring traditional SIEM deployment, Sentinel is provisioned directly as an Azure resource, giving organizations immediate access (no license required) and alignment with existing Microsoft security controls. Its pricing model is based on data ingestion, which offers flexibility and discounts through features like tiering, filtering, commitment tiers and data lake storage, but it can also lead to unpredictable costs if not carefully managed. On the detection side, Sentinel benefits from a rich ecosystem of community-driven content written in KQL, Microsoft’s query language, which has become a de facto standard in the security industry. The platform also emphasizes automation, with Azure Logic Apps enabling low-code SOAR playbooks. Sentinel’s combination of cloud-native scale, deep ecosystem integration, and MSSP-friendly features like Azure Lighthouse makes it one of the most widely adopted SIEMs, though its reliance on the Microsoft stack and ingestion-based pricing remain important considerations.

Cost Effectiveness

Microsoft takes a pay-as-you-go approach to pricing that ties costs to data ingestion, but it aims to address ingest-based pricing concerns with data caps, data collection rules (DCRs), filtering, and access to a low-cost storage option via Microsoft Sentinel data lake. Data can be stored in the data lake or in Log Analytics workspaces. Options for private link connections and customer-managed clusters are available in Log Analytics with client-controlled encryption keys.

Deployment

Microsoft Sentinel runs exclusively in Azure as a customer-owned resource and integrates deeply with Microsoft Defender, Entra ID, Microsoft 365, and other native services, while also supporting most major third-party security tools.

Microsoft takes a convergence approach, combining Defender XDR capabilities within Microsoft Sentinel. For MSSPs, Sentinel offers multi-tenant management through Azure Lighthouse or the MTO portal, which makes it one of the more, if not the most, MSSP-friendly SIEMs. While Sentinel lacks flexibility in deployment models, it compensates with strong alignment to organizational security controls in areas such as data management and network protection.

Detection

Sentinel provides out-of-the-box detection content, published in Kusto Query Language (KQL). Detection content is organized in the “Content Hub” by data source or theme (e.g., Endpoint Security Essentials, SOAR Essentials).

Content can be managed as code through GitHub or Azure DevOps, enabling version control and structured workflows. The threat hunting module supports both pre-written and custom KQL queries, with options to re-run and compare results.

It includes search and query builder functions to support analysts with varying levels of KQL proficiency. Detections can be mapped to the MITRE ATT&CK framework. Analysts can monitor detection rule health, review an audit trail of changes, and adjust rules as required.

Investigation

Sentinel’s Alert overview provides access to past alerts, comments, and resolution steps. It relies on KQL for investigations and supports pivoting between related attributes (e.g., hostnames, IP addresses, user accounts).

Sentinel has native case management but also supports integration options for external platforms like ServiceNow and Jira. Sentinel’s “Tasks” feature allows embedding of standard operating procedures into workflows.

Sentinel’s User and Entity Behavior Analytics (UEBA) enables anomaly detection across diverse data sources, while the Notebooks feature supports advanced analysis and machine learning in Python. Additionally, Sentinel integrates seamlessly with Azure Logic Apps, providing a low-code solution that simplifies the creation of SOAR playbooks and lowers the barrier to automation.

At the time of writing, Sentinel does not include built-in AI functionality for summarizing or triaging alerts. This capability is available through a separate product, Microsoft Copilot for Security, which costs extra and is charged based on utilization through SCUs (Security Compute Units).

Reporting

Built-in dashboards include SOC metrics such as mean time to detect, mean time to respond, and alert volumes. Audit and diagnostic logs provide operational monitoring across platform components. Reporting data for MSSPs can be aggregated through Azure Lighthouse or the MTO portal, where MSSPs can pivot between their customers.

Strengths

Deep integration with the Microsoft ecosystem, particularly Defender products, Entra ID, and Microsoft 365.

Cloud-native design with scalable ingestion and storage options.

Rich out-of-the-box content and strong community support around KQL.

Flexible automation through Logic Apps and strong SOAR integration.

Granular access management aligned with Azure IAM.

MSSP multi-tenancy support via Azure Lighthouse.

Areas to Watch

Deployment restricted to Azure, limiting flexibility for hybrid or multi-cloud strategies.

Consumption-based pricing model can lead to unpredictable costs if not carefully managed.

Cost-optimization features require expertise to configure effectively.

Investigation features are advanced but lack some of the AI-driven capabilities offered by emerging competitors.

Palo Alto Networks Cortex XSIAM

Cortex XSIAM (Extended Security Intelligence and Automation Management) is Palo Alto Networks’ cloud-native security operations platform that brings together SIEM, XDR, SOAR, NDR, ITDR, CDR, and exposure management in a single environment. It centralizes telemetry from endpoints, networks, identity systems, cloud services, and third-party tools, normalizing data into a common model to enable consistent detection, investigation, and response. Detection combines behavioral analytics, correlation logic, and threat intelligence, supported by machine learning models that group related alerts, score incidents, and provide playbook-driven response options. Investigations are organized into a case-based model where events are automatically stitched into timelines to reveal attack sequences, while integrated exposure management helps organizations identify and prioritize vulnerabilities before they are exploited. The platform is designed to reduce manual analyst workload through automation and to provide a consolidated view of security operations across diverse data sources.

Cost Effectiveness

Palo Alto Networks does not publish detailed public pricing, but customers can purchase XSIAM as part of broader solution packages, for example alongside Cortex XDR and other modules, which often provide cost advantages compared to standalone solutions. The platform emphasizes value through automation and efficiency, aiming to reduce analyst workload by correlating events automatically, prioritizing high-risk incidents, and embedding playbooks for common tasks. Customers report that when deployed as part of Palo Alto Networks’ broader suite, the overall cost can be significantly lower than using multiple vendors. An online ROI calculator allows customers to estimate their total cost savings and return on investment.

Deployment

Cortex XSIAM is delivered as a cloud-native SaaS service within the Cortex ecosystem. It ingests data from a wide variety of sources, including endpoints, firewalls, identity systems, cloud services, and third-party tools, including EDR. While Palo Alto Networks recommends its own agent to achieve the highest level of data granularity, it also supports integrations with external providers.

The architecture includes both cloud collectors for SaaS and infrastructure data, and BrokerVM collectors for on-premises environments. Data is normalized through a common information model, ensuring consistent structure across ingestion pipelines. This design allows organizations to operate in hybrid environments while still using XSIAM as a centralized SOC platform.

Detection

Detection capabilities in Cortex XSIAM are built around a combination of threat intelligence, ML-based analytics, and customizable rules. The system uncovers threats with 10,000+ detections, 2,600+ ML models, and IOC-based indicators, correlating events across diverse data sources for deeper insight. Detection rules can be extended or fine-tuned by analysts, and global detection content is updated continuously.

Behavioral sensors are utilized by the analytics engine to monitor user, endpoint, and network activity, surfacing anomalies and scoring them according to risk. All detections and security events are mapped to the MITRE ATT&CK framework, giving analysts a familiar taxonomy to evaluate adversary behavior. AI is used to generate summaries of detection events, providing analysts with clear explanations and recommended next steps. To encourage trust, machine learning models are designed to be explainable rather than opaque, with clustering and scoring logic visible to analysts.

Investigation

Investigation workflows are centralized into a case-based model. XSIAM automatically groups related issues, such as security events, into cases to provide a complete picture of a security incident. Analysts work from the Cases page to review open investigations, assign ownership, and update statuses. Cases include timelines where events are automatically stitched together and scored, making it easier to see the sequence of actions leading up to an incident.
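To make the idea of grouping related events into a case timeline more concrete, here is a minimal sketch that merges alerts sharing any entity (host, user, IP) and orders each group chronologically. Grouping by shared entities with a union-find structure is our simplifying assumption for illustration; it is not a description of how XSIAM actually stitches or scores cases, and the `Alert` fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    # Hypothetical alert shape used only for this sketch.
    alert_id: str
    timestamp: int            # epoch seconds
    entities: frozenset[str]  # hosts, users, or IPs touched by the alert


def group_into_cases(alerts: list[Alert]) -> list[list[Alert]]:
    """Group alerts that share an entity (transitively) and sort each group by time."""
    parent = {a.alert_id: a.alert_id for a in alerts}

    def find(x: str) -> str:
        # Follow parent links to the group representative, compressing the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    first_seen: dict[str, str] = {}  # entity -> first alert that mentioned it
    for alert in alerts:
        for entity in alert.entities:
            if entity in first_seen:
                parent[find(alert.alert_id)] = find(first_seen[entity])
            else:
                first_seen[entity] = alert.alert_id

    cases: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        cases[find(alert.alert_id)].append(alert)
    return [sorted(case, key=lambda a: a.timestamp) for case in cases.values()]


alerts = [
    Alert("a1", 300, frozenset({"host-1", "alice"})),
    Alert("a2", 100, frozenset({"alice"})),
    Alert("a3", 200, frozenset({"10.0.0.5"})),
    Alert("a4", 50, frozenset({"host-1"})),
]
for case in group_into_cases(alerts):
    print([a.alert_id for a in case])
```

Here `a1`, `a2`, and `a4` land in one case because they share `alice` or `host-1`, while `a3` remains on its own.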

XSIAM provides multiple tools to support deeper analysis. The War Room offers collaborative triage for teams, while the Work Plan organizes investigative tasks step by step. Analysts can pivot across entities such as users, hosts, files, and IP addresses, and can retrieve additional details like asset information, indicator data, and malware analysis. Integrated hunting capabilities allow ad hoc queries through XQL, scheduled or saved queries, and dashboards with drill-downs.

Response is built into the investigation process through Palo Alto Networks’ native SOAR capability. Playbooks are embedded for common incident types, with options for manual, semi-automated, or fully automated execution. Analysts can take direct endpoint actions such as isolation, script execution, or file quarantine. Service level timers, custom statuses, and case resolution tracking provide operational control over the investigation lifecycle.

Reporting

Reporting features include dashboards for SOC operations, exposure management, and incident activity. The exposure dashboard applies a prioritization funnel that filters large volumes of vulnerabilities into a smaller set of actionable cases, using factors such as exploitability predictions and external intelligence. This helps organizations move from vulnerability overload to a manageable list of prioritized risks.
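A prioritization funnel of this kind can be sketched as a filter followed by a ranking step. The example below is only an illustration: the field names, the threshold, and the ranking order are assumptions of ours, and the identifiers are placeholders rather than real CVEs.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    # Hypothetical fields standing in for the factors named in the text.
    vuln_id: str
    exploit_probability: float  # predicted likelihood of exploitation, 0.0 to 1.0
    known_exploited: bool       # flagged by external threat intelligence


def prioritization_funnel(
    vulns: list[Vulnerability], min_probability: float = 0.5
) -> list[Vulnerability]:
    """Keep findings that are likely or known to be exploited, most urgent first."""
    actionable = [
        v for v in vulns
        if v.known_exploited or v.exploit_probability >= min_probability
    ]
    # Known-exploited findings rank first, then higher predicted probability.
    return sorted(
        actionable,
        key=lambda v: (v.known_exploited, v.exploit_probability),
        reverse=True,
    )


vulns = [
    Vulnerability("VULN-1", 0.02, False),
    Vulnerability("VULN-2", 0.91, False),
    Vulnerability("VULN-3", 0.10, True),
    Vulnerability("VULN-4", 0.60, True),
]
print([v.vuln_id for v in prioritization_funnel(vulns)])
```

Four findings go in and three come out, with the low-probability, unflagged `VULN-1` dropped at the first stage.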

Operational reporting includes standard SOC metrics such as case resolution times, alert volumes, and investigation backlogs. Because XSIAM unifies data from multiple streams, reporting is consolidated in a single console, reducing the need for analysts and managers to aggregate data from multiple tools.

Strengths

Cloud-native architecture designed for scale and flexibility.

Ability to unify diverse data pipelines into a single timeline for analysis.

Native SOAR at no additional cost.

AI applied in explainable ways, with event summaries and recommendations that retain transparency.

Strong threat intelligence derived from Palo Alto Networks’ global customer footprint.

Case management for investigations that improves context and collaboration.

Exposure management helps organizations identify, prioritize, and manage vulnerabilities across the attack surface including cloud, endpoints, and networks.

FedRAMP High authorization helps agencies transform security operations while meeting regulatory requirements.

Areas to Watch

Lack of transparent pricing information.

Market perception of Palo Alto Networks as primarily a network security vendor may create adoption challenges.

Analytics quality may be reduced when using non-Palo Alto agents in third-party ecosystems, although this could also result from coverage gaps in the external sources.

Automation-first approach may require cultural and process adjustments for SOC teams accustomed to traditional SIEM workflows.

Panther

Panther takes a modern, security data lake approach to SIEM by separating compute from storage. They’ve also challenged the traditional ingestion-based pricing model by offering one based on the number of data sources. Practitioners have often been vocal about their issues with ingestion-based models, such as compromising visibility for the sake of price and dealing with unpredictable costs, so this model presents an opportunity to make pricing more predictable. Their decoupled architecture also allows for flexible deployment: Panther can be deployed either as a SaaS solution or within a customer’s own AWS environment.

Another interesting deviation Panther makes from traditional SIEM platforms is its detection-as-code approach, which uses Python (in addition to other low-code options) for detection content creation. We were surprised to see the Python approach, but we see the value of introducing a widely known scripting language. It enables teams to treat detection logic like code that is easy to version control, export, and import, bringing SIEM management in line with modern software CI/CD practices. While this may raise concerns for practitioners who are more familiar with traditional approaches, we feel the Python model could serve as a strong foundation for AI-driven detection and response.

Panther addresses practitioners’ concerns with operational burden and alert fatigue through its AI-driven features. With capabilities such as AI-powered alert triage, query generation, and rule building, Panther enables teams to codify runbooks, guide AI agents, and accelerate detection and response.

Cost Effectiveness

The pricing structure combines a platform fee with a charge based on the number of data sources. Pricing scales with organization size across “starter,” “growth,” and “enterprise” tiers, and licenses for data sources are purchased in bulk for predictable budgeting.

Panther employs a decoupled architecture and single-tenant deployment model, in which compute and storage are separated and managed independently from licensing. Self-hosted customers can apply their existing AWS commitments to cover storage and compute expenses, while SaaS customers are billed at retail rates for the infrastructure consumed, with Panther managing the underlying services.

This model is a significant shift from per-GB pricing, but may require planning in environments with many small, diverse log sources.
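The trade-off can be shown with simple arithmetic. The sketch below compares a per-source license with a per-GB license for two hypothetical environments. Every rate and volume here is invented for illustration and does not reflect Panther's or any vendor's actual pricing; infrastructure costs for compute and storage are left out.

```python
def per_source_cost(num_sources: int, platform_fee: float, price_per_source: float) -> float:
    """Annual license cost when pricing is based on the number of data sources."""
    return platform_fee + num_sources * price_per_source


def per_gb_cost(daily_gb: float, price_per_gb: float, days: int = 365) -> float:
    """Annual license cost when pricing is based on ingested volume."""
    return daily_gb * days * price_per_gb


# Hypothetical rates, chosen only to show the shape of the trade-off.
PLATFORM_FEE = 50_000
PRICE_PER_SOURCE = 5_000
PRICE_PER_GB = 0.50

# Environment A: few sources, high volume.
print(per_source_cost(10, PLATFORM_FEE, PRICE_PER_SOURCE), per_gb_cost(1_000, PRICE_PER_GB))

# Environment B: many small sources, low volume.
print(per_source_cost(60, PLATFORM_FEE, PRICE_PER_SOURCE), per_gb_cost(200, PRICE_PER_GB))
```

Under these assumed numbers, the per-source model is cheaper for environment A and considerably more expensive for environment B, which is the planning concern raised above.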

Deployment

Panther can be deployed in two ways:

Customer-hosted in AWS, giving organizations control over data residency and security while leveraging existing cloud discounts.

SaaS deployment managed by Panther, offering a turnkey option for teams seeking simplicity.

The platform supports forwarding logs to S3/Blob Storage/GCS or via intermediaries such as Logstash or Vector, with native collectors under development.

Security controls make heavy use of AWS regional isolation, ensuring that data remains within governance boundaries. Access management is handled with role-based controls and SSO integration, providing fine-grained permission management.

Detection

Panther leans into detection as code. Customers can write rules in Python, low-code, or structured query languages, manage them through GitHub or GitLab, and integrate them into CI/CD workflows. This approach enables testing, version control, and rapid deployment. The system normalizes logs into a standard schema, supporting real-time streaming detection, IOC extraction, and correlations. Analysts can also configure “signals,” lightweight indicators of suspicious behavior that do not trigger alerts but enrich investigations.
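To show what treating detection logic like code looks like in practice, here is a minimal sketch of a Python rule together with a unit test that a CI pipeline could run before deployment. It is loosely modeled on the common pattern of a function that receives an event and returns a boolean; the event fields, the threshold, and the use of plain dictionaries are illustrative assumptions and are not copied from Panther's SDK.

```python
# Hypothetical detection rule; field names and threshold are assumptions.
FAILED_LOGIN_THRESHOLD = 5


def rule(event: dict) -> bool:
    """Return True when the event should raise an alert."""
    return (
        event.get("action") == "login"
        and event.get("outcome") == "failure"
        and event.get("failed_attempts", 0) >= FAILED_LOGIN_THRESHOLD
    )


def title(event: dict) -> str:
    """Human-readable alert title built from the event."""
    return f"Repeated failed logins for {event.get('user', 'unknown user')}"


def test_rule() -> None:
    """Unit test that can run in CI before the rule is deployed."""
    suspicious = {"action": "login", "outcome": "failure", "failed_attempts": 7, "user": "alice"}
    benign = {"action": "login", "outcome": "success", "failed_attempts": 0, "user": "bob"}
    assert rule(suspicious)
    assert not rule(benign)
    assert title(suspicious) == "Repeated failed logins for alice"


test_rule()
```

Because the rule is an ordinary function, it can be reviewed in a pull request, tested against sample events, and rolled back like any other code change.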

AI extends these capabilities by automatically generating detection rules, tests, and queries from natural language. Through the Model Context Protocol (MCP), Panther integrates AI agents into detection engineering workflows, dramatically reducing the time needed to build and validate detections from hours to minutes. Out-of-the-box detection content is also provided by Panther’s threat research team, with integrations available for threat intelligence sources.

Investigation

Panther supports investigations with entity pivoting, timeline correlation, and a case management module in development. Analysts can consolidate alerts, detections, and searches into a single artifact.

AI agents play a central role in investigations: they auto-triage alerts, summarize behaviors, highlight key indicators, and recommend next steps. They can also pivot to related behaviors not directly tied to the initial alert, automatically enriching investigations with real-time context. Customers can interact with AI via a Slack bot, making investigations more collaborative and accessible. Over time, Panther’s AI agents are expected to automatically run on every alert, generate risk scores, and support auto-closing or escalating incidents.

Reporting

Reporting features include customizable dashboards, PDF summaries, and scheduled SQL queries. Standard SOC metrics such as alert volumes, rule health, and detection coverage can be tracked.

MSSPs can use APIs for cross-tenant oversight, and dashboards support both standard and custom reporting. Operational monitoring highlights integration errors and failed detections, giving SOC teams visibility into pipeline health.

Strengths

Pricing model based on data sources rather than ingestion volume, enabling predictable costs.

Deployment flexibility in customer’s AWS environment or as SaaS.

Detection as code with CI/CD integration.

AI-powered analyst features for agentic triage, summarization, and recommendations.

Security and data residency controls through AWS regional isolation.

MSSP-friendly features and APIs for multi-tenant visibility.

Areas to Watch

Data-source licensing may be less efficient for organizations with many low-volume connectors.

Native log collectors are still under development for some use cases, such as pulling logs from endpoints.

AI-powered functions are emerging but are still early in their development.

Strong AWS alignment may limit appeal for non-AWS cloud strategies.

SentinelOne

SentinelOne’s approach to advancing SIEM beyond traditional models is centered on strengthening AI features and use cases. Its Singularity AI SIEM addresses practitioner concerns with latency in legacy platforms, such as searches that only run after indexing, delays in handling large data volumes, and the tradeoff between speed and scale. The platform overcomes these challenges with a schema-free, no-index, columnar architecture that leverages parallel execution. This design keeps all telemetry “always hot,” enabling real-time detections, faster hunts, and responsive dashboards without delays. To mitigate concerns around higher retention costs, SentinelOne offers integration with the Singularity Data Lake.

On top of that data plane, Purple AI helps analysts by turning natural-language questions into investigations, auto-documenting findings, compressing time to respond, and even suggesting possible next steps. Automation is built in: Hyperautomation provides no-code, drag-and-drop workflows with direct response actions, reducing reliance on external SOAR tools and lowering operational overhead. Ingestion is open and OCSF-native, pulling in both structured and unstructured data from first- and third-party sources, including other EDRs such as CrowdStrike. The platform emphasizes real-time detection at ingest, in contrast to traditional SIEMs that require indexing before detections can be applied. Its “always hot” long-range retention keeps data instantly queryable for forensics and compliance. SentinelOne also takes a different approach to pricing by aligning costs to average utilization and queries, avoiding the peak ingest penalties common in legacy SIEMs.

SentinelOne’s recent acquisition of Observo AI underscores the growing importance of security data pipeline platforms in modern SOC architecture and the broader trend of combining adjacent technologies into the SIEM fabric. Observo AI’s pipeline capabilities can strengthen SentinelOne’s ingestion and enrichment layers, enabling broader integration support, real-time filtering, and inline detection before data reaches the SIEM or data lake.

Cost Effectiveness

SentinelOne applies a usage-based pricing model that charges on average daily ingest and query load rather than peak volumes. This approach eliminates unpredictable consumption-based pricing that traditionally penalizes peak ingestion and leads to cost overruns.
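The difference between billing on peak and billing on average is easiest to see with numbers. The sketch below uses an invented 30-day ingest series with a single spike. It illustrates the general principle only and is not SentinelOne's billing formula.

```python
from statistics import mean


def billable_gb_peak(daily_ingest_gb: list[float]) -> float:
    """Volume a peak-based model would size the license against."""
    return max(daily_ingest_gb)


def billable_gb_average(daily_ingest_gb: list[float]) -> float:
    """Volume an average-based model would size the license against."""
    return mean(daily_ingest_gb)


# Hypothetical month: 29 quiet days at 100 GB and one incident-driven spike.
daily_ingest_gb = [100] * 29 + [1000]

print("peak-based:", billable_gb_peak(daily_ingest_gb))
print("average-based:", billable_gb_average(daily_ingest_gb))
```

In this made-up series, one noisy day raises the peak tenfold but lifts the monthly average by only 30 GB, which is why averaging dampens the cost impact of short bursts.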

Deployment

SentinelOne’s AI-SIEM is delivered as a cloud-native SaaS solution and supports multi-tenant hierarchies to segregate data, making it applicable for large enterprises and managed service providers. The platform also offers compliance-oriented deployment options, including local VPC hosting in regulated regions, to address data sovereignty requirements for customers operating under strict mandates.

The deployment leverages the Singularity Data Lake, providing a unified foundation that spans endpoint, cloud, and SIEM functions with a consistent interface. This unified approach reduces the ramp-up time for analysts already familiar with SentinelOne’s EDR platform.

Detection

SentinelOne’s detection capabilities are powered by Purple AI and built around their Singularity Data Lake architecture. The system’s detection approach leverages AI-powered, agentic reasoning-driven capabilities that go beyond traditional rule-based detection. Purple AI acts as an intelligent assistant that can interact with the SIEM in plain English, effectively lowering the skill barrier for threat hunting compared to legacy SIEM approaches.

Singularity Hyperautomation’s drag-and-drop canvas turns any alert or Purple AI verdict into a playbook that can isolate a host, disable a user or call out to any REST API without writing code.

Investigation

SentinelOne’s investigation capabilities center around Purple AI, which provides autonomous investigation support and context-aware analysis. The platform eliminates the traditional hot/cold data storage distinction, meaning analysts can run ad-hoc hunts or build real-time dashboards on full-fidelity data without the typical lag or indexing overhead found in legacy SIEMs.

The unified Singularity interface allows analysts to investigate across endpoint, cloud, and SIEM data from a single console, reducing the context loss and latency that adversaries exploit when analysts must switch between tools.

Purple AI assists in investigations by providing plain English interaction capabilities, automated reasoning, and response recommendations, addressing analysts’ concerns about operational overhead.

Reporting

The platform provides reporting capabilities through its unified data lake architecture, enabling real-time dashboards and historical analysis. The always-hot data architecture ensures that reporting queries can access years of data instantly without the rehydration delays common in legacy SIEMs.

Strengths

Schema-free, no-index, columnar design enables real-time detections, faster hunts, and instant dashboards.

Purple AI converts natural language queries into investigations, auto-documents findings, and suggests next steps.

Usage-based pricing charges on average daily ingest and query load, avoiding peak ingest penalties.

Cloud-native deployment, OCSF-native ingestion, Singularity Data Lake, and Observo AI create a unified platform.

Areas to Watch

While mitigated by the Data Lake, “always hot” long-range retention may drive higher infrastructure and storage costs compared to cold-tiered models.

Heavy use of AI-driven investigation and automation may raise regulatory and privacy concerns in sectors with strict compliance mandates.

Observo AI will need to be integrated in a way that keeps customers free from vendor lock-in.

Vega

Vega is a security operations platform currently emerging from stealth with $65 million in seed funding. It positions itself as an alternative that can be used in parallel with a SIEM or as a complete replacement. Vega’s model emphasizes connecting directly to object storage or existing SIEM platforms, applying a federated detection layer, and enabling customers to run queries and detections across multiple environments without moving data into a single repository. For organizations that want to migrate entirely, Vega also offers its own storage solution as a direct alternative to existing SIEMs.

Vega challenges the traditional ingest-based pricing model by charging only for data it indexes outside of a customer’s existing SIEM, such as logs stored in S3 or Databricks. Data that remains in a SIEM is not double-charged when accessed by Vega. Because object storage is less expensive than SIEM storage, and Vega indexes data in place rather than duplicating it, organizations can expand visibility without incurring significant egress or ingestion costs.

Investigations in Vega are structured through issues and cases, allowing analysts to triage alerts, assign owners, and track status through resolution.

Cost Effectiveness

Vega provides a federated search option that can span all existing SIEMs and data lakes the customer may own, along with its own data lake offering. It inverts the traditional SIEM pricing approach by charging only for data that it indexes outside of a customer’s existing SIEM, such as logs stored in S3 or Databricks. Data that remains in a SIEM is not double-charged when accessed by Vega.

This approach is marketed as delivering cost savings of 70–80% compared to traditional SIEMs. Because object storage is cheaper than SIEM storage, and Vega indexes data in place rather than duplicating it, organizations can expand visibility without incurring large egress or ingestion costs. For enterprises with high data volumes or dark data already in blob storage, the model can substantially reduce total cost of ownership.

Deployment

Vega is deployed as a distributed platform that sits on top of existing SIEMs, data lakes, or object storage. Its architecture allows it to coexist with tools like Splunk, Sentinel, or Elastic, while gradually taking over workloads. Customers can begin by augmenting their SIEM and eventually migrate more fully as confidence grows.

The platform uses site collectors for on-premises environments and can also ingest directly via syslog, webhooks, or endpoint brokers. Data is normalized to the Open Cybersecurity Schema Framework (OCSF) by default, ensuring consistency across sources. Indexes can be stored in the customer’s own cloud environment or in Vega’s storage cloud, providing deployment flexibility.

Detection

At the core of Vega is a federated detection engine, described as a “detection fabric layer.” This engine enables correlation and searching across diverse data sources without centralizing them. Detections can come from three sources: those created by users, those provided by Vega in its library, and those imported from external SIEMs. Rules from Splunk, Sentinel, or other platforms can be translated and executed within Vega, decoupling detection content from storage.
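Conceptually, a federated layer sends the same query to each backend, lets every backend evaluate it where the data lives, and merges only the matching events. The sketch below illustrates that pattern with in-memory stand-ins for a SIEM and an object store. The class names, the `search` interface, and the event fields are all hypothetical and do not describe Vega's implementation.

```python
from typing import Callable, Iterable, Protocol

Event = dict
Predicate = Callable[[Event], bool]


class DataSource(Protocol):
    """Anything that can evaluate a query against its own data in place."""

    name: str

    def search(self, predicate: Predicate) -> Iterable[Event]: ...


class InMemorySource:
    """Stand-in for a SIEM, data lake, or object store in this sketch."""

    def __init__(self, name: str, events: list[Event]) -> None:
        self.name = name
        self.events = events

    def search(self, predicate: Predicate) -> list[Event]:
        # Filtering happens inside the source; only matches leave it.
        return [e for e in self.events if predicate(e)]


def federated_search(sources: Iterable[DataSource], predicate: Predicate) -> list[Event]:
    """Run one query across every source and merge the hits into a single timeline."""
    hits = []
    for source in sources:
        for event in source.search(predicate):
            hits.append({**event, "_source": source.name})  # tag where the hit came from
    return sorted(hits, key=lambda e: e["time"])


siem = InMemorySource("siem", [
    {"time": 2, "user": "alice", "action": "login_failed"},
    {"time": 5, "user": "bob", "action": "login"},
])
lake = InMemorySource("object_storage", [
    {"time": 1, "user": "alice", "action": "login_failed"},
    {"time": 3, "user": "alice", "action": "bulk_download"},
])

for hit in federated_search([siem, lake], lambda e: e["user"] == "alice"):
    print(hit)
```

The design choice to return only matches, tagged with their origin, is what allows correlation across sources without first copying all raw data into one repository.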

The detection layer relies on a KQL engine and is supported by AI-driven normalization and parser creation. Vega’s system imports threat intelligence feeds, generates detections on demand, and uses natural language to KQL translation to lower the barrier for threat hunters. Proprietary reverse-indexing technology allows queries on object storage to run quickly, with compression rates of up to 80% when optimized for speed.

Investigation

Vega structures investigations through issues and cases, enabling analysts to triage alerts, assign owners, and follow status through resolution. The “Explorer” module provides a gap analysis, showing which telemetry sources are missing and how prepared the environment is for specific attack scenarios. This supports proactive readiness assessments as well as reactive investigations.

An upcoming AI-assisted triage agent will populate notebooks and playbooks for common detections, automate first-level alert handling, and suggest remediation actions. Analysts can pivot between correlated entities, review historical event frequency, and apply playbooks for containment or response. Case management is integrated with role-based access control and SSO, supporting both enterprise SOCs and MSSPs.

Reporting

Reporting capabilities are supported through dashboards, visibility assessments, and investigation tracking. SOC metrics such as detection coverage, alert volumes, and case resolution are included, alongside custom dashboards for MSSPs managing multiple tenants. Documentation and tutorials are integrated into the platform, with AI-assisted features that help generate queries and guide analysts in creating new detections or dashboards.

Strengths

Distributed architecture that removes the need for centralized log aggregation.

Cost model that leverages inexpensive object storage and indexes data in place rather than duplicating it.

Federated detection engine that can operate across multiple SIEMs and data lakes.

AI support for parser creation, detection engineering, and natural language to query translation, reducing entry barriers for SOC teams.

Explorer module that provides gap analysis and telemetry readiness assessments.

Areas to Watch

The platform is new and still emerging from stealth, with market adoption and ecosystem maturity yet to be proven.

Organizations heavily invested in a single SIEM vendor may hesitate to introduce an additional layer.

Performance claims around indexing and querying object storage need validation at scale in real production environments.

Heavy reliance on AI for detection engineering and triage may create trust and explainability challenges for some SOCs.

SIEM market evolution - SACR prediction

Looking ahead, our team predicts that the SIEM market is likely to evolve along two distinct paths:

Decoupled Distributed Architectures

The first path centers on the emergence of decoupled, technology-agnostic SIEMs. These platforms will operate as a security analytics overlay that can query and analyze data wherever it resides, whether in a vendor’s data lake, object storage, or third-party environments. This approach provides flexibility, cost control, and choice. Some vendors are already pursuing this model, creating federated detection layers that decouple analytics from storage and reduce dependency on a single stack. Over time, such architectures may enable organizations to combine best-in-class analytics with best-in-class data management, unlocking both efficiency and innovation.

Convergence and Bundling with SDPP

The second path is one of convergence and bundling. Here, SIEMs increasingly become the central pillar of a broader security ecosystem, tightly integrated with security data pipeline platforms (SDPP), endpoint, identity, cloud, and network security tools. This strategy is exemplified by moves from CrowdStrike, Palo Alto Networks, and Microsoft, where SIEM capabilities are packaged with XDR, SOAR, and adjacent security offerings. For customers, this creates a coherent ecosystem with seamless integration, unified workflows, and simplified vendor management, at the cost of reduced flexibility and greater dependence on a single provider.

Both directions respond to practitioner pain points but offer contrasting value propositions: openness and choice versus consolidation and cohesion. The market will likely continue to fragment along these lines, with buyers aligning based on their organizational maturity, risk appetite, and technology philosophy.

Conclusion

The future of SIEM is not about whether the technology survives. Rather, it is about how it reinvents itself. Whether through decoupled architectures that let organizations choose where compute and storage reside and act as analytics overlays across distributed data, or through tightly bundled ecosystems that unify the entire security stack, SIEM will remain central to the SOC. The question for security leaders is which path aligns with their vision: maximum flexibility and independence, or maximum integration and simplicity.
